Hi everyone!

For the Winter 2025 edition of the Osijek Competitive Programming Camp (OCPC), we are working with the National University of Singapore (NUS) to host a mirror of the OCPC in Singapore from 22 to 26 Feb 2025. The mirror will consist of 4 training contests, which are a subset of the 7 main contests of the OCPC (scheduled for 15-23 Feb 2025 in Osijek, Croatia and online; it will be officially announced later).

The contest days will be 22, 23, 25 and 26 Feb, with 24 Feb as a rest day. The mirror takes place right before the ICPC APAC Championship 2025, scheduled for 27 Feb-2 Mar, and is a good training opportunity for its participants. Please note that the mirror is a private initiative of NUS and OCPC, and is not an official part of the ICPC event.

If you want to get a feel for the contests, refer to this comment for past public OCPC contests.

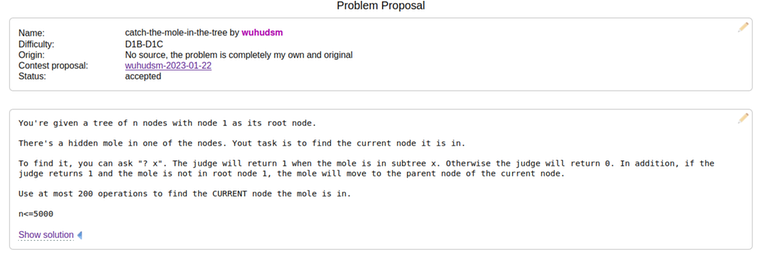

We are still looking for problem-setters for this edition of the OCPC. If you are interested in problem-setting, please contact adamant. We are especially interested in problem-setters who can come onsite to give live editorials. Refer to this post for more details.

Registration

The participation fee for the mirror is 50 SGD per person, and participation is in person only. Please note that the fee does not include travel, accommodation or meal expenses. If you want to participate, please register here before 10 Feb 2025.

FAQ

Will the contest system be the same as at the ICPC APAC Championship?

You will use the same physical set-up, but we will be in a different room at NUS. The online judge will also very likely be different.

Are teams that are not attending the ICPC APAC Championship allowed to come?

Yes, you are welcome to come.

What is included in the costs?

In addition to the contest system and problems, the fee covers snacks and a final dinner. Again, it does not include travel, accommodation or other meal expenses.

If you have any other questions, please write them in the comments below!