There seems to be a general trend of problem-setters going out of their way to ensure that their problems aren't solvable by AI. This obviously restricts the meta to the kinds of problems that AI is bad at, which in practice seem to be primarily ad-hoc problems that require multiple observations. This approach doesn't always (and in my opinion, rarely does) yield the most enjoyable or natural problems for humans.

The only motivation for this tendency is to ensure that CF ratings and leaderboards in contests retain some meaning.

What I want to express in this blog:

I think we should stop intentionally making anti-GPT problems and optimize problem-setting for human enjoyment, with a complete disregard for how AI models (and people using said models to cheat) perform on these problems.

I will try to explain why I think so below.

There are only two ways (distinct in their relevance to CP) in which AI gets better in the future:

- It becomes smarter than humans, and creating problems that are unnaturally hard for it (relative to other kinds of problems) becomes infeasible because it no longer has weaknesses in areas where humans are strong. This seems like a very probable outcome based on how quickly it has improved in the last few years (I can recall discussions back in 2022 in which people made fun of GPT's incompetence and claimed it would never be able to solve even slightly non-trivial problems due to fundamental issues in how AI models work). However, I don't have any real knowledge about how AI works or what the current AI "scene" looks like, so let's not focus too much on whether this is bound to happen.

Let's say it does happen in 5 years. What happens to CP then? Assuming that sites like CF don't go down due to a lack of revenue (I don't actually know how CF generates revenue), we would realize that since there is no way to prevent misuse of AI, we might as well maximise enjoyment for actual humans (enjoyment for humans + cheating > no enjoyment for humans + cheating).

Now, you may ask: how is the future relevant to the present moment? Well, I think it's because most people are subconsciously thinking of the current decision to make anti-AI problems as going down a path on which AI never makes CF ratings meaningless, at the cost of some permanent but minor decrease in problem quality (akin to making greedily optimal choices in an optimization problem; they aren't seeing far enough). If one does recognize that ratings will become meaningless in a fairly short amount of time (5 years being a good estimate), then one views the two choices in a different light:

- Keep making anti-GPT problems, which become increasingly hard to create, and less pleasant for humans to solve, as AI keeps getting smarter, cheaper and faster. This also entails dealing with the countless blogs and comments by crying cyans about how a guy using o69 caused his rating delta to go from +2 to -10. Do this for 5 years until there is a pivotal moment when people realize the futility of this charade. If you were to look back upon the last few years from that point, giving up the prestige and meaning we attach to ratings in exchange for preserving the actual enjoyment we derive from this activity would seem like the right thing to have done.

- Stop caring about misuse of AI and how it affects ratings. Optimize for elegance and enjoyment when creating problems.

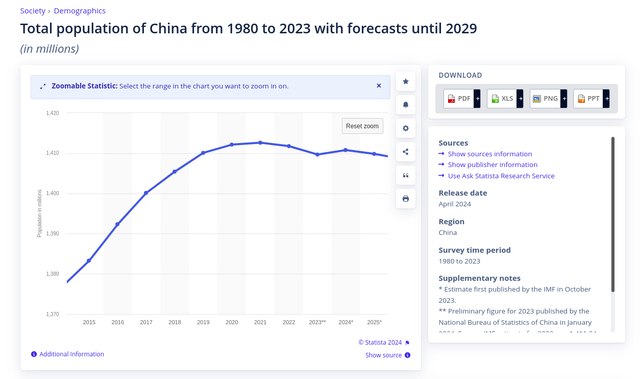

In other words, the destination is the same: we can either choose to be slowly dragged to it while throwing a hissy fit, or accept reality and walk to it ourselves, enjoying every part of the journey.

- Somehow, magically, AI hits a "wall" which it cannot meaningfully improve beyond. For us, this would mean that its expected performance rating would asymptote to some value. Keep in mind that this value would still be quite high, since it currently seems to be able to perform at a performance rating of at least 2000 in most contests, which places it at the 95th percentile (https://codeforces.me/blog/entry/126802) of human competitors. Once again, I don't want to speculate about how high this value would be in the future, but it seems safe to say that it would be at least 2300, implying that the vast majority of human competitors would benefit in some measure from using AI during contests.

Until the "wall" is hit, the intervening period would be more or less identical to the period where AI gets better in the first scenario. The meta would have to change rapidly, problem-setters would have to put effort into making sure that their problems aren't solvable by the SOTA, there would be a lot of volatility, people would constantly complain about AI cheaters and their effects on rating distributions, etc. This period would be as unpleasant as in the first scenario. There are two possible final destinations here:

- We stop caring about misuse of AI and how it affects ratings, and optimize for elegance and enjoyment when creating problems. This is the same endpoint as in the first scenario. We would realize that we should have stopped caring about AI cheating earlier in this timeline too.

- The CF meta also asymptotes to a certain place, and is significantly based around the limitations of the then-SOTA AI. It is unlikely that the kinds of problems AI would be bad at would be the ones that humans enjoy and find natural. It would also not be perfect with respect to cheating, and there would still be a lot of it, since AI would still be smarter than most humans at all kinds of problems (but worse at certain kinds of problems than a small percentage of humans). Maybe there would be a weird gap between the kinds of problems at offline events like olympiads and those in online contests, since the former would likely always optimize for human enjoyment (or at least not consider AI capability when creating problems). It could just be me, but I certainly wouldn't want this wonderful activity to end up in such a place and would much prefer having gone the other way earlier.

I shouldn't have started writing this at 6 AM, now the sun is out and I don't feel like sleeping.