We really hope you enjoyed the problems! We apologize again for the long queue and unrated round. Just for fun, here's how many of our problems were rejected:

Rejection Count

Tutorial is loading...

Solution: 86603804

Idea: MagentaCobra

Preparation: MagentaCobra, qlf9

Tutorial is loading...

Solution: 86603838Idea: qlf9

Preparation: qlf9

Tutorial is loading...

Solution: 86603857Idea: MagentaCobra

Preparation: MagentaCobra, qlf9

Tutorial is loading...

Solution: 86603878Idea: MagentaCobra

Preparation: MagentaCobra, Tlatoani

Tutorial is loading...

Solution: 86603896Idea: golions

Preparation: Tlatoani, golions

Tutorial is loading...

Solution 1: 86603917Solution 2: 86603930

Idea: golions

Auto comment: topic has been updated by MagentaCobra (previous revision, new revision, compare).

Lol Quite surprised to see the amount of problems rejected and the contest got unrated I think the coordinator wanted a quality round this time!

If you can't solve it.....break it...

Lol how much days it took you guys to create this problem set :) 72 problems rejected xD

Good problemset!! but the queue:)

By seeing the rejection count ! I am sad that such a thing happened today ! It was an awesome problem set ! Kudos to All Setters and Testers :)

I feel sorry for the Problem setters and testers. The problem set was really nice. Also, 72 problems getting rejected must be some sort of a record

For problem E, let $$$dp_{i,j}$$$ be the best quality for the first $$$i$$$ columns and there is still $$$j$$$ intervals not contain $$$1$$$. The transition needs to check the restriction of available intervals. What's wrong with this approach? I checked my code very long time during contest and got WA on test 4. 86580081

UPD:Got it.

I have also done the same approach and got WA on pretest 4 https://codeforces.me/contest/1372/submission/86588280

Really appreciate for the hard work of the problem setters... 72 problems are rejected :(

Many people participated to increase their rating after a good decrease in last two rounds. Ironically, this round got unrated.

Feel sorry for people who got their best rank in this contest (like me). Sob :(

a small correction

First note that any possible circular value consists of the ...”

Thats not true

Consider 5 elements and leave a3 element two times, then the circular value is a1 + a5

I dont think it matters though

yeap, i think this situcation will be contained in one kind of (n+1)/2 elements

Can we use dynamic programming to solve D?

For problem D, how do we arrive at the fact that the optimal solution has exactly (n+1)/2 elements?

I arrived at this hypothesis during the contest while working through small examples.

I wonder how to prove it?

Usually in contests you rely on your intuition, make your best guess and code it out.

Oh yes.But I think we should prove it strictly after the contest.

In fact,I have this problem too.Can anybody prove it?thanks.

If you want to include two adjacent elements $$$a_i$$$ and $$$a_{i+1}$$$ , the only way is to include them at the final step when you have $$$3$$$ elements. So, you have to chose some number of elements, such that exactly two are adjacent. For convenience, lets merge the two adjacent elements and reduce $$$n$$$ by 1. In this reduced set we should select some elements such that no two are adjacent and their sum is maximum. Since all numbers are positive, we have to select half the elements, either all the elements at odd positions or all elements at even positions. So in total, $$$(n-1)/2 + 1$$$ elements.

I think there is one irritating thing. It is the fact that the last element is not always the sum of $$$(n-1)/2+1$$$ elements. It is only true that there cannot be more. But of course there can be less.

So we would have to prove that the solutions with more or most possible numbers always include the best result.

let's say we have a1,a2,a3,a4,a5

then say we do 1 operation and get, a1,a2+a4,a5

and after one more , we will have ans = a1 + a5.

however there's a way to get a1 + a5 + a3 or some other with 3 elements. so it's never better to have X number of elements for any X < (n+1)/2

It is given that n is odd so n can be represented as 2x+1 and it is easy to notice that after performing 1 operations the size of the circular queue decreases by 2. Therefore we have to apply the operation x times. We can also say that we have to delete x elements from the queue and hence will be left with x+1 elements which is equal to (n+1)/2

This is not true. We can combine two elements, and then remove the combined element. Which removes two original elements in one turn. And as a result the last element will contain less elements.

Logically they are the same! Even if we are removing some element which was formed by combining previous states but if you expand them so that the resultant element is formed from the original elements, It will turn out to be same

You can remove the combined element but by doing so you would be decreasing the no of elements added to the ans and as the elements are non-negative that strategy won't help.

It's easy to see how does the answer look like by solving for n = 5. You can notice, that answer will have some pair of adjacent elements, and a few more(maybe 0), separated each from the others with odd number of elements, that will be deleted. Than it's trivial to prove it by induction for any n. If you want, I can draw some pictures to prove this solution

Then, for every pair of adj. element you take what is left one through one, so there goes (n + 1) / 2 elements in sum. It's easy to understand the proof by looking at pictures, unfortunately i can't attach them :(

At each step, you are removing two elements. In the final list number of elements is 1(initial n). So you would require (n-1)/2 steps. So (n-1)/2 elements have to be deleted. The rest of (n+1)/2 elements would sum up for the final answer.

There could be a step in which the merged elements are deleted

If possible, consider the Optimal Solution has m<(n+1)/2 elements. Construction of any solution still follows that — (m-1) elements are alternate elements of the cycle, and the mth element is adjacent to exactly one of the (m-1) elements. Then, by freedom of construction, I can always pick the element alternate to the last element on the opposite side of the mth element and add to the current set of elements. As all elements are positive, I have found a better set of elements.

How'd you prove it's optimal to start at a point and keep taking alternating elements? You can apply operations on random elements too and you may get the sum of less than $$$(n + 1) / 2$$$ elements in the end.

I'm not saying its optimal. I'm saying that its the only possible construction. Even if we take random elements and add them up and get a sum of m (< (n+1)/2) elements; then (m-1) of them will be alternate and the mth will be adjacnt to a boundary element

in the constraint, n is odd, so you can see that if we choose index i to be our start index, then we will end at index before i. example: 9 0 7 6 5 6 6 if we choose start i = 1, then the end will be n and sequence will be 9 7 5 6. so this will make answer consist of n + 1 / 2. the optimal answer here, start i = 4, end = 3 and sequence is 6 6 9 7 -> 28.

In the final answer, we will include fewer (n + 1) / 2 elements only if at some point we remove an element that was a union of two (or more) others. We will call such an element X. Then let's not create X (combine the elements), that is, we will have 3 elements instead of X. Then, at the stage of deleting X, we will delete elements 1 and 3 in our 3. Thus, after this step, the situation on the table will change for the better compared to what happened with the removal of X, because the element instead of X increased (you can track based on what we did). That is, we removed one deletion to improve the situation. So what we will do with each removal of two elements. As a result, they will not be. I had such thoughts at the contest, I'm not sure how true this is.

yea..its a sure thing...coz

In an optimal solution, consider all elements except the element destroyed in the last step. These form a subarray. Now color each element in an alternating way (black-white-black-white-...-white). In each step before last only two numbers of the same color can be added. Also before the last step, the number at the left must be black and the number of the right must be white (because those two cannot be destroyed), and the answer is the sum of these two numbers. Therefore the optimal solution must be to add k consecutive black numbers to the left and (n+1)/2-k consecutive white numbers to the right for some k.

You can take less than (n+1)/2 elements but that won't improve your answer

because you can take at most 1 adjacent pair

72 rejections!? In what year did you propose the contest?

I mean, the URL contest code was 1372, and there were three other rounds which had higher contest url numbers :)

Thanks for this great work! Why is this in problem C ? "It can be proved that under given constraints this number doesn't exceed 10^18"

Basically to clarify that the answers fit in a 64 bit or long.

Thanks for your response. But, as it is clear now the answer is 0, 1 , or 2.So, this statement is confusing and is not needed.

is not needed

As Java said, it was meant to clarify that the answer fits in a 64 bit integer.

this statement is confusing

The statement is clear and unambiguous. You could have thought that there are test cases whose answer is very big. This is your fault, not the author's. Do you think that the author gives you hints about possible size of an answer?

just to confuse us maybe.

To make you think that question is hard

I solved E in O(M^2 * N) based on the same exact idea but by precalculating how much points we get for filling a column i in interval [l, r]. Here's my submission if anyone's interested :) 86578968

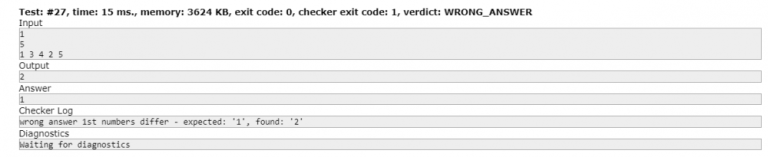

Can anyone please explain why ans is 1? This is the Problem C

[This is what I was getting in the 27th test case please help]( )

)

As 1 and 5 are already in position. You just have to pick [3,4,2] and do a single special exchange

Can you explain a little how your fix function is working.I understand that counter[i][l][r] = no. Of intervals in l,r having ith column, but I don't understand how checking previous column of l and succeeding column of r can help in computing it.

I copied the explanation from my messages, hopefully it's understandable.

Basically what I am computing is how many values I can put in column i when the nearest blocked (already have 1 in them) columns are l — 1 and r + 1. Let's look at one row.

Column i is blocked if and only if interval containing l — 1 contains i or interval containing r + 1 contains i. So, for each row you can find out interval [x, y] that for each column in that interval we can put a 1 there.

Right now answer on column i is equal to "how many intervals [x, y] contain i". This is a standard problem which we can solve by adding +1 on x, adding -1 on y + 1, and going from left to right. https://www.geeksforgeeks.org/count-the-number-of-intervals-in-which-a-given-value-lies/ more details here if you want them.

Obviously a lot of work went into the contest as unfortunate as the queue was I really enjoyed the problems and appreciate all the hard work :)

No matter rejections but the final problemsets were very good. I saw somewhere that this was first contest of the organisers. It was a wonderfull start. Ideal contest for me. I don't know about others but i liked the problemset a lot.

My theoretic nlogn solution to D was to use MinHeap and a LinkedList and simulate the following step: Find the min value in the circle, and replace it by the sum of its neighbors.

Peform the above step n//2 times. You just need a pointer between the LinkedList element and the MinHeap element. Pop the min value, go to the linked list node, remove its neighbors from the list and the heap, and insert into the heap and the list the new element (sum of neighbors).

But I really wasn't able to find a normal implementation(I can also use a balanced BST), and didn't really have the drive to start implementing it.

Did anyone else encounter the same thing?

Consider this case,

5

20 2 1 3 10

Correct answer : 31

Your answer : 30

I managed to implement this using two sets , one has elements {position,value} and the other {value,position}. You can use one set for finding the smallest element and the other for adjacent elements, remove all the three and insert the new value in both the sets. It doesn't work obviously, but this is how I implemented. Hope it helps!!

Thanks! I could have spent my life thinking my trash solution was accurate in theory ^_^

Yep I also tried this and implemented it using a set and three arrays but I failed on pretest 3

If you want to know how to implement it(even if it is a wrong approach), Here's how you can do it.

Hello, in my case, when I encounter problems that I can only think solutions like the one you describe (for example, that include simulation) and I implement it, most of the time it turns out to be wrong. This is especially the case when I can't find a counterexample when it fails. That's why if I need to solve similar problems, I try my best to dive into the problem and try to find any invariants or to see if there is an observation hidden in the problem.

For example, on this problem, you need to perform some actions and find the max sum. In the beginning, I tried playing with some examples, found a $$$O(n^3)$$$ DP solution (not sure if it's correct). Then, I observed that by performing the given operation, the central element is "destroyed" and therefore we can't have it in our desired sum. Then, I thought, what if we try and minimize the sum of those vanished elements (say by picking beforehand what value we will vanish)? After playing more with examples, I noticed some more stuff:

We can never vanish two adjacent values.

Say we have a sequence and we denote a vanished element as "-" and a remaining as ".", then the only pattern we can have is the following: -.-..-.

From the above we can note that no matter what, we will have always a sequence of a — followed by a ., but we can have only one place where we can have 2 adjacent dots. Then, I convinced myself with examples that this is the only possible case.

Finally, I considered fixing the 2 positions where we can have 2 remaining values and calculate the value we will get based on that (we can precalculate the sum of the other remaining values by using prefix/suffix sums). You can find my submission here: (if you have any questions let me know)

https://codeforces.me/contest/1372/submission/86606419

The takeaway from this is that we can derive a pattern. There is a class of problems where you can only derive the result by playing around with various examples and trying to find what lies behind on the background. To me, it's like being a detective and trying to do investigation work in order to uncover what really goes on on the background. Most of the time, when I can't find any solutions directly, I avoid to find a greedy one that looks correct. This is a trap and I am calling it the "greedy hell". You try to find ad hoc solutions that are not easy to prove and they waste time. Sometimes this method work (especially on easy problems), but most of the time it's a trap, so you need to watch out for it! :)

I think if you practice more problems where you try proactively to solve them by observing stuff etc., after some practice you will get better at them. This strategy worked for me! :)

I also thought of the same and implemented using sets, but as given by the counter case it failed.

Submission Link : Wrong Answer Using the same Algorithm

Even I was thinking on the exact same lines...

Can someone prove in D the maximum number of values in circular value is as given is (n+1)/2 optimally? I can show an array where the number of values in circular value is less than that(though its not optimal obviously). The following represent indices: 1 2 3 4 5 -> (1+3) 4 5 -> (4+5)

If the array is of size $$$n$$$ you will have to do exactly $$$(n - 1) / 2$$$ operations to get the circular value. This means that the minimum number of elements that you are not including in your answer is $$$(n - 1) / 2$$$. Thus at max, you are taking $$$n - (n - 1) / 2 = (n + 1) / 2$$$ values in the final answer.

The necessary and sufficient condition is that unchosen elements are not adjacent. Given this condition, just delete unchosen elements in any order.

Following this approach, you can get the answer as given.

Doesn't using one element to make a move multiple times complicate matters?

How to prove that a combined (1 + 3) bubble will never be removed?

.

If you are taking less than (n+1)/2 numbers in your final answer, you can always add more numbers to improve your answer.

By building some sample cases and making random moves on them, you can prove that no matter what the value of n is, in the final answer you can never have more than 2 consecutive indices and more than 1 pair of adjacent indices.

So when you select a pair of adjacent indices to be in your answer, it is always optimal to select all the remaining alternate indices in your final answer.

May you give a formal proof for the same?

Let's assume n = 7.

You have indices [1, 2, 3, 4, 5, 6, 7] and you want to keep [3, 4, 5] in your final answer.

Now, by making a move on i, we lose i in the final answer. So, we cannot make a move on 3, 4, or 5. Let us make the following moves:

Suppose we prove it for n = 5. The elements are a, b, c, d, e

All the possible sums we can get at the end are as follows:-

Sums using three elements:- bde, bce, acd, ace, abd

Sums using two elements:- ae, ab, bc, cd, de

Now if we look closely at those having two elements, we notice that they are all contained inside sums with three elements. Three element sums are obtained when we only delete individual elements which were originally present in the array and not their sums.

If this satisfies for n = 5, by common sense (mathematical induction) it satisfies for all odd n's, with the maximum sum always having (n + 1) / 2 elements.

Also talking of the alternate part; we now know one thing that at any point in the sum tree we must not delete the elements which are sums. This way the we know that the since the sum is always produced in the middle of two elements, the indices would be alternate. Also, since one pair of indices needs to be consecutive, that comes from the last step when there are three elements left.

If this satisfies for n = 5, by mathematical induction it satisfies for all n

The inductive step doesn't seem trivial to me. Can you, please, elaborate?

I mean to say is that if you make a tree for all possible sums then the sums having smaller number of elements will always be contained inside the sums having the most elements. Also the most number of elements in the final sum will always be (n + 1) / 2 because we obtain this sum by deleting only those elements which were originally present in the array at each step of the tree. Try doing it on paper for n = 7 and you will know.

If this satisfies for n = 5, by mathematical induction it satisfies for all n

Try doing it on paper for n = 7 and you will know

Here answer should be 2+4+5

My code for problem C gives right output on test 27 when i run it on my computer but judge says another ouput? Can someone please help? https://codeforces.me/contest/1372/submission/86581340. Btw it passed pretests.

scanf("%d", &n); min = n + 1;

you initialize before reading n

You set min to n+1 before reading n.

can anyone clearly explain the problem D? i am unable to understand editorial of this problem

If you have tried D, then you would have found that the circular value will consist of (n+1)/2 elements of the given circular array.

Just imagine how the solution would look like.

Soon you will find that

answer = max(...alternate elements.... + a[i] + a[i+1] + .....altername elements...)

For problem E, isn't $$$O(M^3)$$$ possible by just using prefix sums?

Submission

Yeah, but we didn't see a need to require it.

Wow, both of you are from TJ :o

Could any one prove the solution of E? I don't know how to prove that when we pick a column (say k) in [l, r], it is optimal to set 1 for every interval containing that column covered by [l, r], instead of leaving some intervals unset for now.

Think about the column in [l,r] with the maximum no of ones. It's always optimal to bring a one from any other column in this column if it's possible. Think about how much you will gain due to this change.

In problem D, why can't the optimal answer consist of the sum of less than $$$(n + 1) / 2$$$ elements?

You have to delete at least (n-1)/2 elements.

If you delete more that that, The sum of the elements would surely be lesser

You have to do $$$(n - 1) / 2$$$ operations but it's possible to get the sum of less than $$$(n + 1) / 2$$$ elements after doing all operations. Consider $$$n = 11$$$ and we apply the following operations: change $$$a_1$$$ to $$$a_0 + a_2$$$, $$$a_4$$$ to $$$a_3 + a_5$$$ and $$$a_7$$$ to $$$a_6 + a_8$$$.

The circle now looks like $$$a_0 + a_2$$$, $$$a_3 + a_5$$$, $$$a_6 + a_8$$$, $$$a_9$$$, $$$a_{10}$$$. Applying the operation on the last 3 values, we get $$$a_0 + a_2$$$, $$$a_3 + a_5$$$, $$$a_6 + a_8 + a_{10}$$$.

Finally, we apply the operation on the remaining three values and get the sum as $$$a_0 + a_2 + a_6 + a_8 + a_{10}$$$. This contains $$$5$$$ elements, not $$$(11 + 1) / 2 = 6$$$ elements.

I know that for this particular case you can get a better sum by also including $$$a_4$$$ by doing the operations as mentioned in the editorial, but how do you prove that having $$$(n + 1) / 2$$$ elements will always be optimal? Maybe there exists a higher sum by taking a different set of elements with one less element?

Just look at what you have got at the end....

What I meant to say is, You can always get a0 , a2, A4, a6, a8, a10.

In simple words, Whatever the 5 elements you choose to get at the end, you can always get one more element together with your 5 elements...

Now you decide, which one is optimal.

ohhh...!!! (rejections) so that's the reason, why div 2 happened after 7 days

My solution of 1372F - Omkar and Modes.

I also used function $$$solve(l,r)$$$ to get all numbers in segment $$$[l,r]$$$. First, query the interval $$$[l,r]$$$, and let the result be $$$(x,f)$$$.

Link to submission: 86574107.

Can someone prove that this solution is correct/incorrect?

It's correct,but I can only prove it in Chinese,can anyone translate it into English? /kel

注意到如果一段完全相同,他只需要 $$$1$$$ 次,即 $$$4k-3$$$ 次

考虑证明对于任意一个不完全相同的段,只需要 $$$4k-5$$$ 次。

边界条件:

若它由两个不完全相同的段拼成,设两端中不同数的出现个数分别为 $$$a,b$$$ ,则 $$$k=a+b$$$ 或 $$$k=a+b-1$$$ ,共需操作 $$$4a-5+4b-5+1=4(a+b)-9$$$ 次,两者的限制分别是 $$$4(a+b)-5$$$ 和 $$$4(a+b)-9$$$ ,均能满足。

由数学归纳法,得证。

The other translation seems to be Google Translate so I will try to translate it too. Please let me know if there is anything wrong/mistranslated.

First off, I would like to thank you and VladProg for sharing this very fascinating solution! (谢谢分享这个很有趣的做法!)

Observe that if the entire segment is the same, you only need 1 query, which is $$$4k-3$$$.

Consider proving that for any case with any different elements, you only need $$$4k-5$$$ queries.

Boundary Conditions:

He has two segments that contain equal elements put together, thus $$$k=2$$$, you need $$$3$$$ queries, which fulfills the constraint.

He has a segment that contains equal elements and a segment doesn't contain equal elements put together, let's say the segment on the left contains all equal elements.

If he has two segments that contain different elements, let the number of distinct elements on the left side be $$$a$$$ and the number of distinct elements on the right side be $$$b$$$, thus $$$k = a+b$$$ or $$$k = a+b-1$$$, together they take $$$4a-5+4b-5+1 = 4(a+b)-9$$$ queries, the limit for the two cases is $$$4(a+b)-5$$$ and $$$4(a+b)-9$$$, and both satisfy the constraint.

Using mathematical induction, we can prove the rest.

Note that if a paragraph is exactly the same, he only needs 1 time, that is $$$4k−3$$$ times

Consider proving that for any segment that is not identical, only $$$4k−5$$$ times are required.

Boundary conditions:

He is composed of two identical segments, then $$$k=2$$$, which needs $$$3$$$ times, and meets the conditions He is made up of a completely identical and an incompletely identical segment. Let us assume that the segment on the left is identical The left side and the right side are not exactly the same: suppose there are b different numbers on the right side, the limit is $$$4(b+1)−5$$$, a total of $$$4b−5+2$$$ is required, and the conditions are met The left side and the right side are exactly the same: then the number of occurrences of the interval mode exceeds half of the total length, the middle part can be directly assigned, and then converted into a situation where there is no exact same If it is composed of two segments that are not exactly the same, and the number of occurrences of different numbers at both ends is $$$a$$$, $$$b$$$, then $$$k=a+b$$$ or $$$k=a+b−1$$$, a total of $$$4a−5$$$ operations are required $$$4a-5+4b−5+1=4(a+b)−9$$$ times, the limits of the two are $$$4(a+b)−5$$$ and $$$4(a+b)−9$$$, both of which can be satisfied.

It is proved by mathematical induction.

Hey Chinese bro your government is doomed

Can someone please explain why for Problem D (Omkar and Circle) the circle number is made up of (n+1)/2 of the initial values?

Problem E. what is the meaning of "...it is optimal to always fill some column within l to r to the max."

How do we "fill a column"?

Put a 1 in every row of that column where the interval that contains that column is fully contained within [l,r].

Dear MagentaCobra, We appreciate your hardword, and Not-to-GiveUp attitude...

Rejection Count 72 is a very large number I think...

Can someone explain me this line from problem B? L C M ( k , n − k ) ≥ 2 ⋅ ( n − k ) ≥ 2 ⋅ n/2 = n

It is the conclusion of the case where k does not devide n. In that case the lcm() of the numbers is always bigger than n.

Thanks for the hard work.

For people who solved B, I would appreciate some suggestions on how I can improve my approach to such a mathematical problem.I loved the proof and I can only wonder about the paperwork done to get this problem correct.

see it just required to think u about that both a and b were to maximum as possible.Then u would reach upto the conclusion that a should be largest factor for LCM to be least. i hope this helps

All you needed to prove was that either $$$a$$$ or $$$b$$$ was a proper factor of $$$n$$$. After that you can just test all the possible factors in $$$O(\sqrt{n})$$$.

Why arent' we taking 1 into consideration?

1 is a proper divisor, so we take it into consideration. If n is prime then then answer includes 1 in fact.

Can anyone tell me what's wrong with this segemnt tree approach in question D https://codeforces.me/contest/1372/submission/86598893

in the problem of Okam and baseball.for the case where special exchange is 1 , can anyone help me out with its clarification ,that for i have to do??

the exchange will be one if there exist i and j such that there is no correct element between i and j, in this case, we can just get the answer in one step else we will require two steps. https://codeforces.me/contest/1372/submission/86594229

ok thanks!!!

Does someone has better proof for Problem C? Or can explain how author arrives at this idea:

Then, for all integers b such that their position i in a (i. e. the j such that aj=b) is in the appropriate half of p, assign pi=b; assign other b to arbitrary positions in the appropriate half of p. Finally, cyclically rotate each half of p – this ensures that p has no matchingYou can think of it as this way:

Lets define a bad subarray as a subarray which has no element correctly placed inside it.

1-> If all elements are correctly placed. The answer is 0

2-> If the input array contains only 1 bad subarray, the answer is 1. This is because all the elements which are needed to be correctly placed exist inside this subarray.

3-> If more than 1 bad subarray exists in the given input array. The answer is 2. This is because in 1st operation we can re-arrange all elements such that no element is at its correct position. Now in 2nd operation we can place all elements at their correct places

Edit: I'm wrong.

For the last case (when 2 operations are needed):

swap each pair of identity elements, until only one is left, and also eliminate 'extra' cycles, until you arrive at (something like) one of two following cases, which are easy to prove.

case I: two cycles on each side of the identity element.

case II: one big cycle with the identity element in the middle.

"You have been blessed as a child of Omkar." it took me some time to settle after reading this XD. The problem was clever still easy though!!

Problem D

Why can't we keep on taking a_i with the smallest value and replacing it with the sum of neighbors? The addition is associative, thus intuition was to "get rid of" the smallest elements.

Input: 7

3 1 2 5 6 4 8

Your algorithm gives 19

Actual answer is 20

Here once you replace 1 with 3+2, there are two 5 in your array.

For problem D, can someone please explain why the answer will be a "Subarray" of (n+1)/2 elements and not their "Subsequence"? I mean, why is it necessary that all the elements that have to be added will be contiguous?

Can someone explain me why I'm getting a WA(too many queries) verdict for F. My solution: 86602909

What I'm doing:

So, for any range(low, high), I first ask a query for (low, low+2) and if the frequency of mode is equal to the length of query(2 in this case) then I double the length of query and ask again for (low, low+4) and so on. If at any point the frequency of mode comes less than the length of the query then I update the array and my low to low+frequency and again call my recursive function for (low+frequency, high). Now looking at the constraints we can see that the worst case would be when the array has 200000 elements and 25000 are distinct i.e every element repeats 8 times. According to my solution for every number my code will ask 4 queries(for length 2, 4, 8 and 16) and thus the total queries would be 4*k. But still the verdict says that that I'm asking too many queries. Can someone explain what is wrong with this approach?

UPD: I realized that this approach is completely wrong and the worst case comes when k = 1 for large n.

Auto comment: topic has been updated by MagentaCobra (previous revision, new revision, compare).

Auto comment: topic has been updated by MagentaCobra (previous revision, new revision, compare).

Test cases for D do not cover the case where the 2 adjacent elements have to be the first and the last ones. This could potentially lead to some hacks like this one (hacked after contest was over) — Hacked Solution.

The simplest case is

n = 3 , array = {10,1,11}.What will happen to the 72 rejected problems? Will they be used in a future contest, or discarded forever? Do you still have the problem statements for the rejected problems?

The person who decided which question will be B and which one will be C did a very wrong job. C is easy to think and implement in comparison to B. I think everyone will agree.

I liked problem B though.

I think that "everyone will agree" is very far from the truth.

Did you found C relatively difficult compared to B ?

To me C took a lot more time than B to both solve and prove.

My approach for D is : we have to find minimum of the remaining elements and replace with sum of adjacent elements.But i got WA on test 5.Can anyone tell why this approach is wrong? My submission : https://codeforces.me/contest/1372/submission/86606748

Can someone give a proof for why E's dp solution works?

I'm confused about the transition: number of intervals that are fully within l and r and include k

I can see that if we don't restrict to intervals that are within l and r and include k, then we may double count, but I don't see how adding this restriction gives a correct solution.

I explain some intuition on why it is correct at the end of my screencast of the round, if you want a more visual proof. Let me know if something I said there doesn't make sense, and I can further clarify.

Thank you. Your explanation helped me understand.

In the problem E, would assignment of 1's that maximizes the sum of qi^2 also maximize the sum of other functions such as 2^qi or qi^x for any x>1?

Edit: I miss read the question. This is the answer for "why maximize 1 for columns is correct for $$$q_i^2$$$".

Well yes. I did not fully prove it during the concept but I do prove up to 4 $$$q_i$$$s in order to see the pattern why it works. But 4 is complicated, let's do 3 instead, you'll see. What we wanted to prove is that when we share some of the $$$1$$$ of the largest column to the other, we get smaller.

Let's $$$a$$$ be the smallest $$$q_i$$$ ($$$a > 0$$$), $$$a + x$$$ be the second smallest. And finally, let's $$$a + y + u + v$$$ be the largest, where $$$u$$$ is the amount we wanted to share to the smallest column, and $$$v$$$ is the amount to the second column. I wanted to choose $$$0 < x < y$$$. $$$u$$$ and $$$v$$$ can just be positive.

So we wanted to prove that $$$a^2 + (a+x)^2 + (a+y+u+v)^2 > (a+u)^2 + (a+x+v)^2 + (a + y)^2$$$

Let's take the difference of 2 sides then:

Since $$$y > x$$$, obviously the difference is positive.

From there we can see that a lot of things cancel each other (all of the squares $$$a^2, x^2, ...$$$ are canceled, all of the numbers containing $$$2a$$$ are also canceled), and finally, we are left with some positive amount and a different of $$$C(y-x)$$$ with some $$$C$$$. When I did my draft with 4 $$$q_i$$$-s (with something like $$$a$$$, $$$a+x$$$, $$$a+y$$$, $$$a+z + ...$$$), I also got $$$C_1(z - x)$$$, $$$C_2(z - y)$$$. So with a higher number of columns, we still get the same patterns with a positive amount. With this intuition, we can safely claim what is said in the editorial.

use this case 5 2 1 3 6 6 you will know why

I believe you missread what I wrote. I was asking about other functions instead of qi^2 (such as qi^3)

Oof. Yeah sorry for that. My bad.

I think the case $$$2^{q_i}$$$ is correct. Intuitively, if you remove just only one from the largest, and add it to the smaller ones, you lose from the largest more than gaining from the smaller.

Alternative solution to F:

Throughout this solution, $$$n / 2$$$ is the floor of $$$n / 2$$$, so $$$5 / 2$$$ would be equal to $$$2$$$. Note therefore that $$$1 \ldots n / 2$$$ is the shorter half of $$$1 \ldots n / 2$$$.

The idea is to try to recursively solve the problem, i.e. solve it for $$$1 \ldots n/2$$$ and $$$n/2 + 1 \ldots n$$$. Assuming in the first array we have $$$l$$$ different numbers and in the second array $$$r$$$ different numbers, we make at most $$$4(l+r)$$$ queries. However, $$$k$$$ could be equal to $$$l + r - 1$$$ (if the two arrays end/begin with the same number), and that would be $$$4$$$ too many queries. However (and this idea is sometimes seen when formally calculating runtimes of algorithms), if we assume we can solve the problem in $$$4k - 4$$$ queries, then we would be done, as if even if $$$k = l + r - 1$$$, we would make at most $$$4l + 4r - 4 - 4 = 4(l + r - 1) - 4 = 4k - 4$$$ queries. Now, we obviously can't solve the problem in $$$4k - 4$$$ queries, as that would be $$$0$$$ is $$$k$$$ were equal to $$$1$$$. To actually solve the problem, we just need to take the idea only a bit further.

First query the whole array $$$1 \ldots n$$$. Let the mode be $$$x$$$, and assume it appears $$$n_x$$$ times. If $$$n_x = n$$$, we are done. If not, query the array $$$1 \ldots n / 2$$$ (mode $$$y$$$, appears $$$n_y$$$ times). If $$$n_y = n / 2$$$ (i.e. the $$$1 .. n / 2$$$ is filled with $$$y$$$), we have two cases: either $$$x = y$$$ or not. If $$$x = y$$$, then $$$x$$$ forms a prefix of our array and we now where this prefix ends (at $$$n_x$$$). So we now recursively solve the array $$$n_x + 1 \ldots n$$$ (which contains $$$k - 1$$$ elements) and we are done. We have done $$$f(k - 1) + 2$$$ queries, if we denote by $$$f(k)$$$ the number of queries needed to solve the problem if the array consists of $$$k$$$ distinct elements. If $$$x \neq y$$$, we don't have too many possibilities, because we have $$$n_x \geq n_y \geq n / 2$$$. We either have $$$n_x + n_y = n$$$ and we are done (ergo we did $$$3$$$ queries and $$$k$$$ was $$$2$$$), or $$$n_x + n_y = n - 1$$$ and the last element is either at position $$$n / 2 + 1$$$ or at position $$$n$$$. We query the two positions and return the right array (ergo we did $$$4$$$ queries and we had $$$k = 3$$$).

Let's now study the case when $$$n_y \neq n / 2$$$. We again have the two cases, either $$$x = y$$$ or not. If $$$x = y$$$, we either have $$$n_x = n_y$$$ or not. If $$$n_x \neq n_y$$$, we know were the $$$x$$$ lie in our array (as they necessarily have to appear in both halves): they start at $$$n / 2 - n_y + 1$$$ and end at $$$n / 2 - n_y + n_x$$$. Therefore, we recursively solve the subarrays $$$1 \ldots n / 2 - n_y$$$ and $$$n / 2 - n_y + n_x + 1 \ldots n$$$. If the first subarray has $$$l$$$ distinct elements and the second one $$$r$$$, we have done $$$f(l) + f(r) + 2$$$ queries, and $$$l + r = k - 1$$$ (as $$$x$$$ doesn't appear in any of the subarrays). The case when $$$n_x = n_y$$$ is done similarly as the $$$x \neq y$$$ case, which is done in the next paragraph.

First, if $$$n_x + n_y = n$$$ we are done (and we have done $$$2$$$ queries for $$$k = 2$$$). Otherwise, we query $$$n / 2 + 1 \ldots n$$$, and let the mode be $$$z$$$, appearing $$$n_z$$$ times. If it happens that $$$n_z = n - n / 2$$$ (i.e. the $$$n / 2 + 1 \ldots n$$$ array is filled with $$$z$$$), we necessarily have that $$$x = z$$$ (because $$$n / 2 + 1 \ldots n$$$ is the longer half). Therefore, we know that $$$x$$$ forms the suffix of our array, and we know the suffixes length, $$$n_x$$$. Therefore, we just recursively solve for the array $$$1 \ldots n - n_x$$$. As this array has $$$k - 1$$$ distinct elements, we have made $$$f(k - 1) + 3$$$ queries.

We are now left with the case that $$$n_y \neq n / 2$$$ and $$$n_z \neq n - n / 2$$$. Therefore, both the left and the right half contain at least two elements. We recursively solve for both halves, but note that we already made one of the queries: the query on the whole half. Therefore, we can cache this result in the recursive call, and assuming the left half has $$$l$$$ distinct elements and the right half $$$r$$$ distinct elements, we solve the problem in $$$f(l) - 1 + f(r) - 1 + 3 = f(l) + f(r) + 1$$$ queries.

We now have to find a function $$$f$$$ that works and we are done. Let $$$f(0) = 0$$$ by definition. First of all, $$$f(1) = 1$$$. Then, we need that $$$f(k) \geq f(k - 1) + 3$$$, $$$f(k) \geq f(l) + f(r) + 1$$$ for all $$$l, r \geq 2$$$ and $$$l + r = k + 1$$$ and $$$f(k) \geq f(l) + f(r) + 2$$$ for all $$$l, r \geq 0$$$ and $$$l + r = k - 1$$$. Furthermore, we need $$$f(2) \geq 3$$$ and $$$f(3) \geq 4$$$. One last important piece of information is that we can't have the case $$$f(k) \geq f(k - 1) + 3$$$ if $$$k = 2$$$: note that oin that case we had that $$$x = z$$$, therefore if $$$x = y$$$ we would have $$$n_y \neq n_x$$$ which was handled in $$$f(l) + f(r) + 2$$$. But in this case $$$l = 1$$$ and $$$r = 0$$$, so we made only $$$3$$$ queries (we don't need to query the right part as it is empty). Now, it is easy to see that $$$f(k)$$$ defined by $$$f(0) = 0$$$, $$$f(1) = 1$$$ and $$$f(k) = 4k - 5$$$ for $$$k \geq 2$$$ satisfies these inequalities and we are done.

PS: If (but of course it's not possible) we had $$$f(2) = 2$$$, this would actually result in $$$f(k) = 3k - 4$$$ for $$$k \geq 2$$$. Maybe we could somehow use the query on the whole array when we do $$$2$$$ recursive calls on the two halves (which in this solution is not used, apart from the other cases), we could get some other functions, and eventually get $$$f(k) = 3k + \mathcal{O}(1)$$$. However, I don't believe we could get something like $$$f(k) = ck + \mathcal{O}(1)$$$ with some real $$$c < 3$$$ mainly because of the case that results in $$$f(k) \geq f(k - 1) + 3$$$.

In problem D, any possible circular value consists of the sum of some (n+1)/2 elements, but why? Is it because when all elements are arranged in a row, those with even indices will always be deleted and it's better to make all those with odd indices remained?

What a problemset \o/ But the editorial is even better. I actually write programs with an intuition that this should work. Providing proof of the questions really enhances the clarity of why the solution actually works. Hope to see more such problems and without the unhandled queue :-D

Fortune favors the brave!!

After the end of the contest, I finally realize the problem E has the same concept with Facebook Hackercup 2018 Round 3 pD.

A silly doubt just to confirm

Is the number of operations allowed in 1second are 10^8 or 10^6 ?? Asking this question because in the editorial of B question it was written that

"We're given that n≤10^9, so n=√10^9<10^5. t≤10, meaning that we will check less than 10^6 numbers, which runs well under the time limit."

around 10^8 operations in 1 second

Thank You very much

In the editorial for B, what does k | n mean?

It’s math notation for “k divides n”

Solved Problem-C in an easier way (I hope you people also find it easier)...

While traversing the array:

Case 1: At first we need to find such an element in the array that it is not in its actual position if the array was sorted.

Case 2: After fulfilling Case 1, we need to find such an element in the array that it is in its actual position if the array was sorted.

Case 3: After fulfilling Case 2, alike case 1, again we need to find such an element that it is not in its actual position if the array was sorted.

Now:

If not a single case is fulfilled then it means the array is already sorted, so the answer is 0

If only Case 1 is fulfilled then it means not a single element is sorted, so the answer is 1

If case 1 & case 2, are fulfilled that means some elements are not sorted, but rest of the elements are sorted. So the answer here is 1

If all the cases are fulfilled means some of the elements are not sorted, some of other elements are sorted, and rest of the elements are not sorted. So the answer is 2

My Submission : 86624638

In problem C , Test 5 a = [ 3 , 1 , 2 , 4 ] Jury's answer = 1 WHY ????

You Can sort the array using the subarray [3, 1, 2], number of special exchange here is 1.

Problem link : #655 — Problem D

Create an array whose values consists of [a1,a3,...,an,a2,a4,...,an−1]. Append this new array to itself to account for the circular structure.I knew the idea in the contest but it was very frustrating to be not able to think of any implementation for it. How can I learn to think of such implementations like how do this click you? Please share approaches of thinking if you can. That would be very helpful.

Can question D be done using segment trees?

You will need 2 segments trees. One tree for odd indexes and another for even indexes.

count of rejections speaks all about the quality of this round. Thanks for such a wonderful contest but the queue..

If Anyone need detail explanation for C Here

I will attempt a formal proof for problem $$$D$$$, since I struggled a bit with it and it may help someone else as well.

Problem formulation: Notice that the final sum is created from elements in a subset of the circular array. Let us create a binary circular array $$$b$$$ having same size with $$$b_i = 1$$$ if $$$a_i$$$ is included in the final sum and $$$0$$$ if it is excluded. So, we need to find $$$b$$$ such that :-

We will henceforth restrict our discussion to binary circular arrays that are reducible. Here comes the interesting part. Now, the only allowed operations on $$$b$$$ we can perform are of the form :-

We cannot perform a reduction on adjacent elements of the form 001.

Let us attempt to merge the first and third elements, so that now this element functions as a single unit. Since the first element was 0, this unit must be excluded from the final sum. However, since the third element was 1, this unit must be included in the final sum. This leads to a contradiction.

Similarly, we cannot perform a reduction on adjacent elements of the form x1x.

Whenever we merge the two elements on either side of the 1, the middle element must be deleted. However, this element had to be included in the final sum. This is a contradiction.

Now, let us look at some properties of $$$b$$$.

$$$Lemma$$$ $$$1:$$$ There exists an $$$i$$$ such that $$$b_i=1$$$.

$$$Proof:$$$ Clearly, the last element remaining will be included in the final sum. So there will be at least one element which is included in the final sum.

$$$Lemma$$$ $$$2:$$$ A contiguous sequence of all 0s bounded by 1s on either side cannot have an even number of 0s.

$$$Explanation:$$$ Sequences of the form 1001, 100001, 10000001,.... are not reducible.

$$$Proof:$$$ Using the 2nd operation, we can reduce any such contiguous sequence to 1001. Notice that we cannot remove the bounding 1s on either side. Even if $$$b$$$ is something like 101001 we can only reduce it to 1001 (101 -> 1 using the first operation). Clearly, there are no valid operations, since neither 100 nor 001 can be used to reduce this sequence.

$$$Lemma$$$ $$$3:$$$ A contiguous sequence of all 1s can have size at most 2.

$$$Proof:$$$ Let there exist a contiguous sequence such as 111 (having size at least 3). Again, we cannot remove the bounding 1s on either side, and there are no valid operations to reduce this sequence.

$$$Lemma$$$ $$$4:$$$ There can be at most one contiguous sequence of 1s having size 2.

$$$Proof:$$$ Let us consider two such contiguous sequences 11 and 11 which are next to each other, i.e. no other "11" sequence occurs between them, such as 11011, 1100011, 1101011, 110100011, .. etc. Again, using the two operations, we can reduce any such sequence to 11011, which can then be reduced to 111 (101 -> 1) which is not reducible.

Using the above 4 lemmas we have a very clear picture of what $$$b$$$ can look like. An example is 11010001010000010. Now notice we can always get a better sum if instead of allowing any odd number of contiguous 0s, we allow only a single 0. So for our example, 11010 1 01010 1 0 1 010 will yield an optimal sum given the position of the "11". Hence, the optimal sum contains exactly $$$(n+1)/2$$$ elements.

Hey! This is my first attempt at a written formal proof. Do let me know if I have made any mistakes / if any improvements I can make in future. Thanks!

Thank you, this is amazing.

72 problems got rejected. In one of the previous contest, problems were rejected because they were too common or they have a standard approach.

So, can't we have a different section of rejected problems or articles after the contest is over so as to know that these problems are some of the standard ones nowadays.

why is the dp on problem E right? Can anyone help me understand that?

I understood... sorry

MagentaCobra I have one doubt, according to you 12 A type problems were rejected, then it doesn't make sense that after that finalized A problem would be that trivial.

Let me know what you think about it as a 3rd person perspective :)

golions, I think the editorial code provided is $$$O(n m^3)$$$, not $$$O(n^2m^2)$$$, right? I'm just counting the for loops in 86603896, plus I think the dp states mentioned in the editorial runtime analysis is $$$m^2$$$, not $$$nm$$$. Am I missing something?

Btw, my solution is $$$O(m^4)$$$ other than reading input. So this would run in time even if we had much larger constraints on $$$n$$$, as long as we also have something like "the total number of intervals not exceed $$$10^5$$$".

My submission if anyone is interested: 86769500

Yes, you are right. The editorial will be updated shortly. Thank you for pointing that out!

It's possible to solve F using only $$$2k$$$ queries by ensuring that the second time we get a number as the mode, we can locate all occurences of it. See code for details.

Code: 87277077

Could someone please tell why this is failing for D?

https://codeforces.me/contest/1372/submission/88648252

DIV2 C

My proof that the number of exchanges can be atmost 2, Let us first "de-arrange" the array, i.e. there is no i such that a[i] equals i. For this we have to find a permuation such that there exists no i in that permuation with p[i] = org[i] or identity[i] = c[i] where array org is original array and identity is array [1,2,3,4,5,6..]. Total number of available permutations is n! and we have to remove n*(n-1) permutations for first and second array each.

For n > 4 n! — 2*n*(n-1) is positive , so there is always atleast one "de-arranged permutation"

For n = 3 we can manually check it is possible

And for n = 4,there are special cases like a[4] = 4, so it reduces to n = 3 case.

After de-arranging , we can arrange it in just one speical exchange