Hello, Codeforces!

If you're developing problems in Polygon, you might like this image to attract attention.

For the new members of the community, I would like to remind you that Polygon (https://polygon.codeforces.com/) is a system developed and maintained by Codeforces for preparing programming problems. It is there that authors and coordinators develop all the problems for the rounds. Moreover, I believe a significant (large?) portion of the problems for other competitions is also developed there: various stages of ICPC, national competitions of different levels, educational problems for various courses, etc. In 2023, more than 50000 problems were prepared in Polygon (only those for which a package was compiled are counted)!

We often implement such improvements in Polygon that make the process of preparing a problem more reliable. These often include various automatic warnings and tips for problem developers and/or coordinators.

Today I want to talk about two recent such innovations in Polygon. Both of these innovations exploit validators written in Testlib. They will work only if you write the validator correctly:

- Use variables exactly as they are named in the problem statement. For example, if $$$t$$$ is the number of test cases and $$$a$$$ is a given array, then

int t = inf.readInt(1, 10'000, "t");andvector<int> a = inf.readInts(n, -100, 100, "a");are correct, butint t = inf.readInt(1, 10'000, "testCases");andvector<int> a = inf.readInts(n, -100, 100, "ai");are not. - Use the built-in features of Testlib: for reading arrays, use readInts/readLongs etc. Whenever possible, check constraints through the arguments of read functions, not with custom code afterward.

In the Polygon/Codeforces ecosystem, a properly written validator not only ensures the correctness of the test but also helps to analyze and process the test. Here, read https://codeforces.me/blog/entry/105779 about how we highlight input data sets in the test when displayed in the problem statement.

Warnings about Incomplete Tests

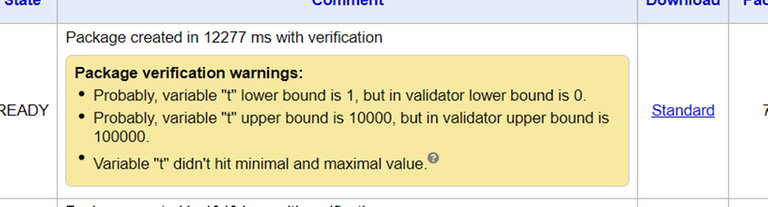

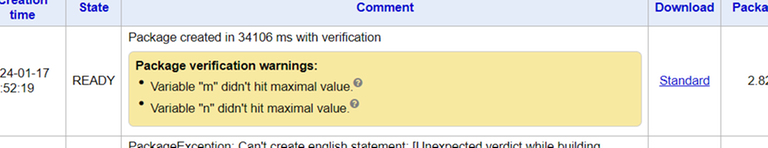

When you build a package, you may see a warning like this from the system. Please do not ignore it!

We extract this information from the validator. Most variables from the test in the validator are read either as int t = inf.readInt(1, 10'000, "t"); (a single number) or as vector<int> a = inf.readInts(n, -100, 100, "a"); (an array of n numbers). The validator can report externally that on some of the tests a lower/upper limit of the variable's constraints was (or was not) reached. And if a limit was not reached in a test set, a warning will appear.

Sometimes (although quite rarely) the limit is indeed not reached or there is no reason to require its achievement. For example, in a problem with test cases, the case $$$t=1$$$ may not have special meaning (but often it does, since maximum tests are often possible only in this case). Then you can use a notation like int t = inf.readInt(1, 10'000, "~t");. The tilde to the left of the variable simply indicates that there should be no warning that the lower limit was not reached in the tests. Similarly, the tilde can be placed to the right (upper limit), or even on both sides.

Once again, I urge the use of methods to suppress warnings only in rare cases when they are indeed absolutely irrelevant. In any other case, just add a test of the required type, and this will make the problem better.

For developers of Codeforces round problems – it would be good to check that there are no such warnings for pretests. You can simply run a solution on a pretests testset and at the bottom of the invocation report, there will be warnings (if they are present).

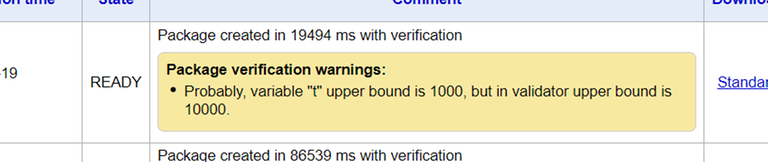

Warnings about Mismatches between Constraints in Problem Statements and Validators

I thought about this feature for a long time but got down to implementation after a recent incident https://codeforces.me/blog/entry/124696?#comment-1107429 Fortunately, it affected only a very small number of participants. But such a mistake can significantly affect the course of the contest.

Now, if you set constants while checking limits in the validator (like here int t = inf.readInt(1, 10'000, "t");), these bound constants can be returned to the system by the validator. Besides, I wrote code that, in simple cases, extracts similar information from LaTeX markup. In case of a mismatch of limits, you will see a message like this.

Of course, this automation works only for simple constant constraints. However, they are most common. There will probably be rare cases of false positives. Please report them to me.

Mike is working really hard!

Does the mismatch-detecting system validate the limit of the sum of $$$n$$$ over all test cases?

When unrated participation?

STFU to all those spoiled brats who downvoted

Sorry since it is not relevant question, but I want to ask how to "separate" test case in the sample tests in polygon. I mean, when I hover the mouse, it will display the number for each case.

setTestCase(i)

I have used that in the checker but it doesn't work :(

this should be in validator

thanks, I got it now

we are also making an internal contest for our juniors of our institute. Polygon already was really great and these additions made our process extremely simple. kuddos!

Great invention, but probably I won't use that since preparing a problem in IOI contest type dosen't need a validator and I'm tired of writing validators.

What a great invention!

Still waiting for Good Bye 2023 to get unrated

It's nearly impossible now since lots of contests are held after GB2023. If we want to roll back, we should calculate so many things.

still waiting cause u lost rating? agree with xcx0902 there was already contests after goodbye 2023; if Mike wanted it to be unrated, he would already made it unrated

I know, but I wanted it to become unrated because it deserved to become unrated, due to various reasons, that you can find easily, and I don't want to repeat the reasons because a lot of other people have explained it, and I don't wanna repeat it.

Can it be improved even further and developed in a way, that we have only one place in polygon to change constraints and this change will be automatically apply to statement, validator, command line parameters of generators in a test generating script and may be even to source codes of solutions?

For now, it sounds too general, with all the possible nuances in the problem statements and solutions. Certainly something like this can be done, but it would inevitably be applicable only to part of the problems which maintain a certain form of statements and solutions.

However, with AI advancements, who knows? Quite probably, in 5 years, AI would look at a validator change, and have a good guess (often correct) of what to change in the other parts of the problem.

I thought about something like new tab in polygon, where you can define constants like

MAXN. And then you can use it everywhere (statement, C++ code, test generating script) by expression like!MAXN!and it will be simply replaced to the corresponding value. Seems way simpler than extracting this constant value from 2 different sources (validator code and statement) and check it for equality.Looks viable at first glance. We have FreeMarker templates already, for statements (latex) and test generation (essentially a simple shell script).

However, much caution would be required. Preprocessing a source, especially C++ source, with virtually any syntax would almost certainly clash with some obscure valid C++ syntax. Even more so when you consider there are other programming languages in use.

Adding a preprocessing step for source codes is tricky therefore, and potentially dangerous. At least with the statement, you see immediately if it went wrong. With a solution or generator, not so obvious.

Still waiting for Good Bye 2023 to get unrated

you're literally unrated

MikeMirzayanov Hello, Could you please share the system design of codeforces if possible (whole thing, live rating updates, program compilation and checker, final rating changes, fraud detection etc

I am sure there will be a lot to learn from this for everyone

Dislike if you are a freak

Is there a problem?

How does the system think that the lower bound of $$$m$$$ is $$$2$$$?