1919A - Wallet Exchange

Author: maomao90

When does the game end?

Depending on whether the player chooses to exchange wallets with their opponent on step $$$1$$$, $$$1$$$ coins will be removed from either the opponent's wallet or the player's wallet. This means that if either of the players still has remaining coins, the game will not end as at least one of the choices will still be valid.

The only way that the game ends is when both players have $$$0$$$ coins. Since each operation decreases the total amount of coins by exactly $$$1$$$, the only way for Alice to win the game is if $$$a + b$$$ is odd.

#include <bits/stdc++.h>

using namespace std;

int main() {

int t; cin >> t;

while (t--) {

int a, b; cin >> a >> b;

if ((a + b) % 2 == 0) {

cout << "Bob\n";

} else {

cout << "Alice\n";

}

}

}

1919B - Plus-Minus Split

Author: maomao90

Try to find a lower bound.

The answer is $$$|a_1 + a_2 + \ldots + a_n|$$$. Intuitively, whenever we have a subarray with a sum equal to $$$0$$$, it will be helpful for us as its penalty will become $$$0$$$. Hence, we can split $$$a$$$ into subarrays with a sum equal to $$$0$$$ and group up the remaining elements into individual subarrays of size $$$1$$$. A formal proof is given below.

Let us define an alternative penalty function $$$p2(l, r) = |a_l + a_{l+1} + \ldots + a_r|$$$. We can see that $$$p2(l, r) \le p(l, r)$$$ for all $$$1\le l\le r\le n$$$. Since the alternative penalty function does not have the $$$(r - l + 1)$$$ term, there is no reason for us to partition $$$a$$$ into two or more subarrays as $$$|x| + |y| \ge |x + y|$$$ for all integers $$$x$$$ and $$$y$$$, so the answer for the alternative penalty function is $$$|a_1 + a_2 + \ldots + a_n|$$$.

Since $$$p2(l, r)\le p(l, r)$$$, this means that the answer to our original problem cannot be smaller than $$$|a_1 + a_2 + \ldots + a_n|$$$. In fact, this lower bound is always achievable. Let us prove this by construction.

Note that if we flip every "$$$\mathtt{+}$$$" to "$$$\mathtt{-}$$$" and every "$$$\mathtt{-}$$$" to "$$$\mathtt{+}$$$", our answer will remain the same since our penalty function involves absolute values. Hence, we can assume that the sum of elements of $$$a$$$ is non-negative.

If the sum of elements of $$$a$$$ is $$$0$$$, we can split $$$a$$$ into a single array equal to itself $$$b_1 = a$$$ and obtain a penalty of $$$0$$$. Otherwise, we find the largest index $$$i$$$ where $$$a_1 + a_2 + \ldots + a_i = 0$$$. Then we let the first subarray be $$$b_1 = [a_1, a_2, \ldots, a_i]$$$ and the second subarray be $$$b_2 = [a_{i + 1}]$$$, so we have $$$p(b_1) = 0$$$ and $$$p(b_2) = 1$$$. Since $$$i$$$ is the largest index, $$$a_{i + 1}$$$ has to be equal to $$$1$$$ as if $$$a_{i + 1}$$$ is $$$-1$$$ instead, there has to be a larger index where the prefix sum becomes $$$0$$$ again for the prefix sum to go from negative to the final positive total sum. This means that for the remaining elements of the array $$$a_{i+2\ldots n}$$$, the sum of its elements decreases by $$$1$$$, so we can continue to use the same procedure to split the remaining elements which decrease the sum by $$$1$$$ and increase the penalty by $$$1$$$ each time until the sum of elements becomes $$$0$$$. Hence, the total penalty will be equal to the sum of elements of $$$a$$$.

#include <bits/stdc++.h>

using namespace std;

int t;

int n;

string s;

int main() {

cin >> t;

while (t--) {

cin >> n;

cin >> s;

int sm = 0;

for (int i = 0; i < n; i++) {

sm += s[i] == '+' ? 1 : -1;

}

cout << abs(sm) << '\n';

}

}

1919C - Grouping Increases

Author: maomao90

Consider a greedy approach.

Consider the following approach. We start with empty arrays $$$b$$$ and $$$c$$$, then insert elements of the array $$$a$$$ one by one to the back of $$$b$$$ or $$$c$$$. Our penalty function only depends on adjacent elements, so at any point in time, we only care about the value of the last element of arrays $$$b$$$ and $$$c$$$. Suppose we already inserted $$$a_1, a_2, \ldots, a_{i - 1}$$$ into arrays $$$b$$$ and $$$c$$$ and we now want to insert $$$a_i$$$. Let $$$x$$$ and $$$y$$$ be the last element of arrays $$$b$$$ and $$$c$$$ respectively (if they are empty, use $$$\infty$$$). Note that swapping arrays $$$b$$$ and $$$c$$$ does not matter, so without loss of generality, assume that $$$x\le y$$$. We will use the following greedy approach.

- If $$$a_i\le x$$$, insert $$$a_i$$$ to the back of the array with a smaller last element.

- If $$$y < a_i$$$, insert $$$a_i$$$ to the back of the array with a smaller last element.

- If $$$x < a_i\le y$$$, insert $$$a_i$$$ to the back of the array with a bigger last element.

The proof of why the greedy approach is optimal is given below:

- $$$a_i\le x$$$. In this case, $$$a_i$$$ is not greater than the last element of both arrays, so inserting $$$a_i$$$ to the back of either array will not add additional penalties. However, it is better to insert $$$a_i$$$ into the array with a smaller last element so that in the future, we can insert a wider range of values into the new array without additional penalty.

- $$$y < a_i$$$. In this case, $$$a_i$$$ is greater than the last element of both arrays, so inserting $$$a_i$$$ to the back of either array will contribute to $$$1$$$ additional penalty. However, it is better to insert $$$a_i$$$ into the array with a smaller last element so that in the future, we can insert a wider range of values into the new array without additional penalty.

- $$$x < a_i\le y$$$. In this case, if we insert $$$a_i$$$ to the back of the array with the larger last element, there will not be any additional penalty. However, if we insert $$$a_i$$$ to the back of the array with the smaller last element, there will be an additional penalty of $$$1$$$. The former option is always better than the latter. This is because if we consider making the same choices for the remaining elements $$$a_{i+1}$$$ to $$$a_n$$$ in both scenarios, there will be at most one time where the former scenario will add one penalty more than the latter scenario as the former scenario has a smaller last element after inserting $$$a_i$$$. After that happens, the back of the arrays in both scenarios will become the same and hence, the former case will never be less optimal.

Following the greedy approach for all 3 cases will result in a correct solution that runs in $$$O(n)$$$ time.

Consider a dynamic programming approach.

Let $$$dp_{i, v}$$$ represent the minimum penalty when we are considering splitting $$$a_{1\ldots i}$$$ into two subarrays where the last element of one subarray is $$$a_i$$$ while the last element of the second subarray is $$$v$$$.

Speed up the transition by storing the state in a segment tree.

Let us consider a dynamic programming solution. Let $$$dp_{i, v}$$$ represent the minimum penalty when we are considering splitting $$$a_{1\ldots i}$$$ into two subarrays where the last element of one subarray is $$$a_i$$$ while the last element of the second subarray is $$$v$$$. Then, our transition will be $$$dp_{i, v} = dp_{i - 1, v} + [a_{i - 1} < a_i]$$$ for all $$$1\le v\le n, v\neq a_{i - 1}$$$ and $$$dp_{i, a_{i - 1}} = \min(dp_{i - 1, a_{i - 1}} + [a_{i - 1} < a_i], \min_{1\le x\le n}(dp_{i - 1, x} + [x < a_i]))$$$.

To speed this up, we use a segment tree to store the value of $$$dp_{i - 1, p}$$$ at position $$$p$$$. To transition to $$$dp_i$$$, notice that the first transition is just a range increment on the entire range $$$[1, n]$$$ of the segment tree if $$$a_{i - 1} < a_i$$$. For the second transition, we can do two range minimum queries on ranges $$$[1, a_i - 1]$$$ and $$$[a_i, n]$$$. The final time complexity is $$$O(n\log n)$$$.

#include <bits/stdc++.h>

using namespace std;

const int INF = 1000000005;

const int MAXN = 200005;

int t;

int n;

int a[MAXN];

int main() {

ios::sync_with_stdio(0), cin.tie(0);

cin >> t;

while (t--) {

cin >> n;

for (int i = 1; i <= n; i++) {

cin >> a[i];

}

int t1 = INF, t2 = INF;

int ans = 0;

for (int i = 1; i <= n; i++) {

if (t1 > t2) {

swap(t1, t2);

}

if (a[i] <= t1) {

t1 = a[i];

} else if (a[i] <= t2) {

t2 = a[i];

} else {

t1 = a[i];

ans++;

}

}

cout << ans << '\n';

}

}

#include <bits/stdc++.h>

using namespace std;

const int INF = 1000000005;

const int MAXN = 200005;

int t;

int n;

int a[MAXN];

int mn[MAXN * 4], lz[MAXN * 4];

void init(int u = 1, int lo = 1, int hi = n) {

mn[u] = lz[u] = 0;

if (lo != hi) {

int mid = lo + hi >> 1;

init(u << 1, lo, mid);

init(u << 1 ^ 1, mid + 1, hi);

}

}

void propo(int u) {

if (lz[u] == 0) {

return;

}

lz[u << 1] += lz[u];

lz[u << 1 ^ 1] += lz[u];

mn[u << 1] += lz[u];

mn[u << 1 ^ 1] += lz[u];

lz[u] = 0;

}

void incre(int s, int e, int x, int u = 1, int lo = 1, int hi = n) {

if (lo >= s && hi <= e) {

mn[u] += x;

lz[u] += x;

return;

}

propo(u);

int mid = lo + hi >> 1;

if (s <= mid) {

incre(s, e, x, u << 1, lo, mid);

}

if (e > mid) {

incre(s, e, x, u << 1 ^ 1, mid + 1, hi);

}

mn[u] = min(mn[u << 1], mn[u << 1 ^ 1]);

}

int qmn(int s, int e, int u = 1, int lo = 1, int hi = n) {

if (s > e) {

return INF;

}

if (lo >= s && hi <= e) {

return mn[u];

}

propo(u);

int mid = lo + hi >> 1;

int res = INF;

if (s <= mid) {

res = min(res, qmn(s, e, u << 1, lo, mid));

}

if (e > mid) {

res = min(res, qmn(s, e, u << 1 ^ 1, mid + 1, hi));

}

return res;

}

int main() {

ios::sync_with_stdio(0), cin.tie(0);

cin >> t;

while (t--) {

cin >> n;

for (int i = 1; i <= n; i++) {

cin >> a[i];

}

init();

for (int i = 1; i <= n; i++) {

int ndp = min(qmn(1, a[i] - 1) + 1, qmn(a[i], n));

if (i > 1) {

if (a[i - 1] < a[i]) {

incre(1, n, 1);

}

int dp = qmn(a[i - 1], a[i - 1]);

if (ndp < dp) {

incre(a[i - 1], a[i - 1], ndp - dp);

}

}

}

cout << qmn(1, n) << '\n';

}

}

Solve the problem if you have to split the array into $$$k$$$ subsequences, where $$$k$$$ is given in the input ($$$k = 2$$$ for the original problem).

Modified statement

There is an array $$$A$$$ of size $$$N$$$ and an array $$$T$$$ of size $$$K$$$. Initially, $$$T_i = \infty$$$ for all $$$1 \le i \le K$$$. For each time $$$t$$$ from $$$1$$$ to $$$N$$$, the following will happen:

- Select an index $$$1 \le i \le K$$$. If $$$A_t > T_i$$$, we increase the cost by $$$1$$$. Then, we set $$$T_i := A_t$$$.

Find the minimum possible cost after time $$$N$$$ if we select the indices optimally.

Greedy

The order of $$$T$$$ does not matter. Hence for convenience, we will maintain $$$T$$$ in non-decreasing order. At each time $$$t$$$, we will use the following algorithm:

- If $$$A_t > T_K$$$, do the operation on index $$$1$$$.

- Otherwise, find the smallest index $$$1 \le i \le K$$$ where $$$A_t \le T_i$$$ and do the operation on index $$$i$$$.

Proof

Suppose there exists an optimal solution that does not follow our algorithm. We will let $$$OT_{t, i}$$$ denote the value of $$$T_i$$$ before the operation was done at time $$$t$$$ in the optimal solution. Let $$$et$$$ be the earliest time that the operation done by the optimal solution differs from that of the greedy solution.

- Case 1: $$$A_{et} > OT_{et,K}$$$. Since we are maintaining $$$T$$$ in the sorted order, having $$$A_{et} > OT_{et,K}$$$ means that $$$A_{et}$$$ is larger than all elements of $$$T$$$. This means that no matter which index $$$i$$$ we choose to do the operation on, the cost will always increase by $$$1$$$. Suppose an index $$$i > 1$$$ was chosen in the optimal solution. We can always choose to do the operation on index $$$1$$$ instead of index $$$i$$$ and the answer will not be less optimal. This is because if we let $$$T'$$$ be the array $$$T$$$ after the operation was done on index $$$1$$$, $$$T'_p \le OT_{et+1,p}$$$ for all $$$1 \le p \le K$$$ since $$$T'_p = \begin{cases}OT_{et,p+1}&\text{if }p<K\newline A_{et}&\text{if }p=K\end{cases}$$$ while $$$OT_{et+1,p} = \begin{cases}OT_{et,p}&\text{if }p<i\newline OT_{et,p+1}&\text{if }i\le p<K\newline A_{et}&\text{if }p=K\end{cases}$$$.

- Case 2: $$$A_{et} \le OT_{et,K}$$$. For convenience, we will denote that the operation was done on index $$$i$$$ in the greedy solution while the operation was done on index $$$j$$$ based on the optimal solution during time $$$et$$$.

- Case 2A: $$$i < j$$$. In this case, the cost does not increase for both the optimal solution and the greedy solution. However, we can always do an operation on index $$$i$$$ instead of index $$$j$$$ and the answer will not be less optimal. This is because if we let $$$T'$$$ be the array $$$T$$$ after the operation was done on index $$$i$$$, $$$T'_p\le OT_{et+1,p}$$$ for all $$$1\le p\le K$$$ since $$$T'_p = \begin{cases}OT_{et,p}&\text{if }p\neq i\newline A_{et}&\text{if }p=i\end{cases}$$$ while $$$OT_{et+1,p} = \begin{cases}OT_{et,p}&\text{if }p<i\newline A_{et}&\text{if }p=i\newline OT_{et,p-1}&\text{if }i< p\le j\newline OT_{et,p}&\text{if }j<p\le K\end{cases}$$$.

- Case 2B: $$$i > j$$$. For this case, the cost increases for the optimal solution while the cost does not change for the greedy solution. However, it is not trivial to prove that the greedy solution is more optimal as even though it has a smaller cost, it results in a less optimal array $$$T$$$. Hence, we will prove this case below.

Case 2B

We want to come up with a modified solution that does the same operations as the optimal solution for time $$$1\le t<et$$$ and does an operation on index $$$i$$$ during time $$$et$$$. Adopting a similar notation to $$$OT$$$, we will let $$$MT_{t, i}$$$ denote the value of $$$T_i$$$ before the operation was done at time $$$t$$$ in this modified solution. Then, $$$MT_{et+1,p} = \begin{cases}OT_{et,p}&\text{if }p\neq i\newline A_{et}&\text{if } p=i\end{cases}$$$ and $$$OT_{et+1,p}=\begin{cases}OT_{et,p} &\text{if } p<j\newline OT_{et,p+1}&\text{if }j\le p<i-1\newline A_{et}&\text{if }p=i-1\newline OT_{et,p}&\text{if }i\le p\le K\end{cases}$$$. Note that in this case, $$$MT_{et+1,p}\le OT_{et+1,p}$$$ for all $$$1\le p\le K$$$, which means that our modified solution results in a less optimal state than the optimal solution. However, since our modified solution requires one less cost up to this point, we will be able to prove that our modified solution will not perform worse than the optimal solution.

Notice that $$$OT_{et+1,p}\le MT_{et+1,p+1}$$$ for all $$$1\le p<K$$$. We denote that the index that the optimal solution operates on during time $$$t$$$ is $$$x_t$$$. Let $$$r$$$ be the minimum time where $$$et+1\le r\le N$$$ and $$$e_r=N$$$. Due to the above property that $$$OT_{et+1,p}\le MT_{et+1,p+1}$$$ for all $$$1\le p<K$$$, we can let our modified solution do the operation on index $$$x_t+1$$$ for all time $$$et+1\le t<r$$$ and the cost will not be more than the optimal solution. This is because the property that $$$OT_{t+1,p}\le MT_{t+1,p+1}$$$ for all $$$1\le p<K$$$ still holds throughout that time range even after each update. Note that if such an $$$r$$$ does not exist, we can let our modified solution do the operation on index $$$x_t+1$$$ for all time $$$et+1\le t\le K$$$ and we completed coming up with the modified solution with a cost not more than the optimal solution.

However, if such an $$$r$$$ exists, then at time $$$r$$$, since $$$x_r=N$$$, we are no longer able to use the same method. However, let us consider what happens if we let our modified solution do an operation on index $$$1$$$ during time $$$r$$$.

If $$$A_r>MT_{r,K}$$$, it will mean that $$$MT_{r+1,p}=\begin{cases}MT_{r,p+1}&\text{if }p<K\newline A_r&\text{if }p=K\end{cases}$$$ while $$$OT_{r+1,p}=\begin{cases}OT_{r,p}&\text{if }p<K\newline A_r&\text{if }p=K\end{cases}$$$ since $$$OT_{r,K-1}\le MT_{r,K}<A_r$$$. Even though during this time, it might be possible that the cost of the modified solution increases by $$$1$$$ while the cost of the optimal solution remains the same, recall that previously during time $$$i$$$ our modified solution used one less cost than the optimal solution. As a result, the modified solution will end up having a cost of not more than the optimal solution. At the same time, $$$OT_{r+1,p}\le MT_{r+1,p}$$$ for all $$$1\le p\le K$$$. Hence, for all time $$$r<t\le K$$$, we can let our modified solution do the operation on the same index as the optimal solution $$$x_t$$$ and the cost of our modified solution will not be more than that of the optimal solution.

On the other hand, suppose $$$A_r\le MT_{r,K}$$$. Let $$$v$$$ be the minimum position such that $$$A_r\le MT_{r,v}$$$ and let $$$w$$$ be the minimum position such that $$$A_r\le OT_{r,w}$$$. Then, $$$MT_{r+1,p}=\begin{cases}MT_{r,p+1}&\text{if }p<v-1\newline A_r&\text{if }p=v-1\newline MT_{r,p}&\text{if }p\ge v\end{cases}$$$ and $$$OT_{r+1,p}=\begin{cases}OT_{r,p}&\text{if }p<w\newline A_r&\text{if }p=w\newline OT_{r,p-1}&\text{if }p>w\end{cases}$$$. In the same way, the cost of our modified solution might increase while the cost of the optimal solution stays the same, however, $$$OT_{r+1,p}\le MT_{r+1,p}$$$ for all $$$1\le p\le K$$$. - For $$$p<v-1$$$ and $$$p>w$$$, the condition holds since $$$OT_{r,p}\le MT_{r,p+1}$$$ for all $$$1\le p<K$$$. Note that $$$v-1\le w$$$ because of the same inequality as well. - Suppose $$$v-1=w$$$. Then for $$$p=v-1$$$, $$$OT_{r+1,p}=A_r\le A_r=MT_{r+1,p}$$$. From now on, we suppose $$$v-1\neq w$$$ - For $$$p=v-1$$$, $$$OT_{r,v-1}\le A_r$$$ as $$$w$$$ is defined as the minimum position that $$$A_r\le OT_{r,w}$$$ and $$$v-1< w$$$. - For $$$v\le p<w$$$, $$$OT_{r,p}\le MT_{r,p}$$$ as $$$OT_{r,p}<A_r\le MT_{r,p}$$$ - For $$$p=w$$$, $$$A_r\le MT_{r,w}$$$ as $$$v$$$ is defined as the minimum position that $$$A_r\le MT_{r,v}$$$ and $$$v-1<w$$$

Now that we managed to construct a modified solution which follows the greedy algorithm from time $$$1\le t\le et$$$ and is not less optimal than the optimal solution, we can let the optimal solution be our modified solution and find the new $$$et$$$ to get a new modified solution. Hence by induction, our greedy solution is optimal.

1919D - 01 Tree

Author: maomao90

What does the distance of two leaves that share the same parent look like?

What happens if we delete two leaves that share the same parent?

Consider two leaves that share the same parent. They will be adjacent to each other in the dfs order, so their distances will be adjacent in array $$$a$$$. Furthermore, their distances to the root will differ by exactly $$$1$$$ since one of the edges from the parent to its children will have weight $$$0$$$ while the other will have weight $$$1$$$.

If we delete two leaves that share the same parent, the parent itself will become the leaf. Since one of the edges from the parent to the child has weight $$$0$$$, the distance from the parent to the root is equal to the smaller distance between its two children. This means that deleting two leaves that share the same parent is the same as selecting an index $$$i$$$ such that $$$a_i = a_{i - 1} + 1$$$ or $$$a_i = a_{i + 1} + 1$$$, then removing $$$a_i$$$ from array $$$a$$$.

Consider the largest value in $$$a$$$. If it is possible to delete it (meaning there is a value that is exactly one smaller than it to its left or right), we can delete it immediately. This is because keeping the largest value will not help to enable future operations as it can only help to delete elements that are $$$1$$$ greater than it, which is not possible for the largest value.

Now, all we have to do is to maintain all elements that can be deleted and choose the element with the largest value each time. Then, whenever we delete an element, we need to check whether the two adjacent elements become deletable and update accordingly. We can do this using a priority_queue and a linked list in $$$O(n\log n)$$$. Note that many other implementations exist, including several $$$O(n)$$$ solutions.

#include<bits/stdc++.h>

using namespace std;

const int MAXN = 200005;

int n;

int a[MAXN];

int prv[MAXN],nxt[MAXN];

bool in[MAXN];

bool good(int i) {

if (i < 1 || i > n) {

return 0;

}

return a[prv[i]] == a[i] - 1 || a[nxt[i]] == a[i] - 1;

}

int main(){

ios::sync_with_stdio(0), cin.tie(0);

int t; cin >> t;

while (t--) {

cin >> n;

priority_queue<pair<int, int>> pq;

for (int i = 1; i <= n; i++) {

prv[i] = i - 1;

nxt[i] = i + 1;

in[i] = 0;

cin >> a[i];

}

a[n + 1] = a[0] = -2;

for (int i = 1; i <= n; i++) {

if (good(i)) {

in[i] = 1;

pq.push({a[i], i});

}

}

while (!pq.empty()) {

auto [_, i] = pq.top(); pq.pop();

nxt[prv[i]] = nxt[i];

prv[nxt[i]] = prv[i];

if (!in[prv[i]] && good(prv[i])) {

in[prv[i]]=1;

pq.push({a[prv[i]], prv[i]});

}

if (!in[nxt[i]] && good(nxt[i])) {

in[nxt[i]]=1;

pq.push({a[nxt[i]], nxt[i]});

}

}

int mn = n, bad = 0;

for (int i = 1; i <= n; i++) {

bad += !in[i];

mn = min(a[i], mn);

}

if (bad == 1 && mn == 0) {

cout << "YES\n";

} else {

cout << "NO\n";

}

}

}

1919E - Counting Prefixes

Author: maomao90

Try solving the problem if the sum of elements of array $$$a$$$ is equal to $$$s$$$. If we can do this in $$$O(n)$$$ time, we can iterate through all possible values of $$$p_1 \le s \le p_n$$$ and sum up the number of ways for each possible sum.

Consider starting with array $$$a = [1, 1, \ldots, 1, -1, -1, \ldots, -1]$$$ where there are $$$p_n$$$ occurences of $$$1$$$ and $$$p_n - s$$$ occurrences of $$$-1$$$. Then, try inserting $$$(-1, 1)$$$ into the array to fix the number of occurrences of prefix sum starting from the largest value ($$$p_n$$$) to the smallest value ($$$p_1$$$).

Let us try to solve the problem if the sum of elements of array $$$a$$$ is equal to $$$s$$$. Consider starting array $$$a = [1, 1, \ldots, 1, -1, -1, \ldots, -1]$$$ where there are $$$p_n$$$ occurrences of $$$1$$$ and $$$p_n - s$$$ occurrences of $$$-1$$$. Notice that when we insert $$$(-1, 1)$$$ into array $$$a$$$ between positions $$$i$$$ and $$$i + 1$$$ where the sum of $$$a$$$ from $$$1$$$ to $$$i$$$ is $$$s$$$, two new prefix sums $$$s - 1$$$ and $$$s$$$ will be formed while the remaining prefix sums remain the same. Let us try to fix the prefix sums starting from the largest prefix sum to the smallest prefix sum.

In the starting array $$$a$$$, we only have $$$1$$$ occurrence of prefix sum with value $$$p_n$$$. We can insert $$$(-1, 1)$$$ right after it $$$k$$$ times to increase the number of occurrences of prefix sum with value $$$p_n$$$ by $$$k$$$. In the process of doing so, the number of occurrences of prefix sum with value $$$p_n - 1$$$ also increased by $$$k$$$. Now, we want to fix the number of occurrences of prefix sum with value $$$p_n - 1$$$. If we already have $$$x$$$ occurrences but we require $$$y > x$$$ occurrences, we can choose to insert $$$y - 1$$$ pairs of $$$(-1, 1)$$$ right after any of the $$$x$$$ occurrences. We can calculate the number of ways using stars and bars to obtain the formula $$$y - 1\choose y - x$$$.

We continue using a similar idea to fix the number of occurrences of $$$p_n - 2, p_n - 3, \ldots, p_1$$$, each time considering the additional occurrences that were contributed from the previous layer. Each layer can be calculated in $$$O(1)$$$ time after precomputing binomial coefficients, so the entire calculation to count the number of array $$$a$$$ whose sum is $$$s$$$ and has prefix sum consistent to the input takes $$$O(n)$$$ time. Then, we can iterate through all possible $$$p_1 \le s \le p_n$$$ and sum up the answers to obtain a solution that works in $$$O(n^2)$$$ time.

#include <bits/stdc++.h>

using namespace std;

typedef long long ll;

const int INF = 1000000005;

const int MAXN = 200005;

const int MOD = 998244353;

ll fact[MAXN * 2], ifact[MAXN * 2];

int t;

int n;

int f[MAXN * 2], d[MAXN * 2];

inline ll ncr(int n, int r) {

if (r < 0 || n < r) {

return 0;

}

return fact[n] * ifact[r] % MOD * ifact[n - r] % MOD;

}

// count number of a_1 + a_2 + ... + a_n = x

inline ll starbar(int n, int x) {

if (n == 0 && x == 0) {

return 1;

}

return ncr(x + n - 1, x);

}

int main() {

ios::sync_with_stdio(0), cin.tie(0);

fact[0] = 1;

for (int i = 1; i < MAXN * 2; i++) {

fact[i] = fact[i - 1] * i % MOD;

}

ifact[0] = ifact[1] = 1;

for (int i = 2; i < MAXN * 2; i++) {

ifact[i] = MOD - MOD / i * ifact[MOD % i] % MOD;

}

for (int i = 2; i < MAXN * 2; i++) {

ifact[i] = ifact[i - 1] * ifact[i] % MOD;

}

cin >> t;

while (t--) {

cin >> n;

for (int i = 0; i < n * 2 + 5; i++) {

f[i] = 0;

}

n++;

for (int i = 1; i < n; i++) {

int s; cin >> s;

f[s + n]++;

}

f[n]++;

int mn = INF, mx = -INF;

for (int i = 0; i <= 2 * n; i++) {

if (f[i]) {

mn = min(mn, i);

mx = max(mx, i);

}

}

bool bad = 0;

for (int i = mn; i <= mx; i++) {

if (!f[i]) {

bad = 1;

break;

}

}

if (bad || mn == mx) {

cout << 0 << '\n';

continue;

}

ll ans = 0;

for (int x = mx; x >= mn; x--) {

d[mx - 1] = f[mx] + (mx > n) - (mx == x);

for (int i = mx - 2; i >= mn - 1; i--) {

d[i] = f[i + 1] - d[i + 1] + (i >= x) + (i >= n);

}

if (d[mn - 1] != 0) {

continue;

}

ll res = 1;

for (int i = mx - 1; i >= mn; i--) {

res = res * starbar(d[i], f[i] - d[i]) % MOD;

}

ans += res;

if (ans >= MOD) {

ans -= MOD;

}

}

cout << ans << '\n';

}

}

Solve the problem in $$$O(n)$$$ time.

Unfortunately, it seems like ARC146E is identical to this problem :(

1919F1 - Wine Factory (Easy Version)

Author: maomao90

When $$$c_i$$$ and $$$z$$$ equals $$$10^{18}$$$, it means that all the remaining water will always flow into the next water tower. Hence, the answer will be the sum of $$$a_i$$$ minus the amount of water remaining at tower $$$n$$$ after the process.

From hint 1, our new task now is to determine the amount of water remaining at tower $$$n$$$ after the process. Let $$$v_i = a_i - b_i$$$. The remaining amount of water remaining at tower $$$n$$$ is the maximum suffix sum of $$$v$$$, or more formally $$$\max\limits_{1\le k\le n}\ \sum\limits_{i = k}^n v_i$$$. We can use a segment tree where position $$$p$$$ of the segment tree stores $$$\sum\limits_{i = p}^n v_i$$$. The updates can be done using range prefix increment and the queries can be done using range maximum.

Code ReLU segment tree. A similar method can be used to solve the full problem if you combine even more ReLUs. However, it is not very elegant and is much more complicated than the intended solution below.

ReLU is a common activation function used in neural networks which is defined by $$$f(x) = \max(x, 0)$$$. The objective of ReLU segment tree is to compose ReLU-like functions together. More precisely, ReLU segment tree can solve the following problem:

You are given two arrays $$$a$$$ and $$$b$$$ of length $$$n$$$. You are required to answer the following queries:

- $$$\texttt{1 p x y}$$$. Update $$$a_p = x$$$ and $$$b_p = y$$$.

- $$$\texttt{2 l r c}$$$. Output the value of $$$f_l(f_{l+1}(\ldots f_{r-1}(f_r(c))))$$$, where $$$f_i(x) = \max(x - a_i, b_i)$$$.

The main idea to solve the problem is to observe that composing ReLU functions still results in a ReLU function, so we just need to store in each node the resultant function $$$f(x) = \max(x - p, q)$$$ after composing the functions that fall in the range of the segment tree node. For the merge function, we just need to figure out the details of composing two ReLU functions together.

#include <bits/stdc++.h>

using namespace std;

typedef long long ll;

const ll LINF = 1000000000000000005;

const int MAXN = 500005;

int n, q;

int a[MAXN], b[MAXN];

ll c[MAXN];

ll v[MAXN], sv[MAXN];

ll mx[MAXN * 4], lz[MAXN * 4];

void init(int u = 1, int lo = 1, int hi = n) {

lz[u] = 0;

if (lo == hi) {

mx[u] = sv[lo];

} else {

int mid = lo + hi >> 1;

init(u << 1, lo, mid);

init(u << 1 ^ 1, mid + 1, hi);

mx[u] = max(mx[u << 1], mx[u << 1 ^ 1]);

}

}

void propo(int u) {

if (lz[u] == 0) {

return;

}

lz[u << 1] += lz[u];

lz[u << 1 ^ 1] += lz[u];

mx[u << 1] += lz[u];

mx[u << 1 ^ 1] += lz[u];

lz[u] = 0;

}

void incre(int s, int e, ll x, int u = 1, int lo = 1, int hi = n) {

if (lo >= s && hi <= e) {

mx[u] += x;

lz[u] += x;

return;

}

propo(u);

int mid = lo + hi >> 1;

if (s <= mid) {

incre(s, e, x, u << 1, lo, mid);

}

if (e > mid) {

incre(s, e, x, u << 1 ^ 1, mid + 1, hi);

}

mx[u] = max(mx[u << 1], mx[u << 1 ^ 1]);

}

int main() {

ios::sync_with_stdio(0), cin.tie(0);

cin >> n >> q;

for (int i = 1; i <= n; i++) {

cin >> a[i];

}

for (int i = 1; i <= n; i++) {

cin >> b[i];

}

for (int i = 1; i < n; i++) {

cin >> c[i];

}

ll sma = 0;

for (int i = n; i >= 1; i--) {

v[i] = a[i] - b[i];

sv[i] = v[i] + sv[i + 1];

sma += a[i];

}

init();

while (q--) {

int p, x, y; ll z; cin >> p >> x >> y >> z;

sma -= a[p];

incre(1, p, -v[p]);

a[p] = x;

b[p] = y;

v[p] = a[p] - b[p];

sma += a[p];

incre(1, p, v[p]);

cout << sma - max(0ll, mx[1]) << '\n';

}

}

1919F2 - Wine Factory (Hard Version)

Author: maomao90

Try modelling this problem into a maximum flow problem.

Try using minimum cut to find the maximum flow.

Speed up finding the minimum cut using a segment tree.

Consider a flow graph with $$$n + 2$$$ vertices. Let the source vertex be $$$s = n + 1$$$ and the sink vertex be $$$t = n + 2$$$. For each $$$i$$$ from $$$1$$$ to $$$n$$$, add edge $$$s\rightarrow i$$$ with capacity $$$a_i$$$ and another edge $$$i\rightarrow t$$$ with capacity $$$b_i$$$. Then for each $$$i$$$ from $$$1$$$ to $$$n - 1$$$, add edge $$$i\rightarrow i + 1$$$ with capacity $$$c_i$$$. The maximum flow from $$$s$$$ to $$$t$$$ will be the answer to the problem.

Let us try to find the minimum cut of the above graph instead.

Claim: The minimum cut will contain exactly one of $$$s\rightarrow i$$$ or $$$i\rightarrow t$$$ for all $$$1\le i\le n$$$.

Proof: If the minimum cut does not contain both $$$s\rightarrow i$$$ and $$$i\rightarrow t$$$, $$$s$$$ can reach $$$t$$$ through vertex $$$i$$$ and hence it is not a minimum cut. Now, we will prove why the minimum cut cannot contain both $$$s\rightarrow i$$$ and $$$i\rightarrow t$$$. Suppose there exists a minimum cut where there exists a vertex $$$1\le i\le n$$$ where $$$s\rightarrow i$$$ and $$$i\rightarrow t$$$ are both in the minimum cut. We will consider two cases:

- Case 1: $$$s$$$ can reach $$$i$$$ (through some sequence of vertices $$$s\rightarrow j\rightarrow j+1\rightarrow \ldots \rightarrow i$$$ where $$$j < i$$$). If our minimum cut only contains $$$i\rightarrow t$$$ without $$$s\rightarrow i$$$, nothing changes as $$$s$$$ was already able to reach $$$i$$$ when $$$s\rightarrow i$$$ was removed. Hence, $$$s$$$ will still be unable to reach $$$t$$$ and we found a minimum cut that has equal or smaller cost.

- Case 2: $$$s$$$ cannot reach $$$i$$$. If our minimum cut only contains $$$s\rightarrow i$$$ without $$$i\rightarrow t$$$, nothing changes as $$$s$$$ is still unable to reach $$$i$$$, so we cannot make use of the edge $$$i\rightarrow t$$$ to reach $$$t$$$ from $$$s$$$. Hence, $$$s$$$ will still be unable to reach $$$t$$$ and we found a minimum cut that has equal or smaller cost.

Now, all we have to do is select for each $$$1\le i\le n$$$, whether to cut the edge $$$s\rightarrow i$$$ or the edge $$$i\rightarrow t$$$. Let us use a string $$$x$$$ consisting of characters $$$\texttt{A}$$$ and $$$\texttt{B}$$$ to represent this. $$$x_i = \texttt{A}$$$ means we decide to cut the edge $$$s\rightarrow i$$$ for a cost of $$$a_i$$$ and $$$x_i = \texttt{B}$$$ means we decide to cut the edge from $$$i\rightarrow t$$$ for a cost of $$$b_i$$$. Notice that whenever we have $$$x_i = \texttt{B}$$$ and $$$x_{i + 1} = \texttt{A}$$$, $$$s$$$ can reach $$$t$$$ through $$$s\rightarrow i\rightarrow i + 1\rightarrow t$$$. To prevent this, we have to cut the edge $$$i\rightarrow i + 1$$$ for a cost of $$$c_i$$$.

To handle updates, we can use a segment tree. Each node of the segment tree stores the minimum possible cost for each of the four combinations of the two endpoints being $$$\texttt{A}$$$ or $$$\texttt{B}$$$. When merging the segment tree nodes, add a cost of $$$c$$$ when the right endpoint of the left node is $$$\texttt{B}$$$ and the left endpoint of the right node is $$$\texttt{A}$$$. The final time complexity is $$$O(n\log n)$$$ as only a segment tree is used.

#include <bits/stdc++.h>

using namespace std;

typedef long long ll;

const ll LINF = 1000000000000000005ll;

const int MAXN = 500005;

int n, q;

int a[MAXN], b[MAXN];

ll c[MAXN];

ll st[MAXN * 4][2][2];

void merge(int u, int lo, int hi) {

int mid = lo + hi >> 1, lc = u << 1, rc = u << 1 ^ 1;

for (int l = 0; l < 2; l++) {

for (int r = 0; r < 2; r++) {

st[u][l][r] = min({st[lc][l][0] + st[rc][0][r],

st[lc][l][1] + st[rc][1][r],

st[lc][l][0] + st[rc][1][r],

st[lc][l][1] + st[rc][0][r] + c[mid]});

}

}

}

void init(int u = 1, int lo = 1, int hi = n) {

if (lo == hi) {

st[u][0][0] = a[lo];

st[u][1][1] = b[lo];

st[u][1][0] = st[u][0][1] = LINF;

return;

}

int mid = lo + hi >> 1, lc = u << 1, rc = u << 1 ^ 1;

init(lc, lo, mid);

init(rc, mid + 1, hi);

merge(u, lo, hi);

}

void upd(int p, int u = 1, int lo = 1, int hi = n) {

if (lo == hi) {

st[u][0][0] = a[lo];

st[u][1][1] = b[lo];

st[u][1][0] = st[u][0][1] = LINF;

return;

}

int mid = lo + hi >> 1, lc = u << 1, rc = u << 1 ^ 1;

if (p <= mid) {

upd(p, lc, lo, mid);

} else {

upd(p, rc, mid + 1, hi);

}

merge(u, lo, hi);

}

int main() {

ios::sync_with_stdio(0), cin.tie(0);

cin >> n >> q;

for (int i = 1; i <= n; i++) {

cin >> a[i];

}

for (int i = 1; i <= n; i++) {

cin >> b[i];

}

for (int i = 1; i < n; i++) {

cin >> c[i];

}

init();

while (q--) {

int p, x, y; ll z; cin >> p >> x >> y >> z;

a[p] = x; b[p] = y; c[p] = z;

upd(p);

cout << min({st[1][0][0], st[1][0][1], st[1][1][0], st[1][1][1]}) << '\n';

}

}

1919G - Tree LGM

Author: maomao90

Think about how you would construct matrix $$$s$$$ if you were given the tree.

If $$$s_{i, i} = \mathtt{0}$$$, what should the value of $$$s_{j, i}$$$ be for all $$$1\le j\le n$$$?

For some $$$i$$$ where $$$s_{i, i} = \mathtt{1}$$$, let $$$Z$$$ be a set containing all the vertices $$$j$$$ where $$$s_{j, i} = \mathtt{0}$$$. More formally, $$$Z = \{j\ |\ 1\le j\le n\text{ and } s_{j, i} = \mathtt{0}\}$$$. Does $$$Z$$$ have any special properties?

Using hint 3, can we break down the problem into smaller sub-problems?

Try solving the problem if the values in each column are a constant. In other words, $$$s_{i, j} = s_{j, j}$$$ for all $$$1\le i\le n$$$ and $$$1\le j\le n$$$.

Let us consider how we can code the checker for this problem. In other words, if we are given a tree, how can we construct matrix $$$s$$$? We can solve this using dynamic programming. $$$s_{i, j} = \mathtt{1}$$$ if and only if at least one child $$$c$$$ of vertex $$$j$$$ (when the tree is rooted at vertex $$$i$$$) has $$$s_{i, c} = \mathtt{0}$$$. This is because the player can move the coin from vertex $$$j$$$ to vertex $$$c$$$ which will cause the opponent to be in a losing state.

For convenience, we will call a vertex $$$i$$$ special if there exists some $$$1\le j\le n$$$ where $$$s_{j, i} \neq s_{i, i}$$$. Suppose there exist some $$$i$$$ where $$$s_{i, i} = \mathtt{0}$$$. This means that moving the coin to any of the neighbours of $$$i$$$ results in a winning state for the opponent. If the tree was rooted at some other vertex $$$j\neq i$$$, it will still be a losing state as it reduces the options that the player can move the coin to, so $$$s_{j, i}$$$ should be $$$\mathtt{0}$$$ for all $$$1\le j\le n$$$. This means that special vertices must have $$$s_{i, i} = \mathtt{1}$$$

Now, let us take a look at special vertices. Let $$$x$$$ be a special vertex, meaning $$$s_{x, x} = \mathtt{1}$$$ and there exist some $$$j$$$ where $$$s_{j, x} = \mathtt{0}$$$. Let $$$Z$$$ be a set containing all the vertices $$$j$$$ where $$$s_{j, x} = \mathtt{0}$$$. More formally, $$$Z = \{j\ |\ 1\le j\le n\text{ and } s_{j, x} = \mathtt{0}\}$$$. $$$Z$$$ cannot be empty due to the property of special vertices. Notice that whenever we choose to root at some vertex $$$j\neq x$$$, the number of children of $$$x$$$ decreases by exactly $$$1$$$. This is because the neighbour that lies on the path from vertex $$$x$$$ to vertex $$$j$$$ becomes the parent of $$$x$$$ instead of the child of $$$x$$$. If rooting the tree at vertex $$$x$$$ is a winning state but rooting the tree at some other vertex $$$j$$$ results in a losing state instead, it means that the only winning move is to move the coin from vertex $$$x$$$ to the neighbour that is on the path from vertex $$$x$$$ to $$$j$$$.

Let $$$y$$$ denote the only neighbour of vertex $$$x$$$ where we can move the coin from vertex $$$x$$$ to vertex $$$y$$$ and win. In other words, $$$y$$$ is the neighbour of vertex $$$x$$$ where $$$y$$$ lies on the path of the vertices in set $$$Z$$$ and $$$x$$$. This means that $$$Z$$$ is the set of vertices that are in the subtree of $$$y$$$ rooted at vertex $$$x$$$.

Now, let us try to find vertex $$$y$$$. Notice that $$$s_{y, y} = \mathtt{1}$$$. This is because $$$s_{y, x} = \mathtt{0}$$$, so the coin can be moved from vertex $$$y$$$ to vertex $$$x$$$ to result in a losing state for the opponent. Furthermore, $$$s_{j, y} = \mathtt{0}$$$ if and only if $$$j$$$ is not in $$$Z$$$, otherwise $$$s_{j, y} = \mathtt{1}$$$. This is because $$$s_{x, y} = \mathtt{0}$$$ since moving the coin from vertex $$$x$$$ to vertex $$$y$$$ is a winning move for the first player. For all other vertex $$$u\in Z$$$ that is not $$$y$$$, this property will not hold as even if $$$s_{u, u} = \mathtt{1}$$$ and $$$s_{x, u} = \mathtt{0}$$$, $$$s_{y, u}$$$ will be equal to $$$\mathtt{0}$$$ as well as the tree being rooted at $$$x$$$ has the same effect as if it was rooted at $$$y$$$. Since $$$y \in Z$$$, $$$s_{y, u} = \mathtt{0}$$$ does not satisfy $$$s_{j, u} = \mathtt{1}$$$ for all $$$j$$$ in $$$Z$$$.

Since $$$y$$$ is a neighbour of vertex $$$x$$$, we know that there is an edge between vertex $$$y$$$ and $$$x$$$. Furthermore, we know that if the edge between vertex $$$y$$$ and $$$x$$$ is removed, the set of vertices $$$Z$$$ forms a single connected component containing $$$y$$$, while the set of vertices not in $$$Z$$$ forms another connected component containing $$$x$$$. This means that we can recursively solve the problem for the two connected components to check whether the values in the matrix $$$s$$$ are valid within their components.

After recursively solving for each connected component, we are only left with non-special vertices ($$$s_{j, i} = s_{i, i}$$$ for all $$$1\le j\le n$$$) and some special vertices that already have an edge that connects to outside the component. Non-special vertices with $$$s_{i, i} = \mathtt{1}$$$ has to be connected to at least $$$2$$$ non-special vertices with $$$s_{i, i} = \mathtt{0}$$$. The most optimal way to do this is to form a line 0 — 1 — 0 — 1 — 0 as it requires the least amount of $$$s_{i, i} = \mathtt{0}$$$. If there is not enough $$$s_{i, i} = \mathtt{0}$$$ to form the line, a solution does not exist. Otherwise, connect the left-over $$$s_{i, i} = \mathtt{0}$$$ to any of $$$s_{i, i} = \mathtt{1}$$$. On the other hand, special vertices can either be connected to nothing, connected to other special vertices, or connected to non-special vertices with $$$s_{i, i} = \mathtt{1}$$$.

For the final step, we need to check whether $$$s_{i, j}$$$ is consistent when $$$i$$$ and $$$j$$$ are in different components (i.e. ($$$i\in Z$$$ and $$$j\notin Z$$$) or ($$$i\notin Z$$$ and $$$j\in Z$$$)). Notice that $$$s_{i, j} = s_{x, j}$$$ for all $$$i\in Z$$$ and $$$j\notin Z$$$ and $$$j\neq x$$$, and $$$s_{i, j} = s_{y, j}$$$ for all $$$i\notin Z$$$ and $$$j\in Z$$$ and $$$j\neq y$$$. From the steps above, we managed to account for every value in the matrix, hence if matrix $$$s$$$ is consistent through all the steps, the constructed tree would be valid as well.

We can make use of xor hash to find vertex $$$x$$$ together with its corresponding vertex $$$y$$$. With xor hash, the time complexity is $$$O(n^2)$$$. Well-optimised bitset code with time complexity of $$$O(\frac{n^3}{w})$$$ can pass as well.

#include <bits/stdc++.h>

using namespace std;

const int MAXN = 5005;

mt19937_64 rnd(chrono::high_resolution_clock::now().time_since_epoch().count());

int n;

unsigned long long r[MAXN], hsh[MAXN], totr;

string s[MAXN];

vector<pair<int, int>> ans;

bool done[MAXN];

bool solve(vector<int> grp) {

int pr = -1, pl = -1;

vector<int> lft, rht;

for (int i : grp) {

if (s[i][i] == '0' || done[i] || hsh[i] == totr) {

continue;

}

rht.clear();

for (int j : grp) {

if (s[j][i] == '0') {

lft.push_back(j);

} else {

rht.push_back(j);

}

}

if (!lft.empty()) {

pr = i;

break;

}

}

if (pr == -1) {

vector<int> dv, zero, one;

for (int i : grp) {

if (done[i]) {

dv.push_back(i);

} else if (s[i][i] == '0') {

zero.push_back(i);

} else {

one.push_back(i);

}

}

for (int i = 1; i < dv.size(); i++) {

ans.push_back({dv[i - 1], dv[i]});

}

if (one.empty() && zero.empty()) {

return 1;

}

if (one.size() >= zero.size()) {

return 0;

}

if (one.empty()) {

if (zero.size() >= 2 || !dv.empty()) {

return 0;

}

return 1;

}

for (int i = 0; i < one.size(); i++) {

ans.push_back({zero[i], one[i]});

ans.push_back({one[i], zero[i + 1]});

}

for (int i = one.size() + 1; i < zero.size(); i++) {

ans.push_back({one[0], zero[i]});

}

if (!dv.empty()) {

ans.push_back({one[0], dv[0]});

}

return 1;

}

for (int i : lft) {

if (s[i][i] == '0' || done[i] || ((hsh[i] ^ hsh[pr]) != totr)) {

continue;

}

vector<int> trht;

for (int j : grp) {

if (s[j][i] == '0') {

trht.push_back(j);

}

}

if (trht == rht) {

pl = i;

break;

}

}

if (pl == -1) {

return 0;

}

for (int i : lft) {

for (int j : rht) {

if (j == pr) {

continue;

}

if (s[i][j] != s[pr][j]) {

return 0;

}

}

}

for (int i : rht) {

for (int j : lft) {

if (j == pl) {

continue;

}

if (s[i][j] != s[pl][j]) {

return 0;

}

}

}

ans.push_back({pl, pr});

done[pl] = done[pr] = 1;

return solve(lft) && solve(rht);

}

int main() {

ios::sync_with_stdio(0), cin.tie(0);

cin >> n;

for (int i = 0; i < n; i++) {

cin >> s[i];

}

for (int i = 0; i < n; i++) {

r[i] = rnd();

totr ^= r[i];

}

for (int i = 0; i < n; i++) {

for (int j = 0; j < n; j++) {

if (s[i][j] == '1') {

hsh[j] ^= r[i];

}

}

}

bool pos = 1;

for (int i = 0; i < n; i++) {

if (s[i][i] == '1') {

continue;

}

for (int j = 0; j < n; j++) {

if (s[j][i] == '1') {

pos = 0;

break;

}

}

}

if (!pos) {

cout << "NO\n";

return 0;

}

vector<int> v(n, 0);

iota(v.begin(), v.end(), 0);

if (!solve(v)) {

cout << "NO\n";

return 0;

}

cout << "YES\n";

for (auto [u, v] : ans) {

cout << u + 1 << ' ' << v + 1 << '\n';

}

}

1919H - Tree Diameter

Author: maomao90

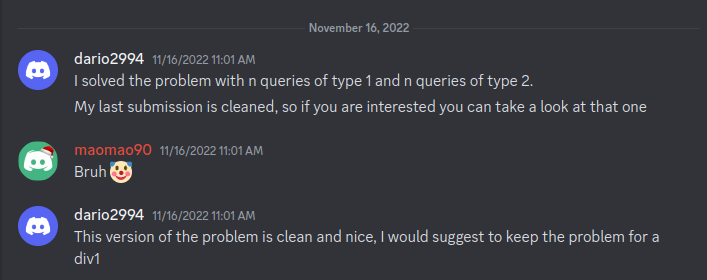

Full solution: dario2994

The original problem allowed $$$5n$$$ type 1 queries and $$$n$$$ type 2 queries and was used as the last problem of a Div. 2 round. When we opened the round for testing, dario2994 solved the problem using $$$2n$$$ type 1 queries, and a few days later, he managed to improve his solution to use only $$$n$$$ type 1 queries. This was what made us decide to change this round into a Div. 1 round instead of a Div. 2 round.

Try rooting the tree at an edge.

Use $$$n - 2$$$ of query $$$2$$$ to find the distance of every edge to the root. For convenience, we will call the distance of an edge to the root the depth of the edge.

We start with only the root edge, then add edges of depth $$$0$$$, followed by depth $$$1, 2, \ldots$$$

If we want to add a new edge with depth $$$i$$$, we need to attach it to one of the edges with depth $$$i - 1$$$. We can let the weight of the edge we want to attach be $$$10^9$$$ to force the diameter to pass through it, then let the edges of depth $$$i - 1$$$ have weights be different multiples of $$$n$$$. This way, we can determine which edges of depth $$$i - 1$$$ are used in the diameter (unless the two largest edges are used).

Make use of isomorphism to handle the case in hint 4 where the largest two edges are used.

If isomorphism cannot be used, find a leaf edge of a lower depth than the query edge and force the diameter to pass through the leaf edge and the query edge. Then, only 1 edge of depth $$$i - 1$$$ will be used and the edge weights for edges of depth $$$i - 1$$$ can follow a similar structure as hint 4.

We will root the tree at edge $$$1$$$. Then, use $$$n - 2$$$ of query $$$2$$$ to find the distance of every edge to the root. For convenience, we will call the distance of an edge to the root the depth of the edge. Our objective is to add the edges in increasing order of depth, so when we are inserting an edge of depth $$$i$$$, all edges of depth $$$i - 1$$$ are already inserted and we just have to figure out which edge of depth $$$i - 1$$$ we have to attach the edge of depth $$$i$$$ to.

For convenience, the edge weights used in query $$$1$$$ will be $$$1$$$ by default unless otherwise stated. Let $$$c_i$$$ store the list of edges with depth $$$i$$$. Suppose we want to insert edge $$$u$$$ into the tree and the depth of edge $$$u$$$ is $$$d$$$. We let the weight of the edge $$$u$$$ be $$$10 ^ 9$$$ and the weight of edges in $$$c_{d - 1}$$$ be $$$n, 2n, 3n, \ldots, (|c_{d - 1}| - 2)n, (|c_{d - 1}| - 1)n, (|c_{d - 1}| - 1)n$$$. The diameter will pass through edge $$$u$$$, the parent edge of $$$u$$$, as well as one edge of weight $$$(|c_{d - 1}| - 1)n$$$. If we calculate $$$\left\lfloor\frac{\text{diameter} - 10^9}{n}\right\rfloor - (|c_{d - 1}| - 1)$$$, we will be able to tell the index of the parent edge of $$$u$$$.

However, there is one exception. When the parent edge of $$$u$$$ is one of the last 2 edges of $$$c_{d - 1}$$$, we are unable to differentiate between the two of them as they have the same weight. This is not a problem if the last 2 edges are isomorphic to each other, as attaching $$$u$$$ to either parent results in the same tree. For now, we will assume that the last 2 edges of $$$c_{d - 1}$$$ are isomorphic to each other.

However, after attaching edge $$$u$$$ to one last 2 edges in $$$c_{d - 1}$$$, they are no longer isomorphic. Hence, we need to use a different method to insert the remaining edges of depth $$$d$$$. Let the new edge that we want to insert be $$$v$$$. Let the weight of edges $$$u$$$ and $$$v$$$ be $$$10^9$$$ and the weights of edges in $$$c_{d - 1}$$$ be the same as before. Now, we can use $$$\left\lfloor\frac{\text{diameter} - 2\cdot 10^9}{n}\right\rfloor$$$ to determine whether edge $$$v$$$ share the same parent as $$$u$$$, and if it does not share the same parent, it can still determine the index of the parent edge of $$$v$$$. With the additional information of whether edge $$$v$$$ shares the same parent as edge $$$u$$$, we will be able to differentiate the last 2 edges of $$$c_{d - 1}$$$ from each other.

Now, we just need to handle the issue where the last 2 edges of $$$c_{d - 1}$$$ are not isomorphic. When we only have the root edge at the start, the left and right ends of the edge are isomorphic (note that for the root edge, we consider it as 2 separate edges, one with the left endpoint and one with the right endpoint). We try to maintain the isomorphism as we add edges of increasing depth. Suppose the last two edges of $$$c_{d - 1}$$$ are isomorphic. Let the two edges be $$$a$$$ and $$$b$$$. Then, we insert edges of depth $$$d$$$ using the above method. Let the child edges attached to $$$a$$$ and $$$b$$$ be represented by sets $$$S_a$$$ and $$$S_b$$$ respectively. If either $$$S_a$$$ or $$$S_b$$$ has sizes at least $$$2$$$, the two edges in the same set will be isomorphic, so we can let those 2 edges be the last 2 edges of $$$c_d$$$. Now, the sizes of $$$S_a$$$ and $$$S_b$$$ are both strictly smaller than $$$2$$$. If the sizes of both sets are exactly $$$1$$$, the two edges from each set will be isomorphic as well as $$$a$$$ and $$$b$$$ are isomorphic. Now, the only case left is if at least one of the sets is empty.

Without loss of generality, assume that $$$S_a$$$ is empty. Since it is no longer possible to maintain two isomorphic edges, we now change our objective to find a leaf (it will be clear why in the following paragraphs). If $$$S_b$$$ is empty as well, both $$$a$$$ and $$$b$$$ are leaves so we can choose any one of them. If $$$S_b$$$ is not empty, then $$$a$$$ and $$$b$$$ are no longer isomorphic due to their children. This means that we cannot simply use $$$b$$$ as the leaf $$$S_a$$$ might be children of $$$b$$$ instead of $$$a$$$ as we did not differentiate $$$a$$$ and $$$b$$$ in the previous paragraphs. To determine whether $$$S_a$$$ belongs to $$$a$$$ or $$$b$$$, we can make use of one type 2 query to find the distance between one of the edges in $$$S_a$$$ and $$$a$$$. If the distance is $$$0$$$, it means that $$$S_a$$$ belongs to $$$a$$$. Otherwise, the distance will be $$$1$$$ and $$$S_a$$$ belongs to $$$b$$$.

Now that we found a leaf, we can use the following method to insert an edge $$$u$$$ of depth $$$d$$$. We let the weight of the edge $$$u$$$ and the leaf edge be $$$10 ^ 9$$$ and the weight of edges in $$$c_{d - 1}$$$ be $$$n, 2n, 3n, \ldots, (|c_{d - 1}| - 2)n, (|c_{d - 1}| - 1)n, |c_{d - 1}|n$$$. The diameter will pass through edge $$$u$$$, the leaf edge, and only one edge of depth $$$d - 1$$$ which is the parent edge of $$$u$$$. Hence, after finding a leaf edge, we can uniquely determine the parent edge from $$$\left\lfloor\frac{\text{diameter} - 2\cdot 10^9}{n}\right\rfloor$$$.

We used $$$n - 2$$$ type 1 queries and $$$n - 1$$$ type 2 queries in total. This is because we used a single type 1 query for each non-root edge. We used $$$n - 2$$$ type 2 queries at the start, and we only used $$$1$$$ additional type 2 query when we were no longer able to maintain two isomorphic edges and changed our methodology to use a leaf edge instead.

#include <bits/stdc++.h>

using namespace std;

typedef long long ll;

const int INF = 1000000000;

const int MAXN = 1000;

int n;

int lvl[MAXN + 5];

int pe[MAXN + 5];

vector<int> ch[MAXN + 5];

ll query(vector<int> a) {

cout << "? 1";

for (int i = 1; i < n; i++) {

cout << ' ' << a[i];

}

cout << endl;

ll res; cin >> res;

return res;

}

int query(int a, int b) {

cout << "? 2 " << a << ' ' << b << endl;

int res; cin >> res;

return res;

}

int main() {

cin >> n;

for (int i = 2; i < n; i++) {

lvl[i] = query(1, i);

}

int ptr = 3;

vector<int> base = {1, 2};

pe[1] = pe[2] = 1;

bool iso = 1;

int piv = -1;

for (int l = 0; l < n; l++) {

vector<int> a(n, 1);

int m = base.size();

for (int i = 0; i < m; i++) {

a[pe[base[i]]] = min(i + 1, m - iso) * MAXN;

}

if (!iso) {

a[pe[piv]] = INF;

}

bool ciso = 0;

for (int u = 2; u < n; u++) {

if (lvl[u] != l) {

continue;

}

a[u] = INF;

ll res = query(a) - INF;

a[u] = 1;

if (!iso || ciso) {

res -= INF;

}

int id = res / MAXN;

if (iso && l) {

id -= m - 1;

}

int v = ptr++;

pe[v] = u;

if (ciso) {

if ((l == 0 && id == 0) || id == -(m - 1)) {

ch[base[m - 2]].push_back(v);

} else if (id == m - 1) {

ch[base[m - 1]].push_back(v);

} else {

ch[base[id - 1]].push_back(v);

}

} else if (iso && id == m - 1) {

ch[base[m - 2]].push_back(v);

ciso = 1;

a[u] = INF;

} else {

ch[base[id - 1]].push_back(v);

}

}

if (m >= 2 && ch[base[m - 2]].size() > ch[base[m - 1]].size()) {

swap(base[m - 2], base[m - 1]);

}

vector<int> nbase;

for (int i = 0; i < m; i++) {

for (int j : ch[base[i]]) {

nbase.push_back(j);

}

}

if (!iso || ch[base[m - 1]].size() >= 2 || ch[base[m - 2]].size() == 1) {

base = nbase;

continue;

}

if (ch[base[m - 1]].empty()) {

piv = base[m - 1];

} else {

ll res = query(pe[ch[base[m - 1]][0]], pe[base[m - 1]]);

if (res) {

swap(base[m - 2], base[m - 1]);

swap(ch[base[m - 2]], ch[base[m - 1]]);

}

piv = base[m - 2];

}

iso = 0;

base = nbase;

}

cout << '!' << endl;

cout << 1 << ' ' << 2 << endl;

for (int u = 1; u <= n; u++) {

for (int v : ch[u]) {

cout << u << ' ' << v << endl;

}

}

}

Thanks for fast editorial

Thank you for the contest!

C was tough

Yeah definitely, I think it should be around 1700 rated. Actually, I thought to find the longest non-increasing subsequence and remove it from the array. Then I have to calculate the cost of the remaining portion of the array. That should be the answer. But I could not implement it.

people are saying that they tried this and it gives WA too!

Yes i implemented that solution , got WA on pretest 2

Same :(

yes same bro but why?? Anyone knows why it is giving WA

One of the Longest non-increasing is

9 8 3 1but if you split it like that your answer will be3but we can instead split in the following way:9 8 3(end)and2 2 3 3 1 10 6In this case answer is2.What if we first list those elements which are like a[i]<a[i+1] and then find longest non-increasing subsequence and remove them from array a to another array b .

Take example of your test case : Element which are like a[i]<a[i+1] are

2 3 3 9 1 10 3 6now longest non-increasing subsequence is3 3 3or3 3 1(Note that first two 3's are reffering to same element in array) , remove either of them from array a we will get( if we remove former) :3 3and2 2 9 8 3 1 10 6We get answer2which is right.Please provide some counterexample if its wrong, I checked it for many test cases and get correct answers, sadly I couldn't implement it in contest :(

As far as I understood you take $$$a_i$$$ and $$$a_{i + 1}$$$ if $$$a_i < a_{i + 1}$$$ holds.

Considering above logic you will first make array containing $$$( 1, 3 )$$$ to consider for Longest non-increasing subsequence candidates.

So you split the array as $$$( 1 )$$$ and $$$( 2, 3, 3)$$$ which gives answer of $$$1$$$ but the actual answer is $$$0$$$ which can be achieved by splitting as $$$( 2, 1)$$$ and $$$( 3, 3)$$$

I am getting 0 but still fail pretest 2.

I find the longest decreasing subsequence. and find the plenty in the remaining elements.

thanks i got it why it doesnt works

Do you know the link of the Problem C with K subsequences? Can you give me it?

Authors wouldn't have the put the easy version in contest, if they found it on the net :p

Thank you, you helped me realize something new about codeforce!

.

same i too get wa

agree :))

IMO, C was somewhat tricky to implement but the concept isn't that hard. I think D was pretty tricky, conceptually.

Can you explain me the concept of C?

You just greedy it. Maintain the two sets and keep on adding them in order. You do have to be careful with how to handle each case though.

Longest non-increasing subsequence can fail sometimes, example 4 3 2 1 8 4 2 1.

If your code detects 4 3 2 2 1, penalty is 1, but optimal answer is 0 (4 3 2 1 and 8 4 2 1).

Oh right!

this case is invalid

Can you explain why? I don't understand

$$$ a_1,a_2,...,a_n \ (a_i\le n)$$$ but we can replace 9 by 8

Thanks, I did not notice that!

I was trying doing it with LIS for whole 2 hours. If only i was able to observe this faster.

Okay so I randomly thought of a testcase: 8 9 10 2 11 7 4 3

The answer for this one is 2 if we split this into 8 2 and 9 10 11 7 4 3

however when I ran the codes submitted by some of the top coders for problem C

I got different outputs from them. Some gave 1, some gave 2 and some 3.

I tried to run Tourist's code and his code's answer was 3.

Now this is really confusing as hell for a newbie like me, was the problem faulty or am I tripping?

This testcase is not valid, as elements should be <= size of the array.

Thank you for pointing out!

I find the longest decreasing subsequence and I am getting 0. still got WA Iin pretest 2

I firstly implemented this way using longest subsequence, but it was WA. Than I implemented greedy that is accepted.

Can you pls share your approach?

Accepted approach is about the same as in editorial

Basically: any item should either be in s or t It doesn't matter which one so have the last item inserted in each one in 2 vars: t,s if (s < t) swap(s,t) so t is the minimum(doesn't matter just wanna have the minimum)

you agree that the most optimal approach is that the current element of the array goes to the one with the minimum, if it's less than min it's the best, if it was larger than min but smaller than the other one you put it into that, still free since it's smaller

otherwise put it inside the any of those u like(doesn't matter since you'll swap them if wasn't good) in any of them the cost will be increased by one

I did something similar and received WA. Time to try to solve it another way.

I thought of that too and implemented it WA 2 but for anykind of tc like this: 1 2 3 4 5 6 7 8 9 where the ans is n-1, it'd output n

but just going on the paper for 5secs and it clicked instantly got the exact approach of editorial

it was nice

I tried with longest decreasing subsequence removal from the array and calculating the rest array i was sure it was right but wasn't able to implement it.

i thought the same but could not implement it

Very same

I wrote DP code for part B and it ran out of memory, then I came up with a simpler approach. Figured the same would happen for part C, so I didn't even try using DP and ran out of time trying to think of a better solution. Oops.

I was going for the same approach , but calculated the value for the output of last sample case as 5 in my mind. The simpler approach was my only guess

greedy worked

Way to hard spent like 10 minutes trying to understand it

Very good competition!

2024 will be a good year, it seems to me because the competition was cool!

Thanks maomao90 for the competition.

I kept my promise!

Happy New Year!

thank you for this fast and organized editorial.

FastEditorialForces!

russian???

F1 statement: "There are n wizard's..."

Me: "Well.... okay, there are n Jesuses..."

P.s. really cool problems and thank you for super fast editorial!

Is the plural of Jesus Jesi?

proofByAcForces

I solved F1 with sqrt decomposition. Why no mention about it in the editorial?

For the same reason the segtree solution to C and the DP solution to B weren't mentioned.

wait but the segtree solution to C is literally mentioned as solution 2

Oh, my bad. A non-sarcastic answer to your question: it's overkill. Also, probably not forseen or intended, because N = 2 * 10^5.

DP solution to B will probably time limit. could you tell me your approach for it, maybe I got it wrong.

I didn't use DP, and I don't know how the solution worked, I just saw an array named dp while hacking. 240512150

oh. I got it wrong then, you can check my solution also if you want 240611932

There is an O(nlogn) dp on problem B

Side note, calling a magic number variable in your code "magic" is hysterical.

got that habit from tfg

i also solved F2 with sqrt decomposition; much simpler than the editorial:

for each block (sqrt(n)), save the following information: - if no (additional) water flows into the first water tower (of the block), how much wine would the block produce? - how much water is flows out of the last tower (considering capacity limits) - how much water that flows into the first water tower can be converted into wine (considering intermediate capacity limits) - the maximum capacity left, i.e. how much water can simply flow from the first to the last tower (i.e. in F1 this is around 10^18)

then you can simply "simulate" the process on the blocks after updating one block, i.e. the complexity is $$$O(n \sqrt{n} + q \sqrt{n})$$$

Edit: Submission: https://codeforces.me/contest/1919/submission/261156832

Did anyone solve problem C with a cartesian tree. I tried so but i couldn't get accepted. My idea was to create de max cartesian tree and count the total amount of left children minus one. Took the tree code from geeksforgeeks. Also mention that if two elements are equal the one from the right will be the child. Here's my approach 240585200.

Thanks for the resources, this is the first time I heard about it.

same here

Do better. B, C have 6 pages long proof.

Can you provide me with a proof for why the greedy approach they gave works in the third case where x < ai < y ? I did not understand the following part:

This is because if we consider making the same choices for the remaining elements ai+1 to an in both scenarios, there will be at most one time where the former scenario will add one penalty more than the latter scenario as the former scenario has a smaller last element after inserting ai . After that happens, the back of the arrays in both scenarios will become the same and hence, the former case will never be less optimal.

Recall that,

Suppose we appended

a[i]to x, thena[i]becomes the new x and we have thata[i] <= y. Recall that sincex < a[i], we get a penalty. Let's start by observing all the possible cases in the future. Suppose we have somea[j]wherei < j,With these 3 possible cases in hand, notice (1) and (3) have a trivial result. From here, it is easy to see the optimal option for (2) is to append it to y since we get no penalty. Sometimes, appending to x may also work but it can be proven that it is no better than appending to y.

Delving a bit more, we see that Case (1) and (3) are intuitive, we are always replacing the smaller element with something larger thereby making it less likely that we get penalties in the future.

On the other hand, Case (2) seems a bit counterintuitive, since appending

a[i]on the larger element y makes Case (1) more likely to happen. At the same time, it makes sense that having one less penalty in the future is better or even.Notice that we can reach the same penalty with with different states, but we always prefer the state with larger x and larger y. If you imagine several case 2s and case 1s stacked together, we will always get a better or even result by greedily choosing IV. and VI. which is exactly by always appending to y for case 2.

Does anyone have any material on ReLU Segment Trees? I solved F1 (and I will try to do F2 as well) using a bit of a different segment tree than first solution and don't know if it is just the second solution (although I don't think it is). Thanks in advance

My F1 solution uses a ReLU segment tree. I wasn't able to adjust it to solve F2 in time though. I thought the problem was really terrible at first because it's an ugly mathy solution, but after seeing that the intended solution was optimized flow instead, I like the problem much more.

Do you know the link of the Problem C with K subsequences? Can you give me it? I really need it!

I updated the editorial tp have slightly more details about ReLU segment tree. Hope it helps!

Do you know the link of the Problem C with K subsequences? Can you give me it? I really need it!

Can one share the intuition behind the observation about remaining water = max suffix sum?

Great contest & fast editorial.

I'm glad that 2024 has had such a perfect start. Thank you!

Thank you for the contest! Best wish for 2024.

This contest is a perfect example of how to set and prepare rounds. Well done to the author and coordinator!

The tasks were pleasurable to solve, balancing math and algorithms. I thought that every problem was on point in terms of difficulty, quality, and their respective subjects (not every task was "constructive math"). Overall, the round seemed thoroughly tested and well-prepared.

I think this contest could've benefited from weaker samples on A and B. They're very proof-by-AC able.

Other than that, best contest I've ever had the joy of participating in.

I was not sure about C, so tried other approaches and when they all failed, then tried the above greedy approach and to my surprise it worked. Greedy is hard to prove!

Proof for D?

Can someone tell me what's wrong with my solution for F1?

240597499

Take a look at Ticket 17195 from CF Stress for a counter example.

so what is my mistake in code?

your idea is wrong a: 0 1 b: 1 0 answer is 0, but you output: min(sum(a), sum(b))

Thanks

I had the same problem. Why this idea is wrong?

I realized my mistake

so fast tutorial

dario2994 orz

F1 can also be solved by first consider D&C solution (maintain sum of A, sum of B, sum of answer for each node, when merge(L, R), try to match L.A with R.B as much as possible), then put this dp into segment tree.

greedy on D was quite unexpected

C and D is really hard.

Could someone help me figure out why I got Wrong Answer on C?

https://codeforces.me/contest/1919/submission/240597831

nice contest

The editorial proof of the greedy algorithm correctness in Problem $$$C$$$ is obviously not complete. It is not clear why the optimal splitting for a prefix coincides with the restriction of the optimal splitting of the whole array to this prefix, while this claim is implicitly used.

Does anybody understand a complete proof?

Is C been solved completely on intuition ?

I'm not sure what you mean by that. Why is this proof not complete? My understanding is that because it's a subsequence, and every element must be inserted into either array b or c, it is a complete proof.

The question is why if you have the optimal splitting of $$$[a_1, \dots, a_k]$$$ to subsequences $$$B$$$ and $$$C$$$, then the optimal splitting of $$$[a_1, \dots, a_k, a_{k+1}]$$$ can be obtained by back inserting of $$$a_{k+1}$$$ either into $$$B$$$ or into $$$C$$$. In general, the optimal splitting for $$$[a_1, \dots, a_k, a_{k+1}]$$$ may have nothing to do with $$$B$$$ and $$$C$$$.

The proof is written such that it looks like this fact is used, but may be I am just misunderstanding something.

I think the idea is that the split doesn't really matter. The only thing that matters is the final number in each array. So suppose that X and Y are the final two numbers in array A and B respectively, then regardless of any of the prior decisions for splitting the numbers, based solely on the values of X and Y we can determine whether a number should go into array A or B. You might argue that X and Y could have been chosen to be greater which might lead to a more optimal result, but the algorithm maximizes the value of X and Y in the first two cases, and in the third case the editorial lays out an argument for why it is at least just as good putting it in the other array.

Does this sound right?

If you do let me know, the same is the reason I wasn't able to give this greedy approach a try

I believe, the following was implied.

Assume that you have any splitting of $$$[a_1, \dots, a_k]$$$ to subsequences $$$(B, C)$$$, which can be extended to a splitting $$$(B', C')$$$ of the whole array $$$[a_1, \dots, a_n]$$$ with penalty $$$x$$$. Then the splitting $$$(\tilde{B}, \tilde{C})$$$ of $$$[a_1, \dots, a_k, a_{k+1}]$$$ constructed greedily from $$$(B, C)$$$ also can be extended to some other splitting $$$(\tilde{B}', \tilde{C}')$$$ of the whole array $$$[a_1, \dots, a_n]$$$ with the same penalty $$$x$$$ or less.

This works, and in order to prove it you need to construct these $$$(\tilde{B}', \tilde{C}')$$$ from $$$(\tilde{B}, \tilde{C})$$$, which is more or less done in the editorial.

I agree with you. The proof is not a standard greedy proof. It only says: when the splitting of the first k elements is fixed, we can obtain the optimal (optimal under this condition, not globally optimal) solution of the splitting of the first k+1 elements. Edit: Actually there is a mistake, when the first k elements are fixed, then out of all optimal splittings of the remaining n-k elements, it's always not worse to choose the splitting (in the editorial way) of the (k+1)th element, so it's always chosen that way.

Can you please elaborate on why optimal choices at each particular step eventually lead to optimal partitioning globally? Still don't understand :(

I'm guessing we should appreciate that there is not one unique optimal solution. The proof in editorial is a Greedy-stays-ahead(or catches up) argument and not an exchange arguments proof. So, if we consider the first point of difference between the optimal and the greedy. We have proved that the greedy isn't any worse. And for the rest of the sequence of moves in the optimal solution, I found that it is always possible directly append those moves to the greedy prefix from this point on without introducing extra penalty. And so now we move to the second point of difference and the same argument applies. Does it makes sense or am I stuck in circular reasoning ?

fast editorial, thx

good contest

Hey can anyone share their intuition for problem D , i didn't understand the idea from editorial , and would appreciate if anyone can share their stack based idea

For any element of the array, we need to make sure it can be absorbed into another element. This can only be done if eventually it can become adjacent to an element with value exactly one smaller than it.

How can we check this? Like in the solution, we can take the larger element and trivially check if that's the case (it can either have a minus one element directly adjacent to it or have elements with similar value on its left and right that eventually reach a minus one element). This way, all max elements are deleted. Sane thing can be done for batch of the next bigger elements, etc until we are done. Make sure than only a single 0 element is permitted initially.

Now, this process can be automated. By the above, it is enough to check that all the elements are eventually adjacent. To assure this, we need to make sure that, for every element, for the closest element to either the left or right that has a smaller value of our element, its value needs to be minus one and not less.

This works, because we make sure this condition is true for every element. As on the above analysis, we can use it to get rid of elements of progressively smaller value and all the bigger elements will be deleted. Then, this element will be ready to be absorbed by this chosen smaller target. Ofc, if this target has a smaller value, the absorption will fail and this element will stuck there forever, so no tree can be found in this case. You can work out some examples and make sure why a sequence of elements with same value doesn't impede this solution.

Here is where the stack idea comes. We can use stacks to solve this problem, like outlined here: https://www.geeksforgeeks.org/find-the-nearest-smaller-numbers-on-left-side-in-an-array. Do that for both directions and make sure that the element found on the stack is exactly one smaller.

Also, there should be exactly 1 zero in the array. Check that as well and you have the solution.

Consider "growing" the tree node by node. Consider the list of current leaves.

In one move, we can replace the subsegment of a single element [x] with [x, x+1] or [x+1, x]

In other words, choose any element x in the array, and insert to the left or right of it x+1.

Problem is now to find if the final array of leaves can be generated via these moves.

thank you , but I am sb

HELLO 2024. THANK YOU

For the problem C I used the greedy approach explained in this editorial but i did it in reverse. I iterated from N-1 to 0 and tried to add the element x to the array with the first largest element unless x is smaller than that but larger than the first smallest element of the two array. I implemented this but it gives WA, here is my submission: 240582496

Is it a solution error or an implementation error?

UPD: My bad, it was >= and not only > :(

Again ,C was too much for me

For problem c I tried to separate longest decreasing subsequence (indices) from all elements, then I tried to compute a[i]<a[i+1] for rest of the elements but couldn't pass. Can anyone explain why?

See this counter case

https://codeforces.me/blog/entry/124227?#comment-1104059

Thanks, can you tell me? If in a contest I start with a wrong approach how can I get around this wrong approach like this problem?

One of the options would be to stress-tests your solution (see: stress-testing on linux, stress-testing on windows).

I had a very different solution to D, I think.

Note that there must be exactly one leaf node with value $$$0$$$. Call this node $$$i$$$ and consider the path from the root to $$$i$$$. Then, for each node $$$v$$$ along this path, let $$$S_v$$$ be its subtree on the side not including $$$i$$$ (the other edge). Then, if we subtract $$$1$$$ from the distance for each node $$$j\in S_v$$$, this must also form a valid dfs ordering of distances.

This gives the following recursive definition: a height-$$$h$$$ tree has DFS ordering $$$[L, h, R]$$$ where $$$L$$$, $$$R$$$ are concatenations of height-$$$h + 1$$$ trees' DFS orderings.

For example, consider the first sample $$$[2, 1, 0, 1, 1]$$$. Then, $$$L=[2, 1]$$$ must be a concatenation of height $$$1$$$ trees and $$$R=[1, 1]$$$ must also be a concatenation of height $$$1$$$ trees.

Checking if $$$[2, 1]$$$ is a concatenation of height $$$1$$$ trees is the same as checking if $$$[1, 0]$$$ is a concatenation of height $$$0$$$ trees. Recursing gives that this is indeed valid.

Similarly, $$$[1, 1]$$$ being a concatenation of height $$$1$$$ trees is the same as checking if $$$[0, 0]$$$ is a concatenation of height $$$0$$$ trees (which it obviously is).

So, we can use Divide & Conquer to check if an array corresponds to a concatenation of height $$$h+1$$$ trees, by (for example) using a RMQ to find all positions with value $$$h + 1$$$ and recursing on their subranges. We also check that the root only has one $$$0$$$.

Overall, the code looks like this:

Since we look at each position as a root exactly once (utilizing the RMQ for this speedup, or some sort of binary search structure), the runtime is $$$O(n\log n)$$$ (coming from building the RMQ).

My code: 240589892

I too have done same, but our solution can actually be O(n). positions just keep increasing for a value. 240578953

Teja-Smart your TLE solution got accepted by applying lower_bound on set by diffrent way 240770136

lol, I never knew it would differ like that

If ai<x insert ai to the back of the array with a smaller last element. If y<ai , insert ai to the back of the array with a smaller last element. If x<ai<=y , insert ai to the back of the array with a bigger last element.

Can someone help with an example here. Which array are they mentioning

Arrays = subsequences here

The sample of D was too weak..

Finally, decent contest! Could've given more examples in D though:(

I think this approach works for C but I couldn't figure out how to implement it efficiently during the contest:

For example, for this approach you would get [8, 8, 9], then since no number is >= 9, you would start from the next number which is 0, and get [0, 5]. So one array is [5,0,9,9,8] (the elements we saw in this process, but reversed since we reversed the array to do this), and the other one is the remaining elements.

Question: is it possible to implement something like this efficiently (even if it is not correct for all cases of C?) I tried to use a set of pairs with {value, index} and finding the lower_bound of the current pair, but couldn't figure out a sorting function to get the exact behavior I wanted. Thanks!

Hi ,