Hello, Codeforces!

1. Recently, adamant published an excellent blog, "Stirling numbers with fixed n and k", in which he described an approach to them via generating functions.

In this (messy) blog, I want to share one useful technique, using Stirling numbers of the first kind as the example.

2. We start with the definition: the Stirling transformation of a sequence $$$(x_n)$$$ is the mapping $$$(x_n) \mapsto (b_n)$$$ given by

$$$b_n = \sum_{k=0}^{n} s(n,k)\, x_k,$$$

where $$$s(n,k)$$$ is a first-kind Stirling number. There is room for flexibility here: we can choose any other function $$$f(n,k)$$$ instead of Stirling numbers, though it only sometimes turns out to be useful.
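A minimal sketch of the transformation with a pluggable kernel, assuming the mapping has the form $$$b_n = \sum_{k=0}^{n} f(n,k)\,x_k$$$ and that $$$s(n,k)$$$ means the unsigned first-kind Stirling numbers. The helper names `stirling1_unsigned` and `kernel_transform` are illustrative and not part of the blog.

```python
from functools import lru_cache
from math import comb, factorial


@lru_cache(maxsize=None)
def stirling1_unsigned(n, k):
    """Unsigned first-kind Stirling number: permutations of n elements with k cycles."""
    if n == 0 and k == 0:
        return 1
    if n == 0 or k == 0 or k > n:
        return 0
    # Element n either joins one of the existing cycles (n - 1 positions)
    # or forms a new cycle on its own.
    return (n - 1) * stirling1_unsigned(n - 1, k) + stirling1_unsigned(n - 1, k - 1)


def kernel_transform(x, f):
    """Return b with b[n] = sum_{k=0}^{n} f(n, k) * x[k] (assumed form of the mapping)."""
    return [sum(f(n, k) * x[k] for k in range(n + 1)) for n in range(len(x))]


ones = [1] * 7
stirling_of_ones = kernel_transform(ones, stirling1_unsigned)  # Stirling kernel
binomial_of_ones = kernel_transform(ones, comb)                # binomial kernel
```

Under these assumptions, the constant sequence is mapped to $$$n!$$$ by the Stirling kernel and to $$$2^n$$$ by the binomial kernel, which illustrates how swapping $$$f(n,k)$$$ changes the transformation.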

3. The essence of this method is to find the transition formulae between the Stirling transformations of a sequence $$$(x_n)$$$ and of the sequence of its finite differences $$$(y_n)$$$ (one can think of it as a discrete analogue of the derivative), defined by

$$$y_n = x_{n+1} - x_n.$$$

Familiarity with finite differences is highly recommended for readers of this blog.

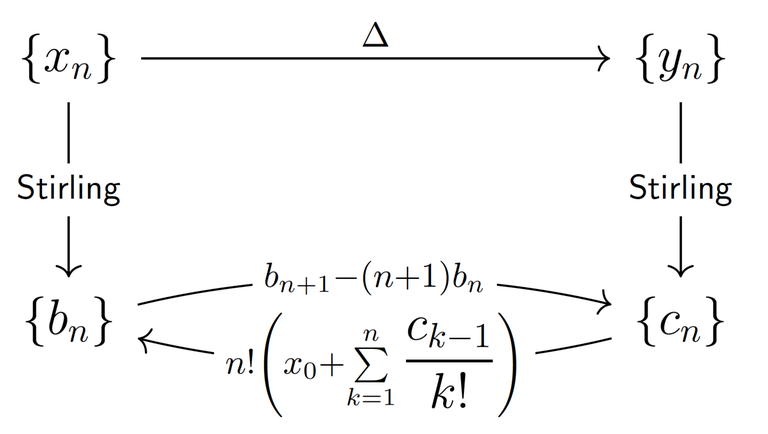

Theorem 1 (Transition formulae). In fact, the following transition formulae hold:

Step 1. How to work with finite differences?

Theorem 2 (solving a first-order linear difference equation). Let $$$(y_k)$$$ be a sequence that satisfies the recurrence relation

where $$$(p_k)$$$ and $$$(q_k)$$$ are given sequences with $$$p_i \neq 0$$$ for all $$$i$$$. Then the solution to this equation is given by

Step 2. Proof of Theorem 1.

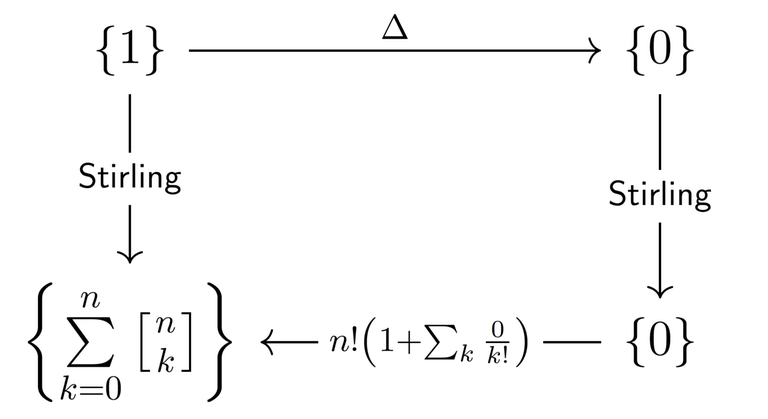
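The two theorems can be sanity-checked numerically. The sketch below rests on assumptions not spelled out in the text above: that the recurrence in Theorem 2 has the form $$$y_{k+1} = p_k y_k + q_k$$$, that the Stirling numbers are unsigned, and that the forward transition formula reads $$$c_n = b_{n+1} - (n+1) b_n$$$, where $$$b$$$ and $$$c$$$ are the Stirling transformations of $$$(x_n)$$$ and of its finite differences. All function names are hypothetical.

```python
from fractions import Fraction


def solve_first_order(p, q, y0):
    """Closed form for y_{k+1} = p_k * y_k + q_k, all p_k != 0 (assumed form).

    y_n = P_n * (y_0 + sum_{j<n} q_j / P_{j+1}),  with P_n = p_0 * ... * p_{n-1}.
    """
    ys = [Fraction(y0)]
    prod = Fraction(1)    # running product P_n
    acc = Fraction(y0)    # y_0 plus the partial sum of q_j / P_{j+1}
    for pk, qk in zip(p, q):
        prod *= pk
        acc += Fraction(qk) / prod
        ys.append(prod * acc)
    return ys


def stirling1_table(n_max):
    """Table of unsigned first-kind Stirling numbers c[n][k] for n <= n_max."""
    c = [[0] * (n_max + 2) for _ in range(n_max + 1)]
    c[0][0] = 1
    for n in range(n_max):
        for k in range(1, n + 2):
            c[n + 1][k] = n * c[n][k] + c[n][k - 1]
    return c


def stirling_transform(x, table):
    """b[n] = sum_{k=0}^{n} c(n, k) * x[k]."""
    return [sum(table[n][k] * x[k] for k in range(n + 1)) for n in range(len(x))]


x = [3, 1, 4, 1, 5, 9, 2, 6]                       # arbitrary test sequence
y = [x[n + 1] - x[n] for n in range(len(x) - 1)]   # finite differences
table = stirling1_table(len(x))
b = stirling_transform(x, table)
c = stirling_transform(y, table)

# Forward direction: c_n = b_{n+1} - (n + 1) * b_n.
forward_ok = all(c[n] == b[n + 1] - (n + 1) * b[n] for n in range(len(y)))

# Inverse direction: recover b from c by solving b_{n+1} = (n + 1) * b_n + c_n.
rebuilt = solve_first_order([n + 1 for n in range(len(y))], c, b[0])
inverse_ok = rebuilt == b
```

The inverse direction is where the condition $$$p_i \neq 0$$$ matters: here $$$p_n = n+1$$$, so the running product is $$$n!$$$ and the division is always valid.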

4. Using these transition formulae we can derive countless identities with Stirling numbers of the first kind (as well as identities involving binomial coefficients and other interesting combinatorial functions, as pointed out in 2). For example, taking $$$x_n = 1$$$, we obtain the following nice identity

visualised by the diagram

5. A nontrivial and interesting example. We exploit the combinatorial nature of $$$s(n,k)$$$ to write down the average number of cycles in a random permutation.

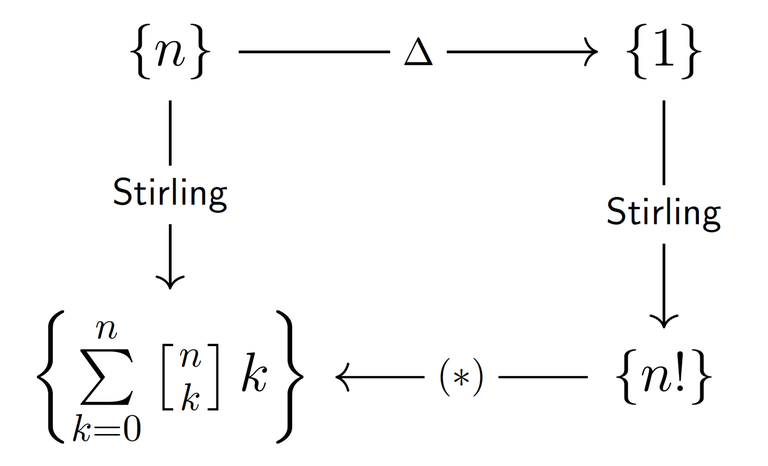

We can easily find the sum in the numerator by applying Theorem 1 to the sequence $$$x_n = n$$$.

where $$$H_n = 1 + 1/2 + \dots + 1/n$$$ is the $$$n$$$-th harmonic number. Hence $$$\mathbf{E}[\text{cycles}]=H_n=O(\log n)$$$, a very nice result!
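A brute-force check of this result for small $$$n$$$, as a sketch: it enumerates all permutations, counts cycles directly, and compares the exact average with $$$H_n$$$. The function names are illustrative only.

```python
from fractions import Fraction
from itertools import permutations
from math import factorial


def count_cycles(perm):
    """Number of cycles of a permutation given as a tuple mapping i -> perm[i]."""
    seen = [False] * len(perm)
    cycles = 0
    for start in range(len(perm)):
        if not seen[start]:
            cycles += 1
            j = start
            while not seen[j]:   # walk the cycle containing `start`
                seen[j] = True
                j = perm[j]
    return cycles


def harmonic(n):
    """Exact n-th harmonic number H_n as a Fraction."""
    return sum(Fraction(1, i) for i in range(1, n + 1))


def average_cycles(n):
    """Exact expected number of cycles of a uniformly random permutation of size n."""
    total = sum(count_cycles(p) for p in permutations(range(n)))
    return Fraction(total, factorial(n))


checks = {n: average_cycles(n) == harmonic(n) for n in range(1, 8)}
```

Exact rational arithmetic is used so that the comparison with $$$H_n$$$ is not affected by floating-point rounding.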

6. We generalise 4 and 5 by considering the sequence $$$x_n = n^k$$$ for a fixed $$$k$$$. From adamant's blog (closely connected with this section), we know that first-kind Stirling numbers behave much better with falling factorials. So, in order to find a good formula for the Stirling transformation of $$$n^k$$$, we first find it for $$$(n)_k$$$ and then apply

and derive the desired formula.

The details are left to the reader; here is the outline.

Step 1. Prove the identity $$$\sum_{j=0}^{N} \frac{s(j,k)}{j!} = \frac{s(N+1,k+1)}{N!}$$$ using double induction and the Pascal-like identity (Lemma 1).

Step 2. Apply Theorem 1 to $$$x_n=(n)_k$$$ (for fixed $$$k$$$) and, using Step 1, prove that

Step 3. Finally, prove that

The last formula is obviously practical.
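The outline can be checked numerically. Step 1 is tested exactly as stated. The formulas for Steps 2 and 3 are not shown above, so the sketch below assumes they take the standard forms $$$\sum_n s(N,n)\,(n)_k = k!\, s(N+1,k+1)$$$ and $$$\sum_n s(N,n)\, n^k = \sum_j S(k,j)\, j!\, s(N+1,j+1)$$$, with unsigned $$$s$$$ and second-kind numbers $$$S$$$; treat that as an assumption rather than the author's exact statement.

```python
from fractions import Fraction
from math import factorial

N_MAX = 8

# c1[n][k]: unsigned first-kind, s2[n][k]: second-kind Stirling numbers.
c1 = [[0] * (N_MAX + 2) for _ in range(N_MAX + 2)]
s2 = [[0] * (N_MAX + 2) for _ in range(N_MAX + 2)]
c1[0][0] = s2[0][0] = 1
for n in range(N_MAX + 1):
    for k in range(1, n + 2):
        c1[n + 1][k] = n * c1[n][k] + c1[n][k - 1]
        s2[n + 1][k] = k * s2[n][k] + s2[n][k - 1]


def falling(n, k):
    """Falling factorial (n)_k = n * (n - 1) * ... * (n - k + 1)."""
    result = 1
    for i in range(k):
        result *= n - i
    return result


def step1_holds(N, k):
    lhs = sum(Fraction(c1[j][k], factorial(j)) for j in range(N + 1))
    return lhs == Fraction(c1[N + 1][k + 1], factorial(N))


def step2_holds(N, k):
    # Assumed form of the Step 2 formula.
    lhs = sum(c1[N][n] * falling(n, k) for n in range(N + 1))
    return lhs == factorial(k) * c1[N + 1][k + 1]


def step3_holds(N, k):
    # Assumed form of the Step 3 formula, via n^k = sum_j S(k, j) * (n)_j.
    lhs = sum(c1[N][n] * n ** k for n in range(N + 1))
    rhs = sum(s2[k][j] * factorial(j) * c1[N + 1][j + 1] for j in range(k + 1))
    return lhs == rhs


all_ok = all(
    step1_holds(N, k) and step2_holds(N, k) and step3_holds(N, k)
    for N in range(N_MAX + 1)
    for k in range(N_MAX + 1)
)
```

If the assumed form of Step 3 is the intended one, its right-hand side has only $$$k+1$$$ terms, so for small fixed $$$k$$$ it is much cheaper to evaluate than the sum over all $$$n$$$.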

7 (Bonus).

Theorem 3 (transition between two kinds of Stirling numbers). Let $$$(a_n)$$$ be a sequence and

be its second-kind Stirling transform. Then

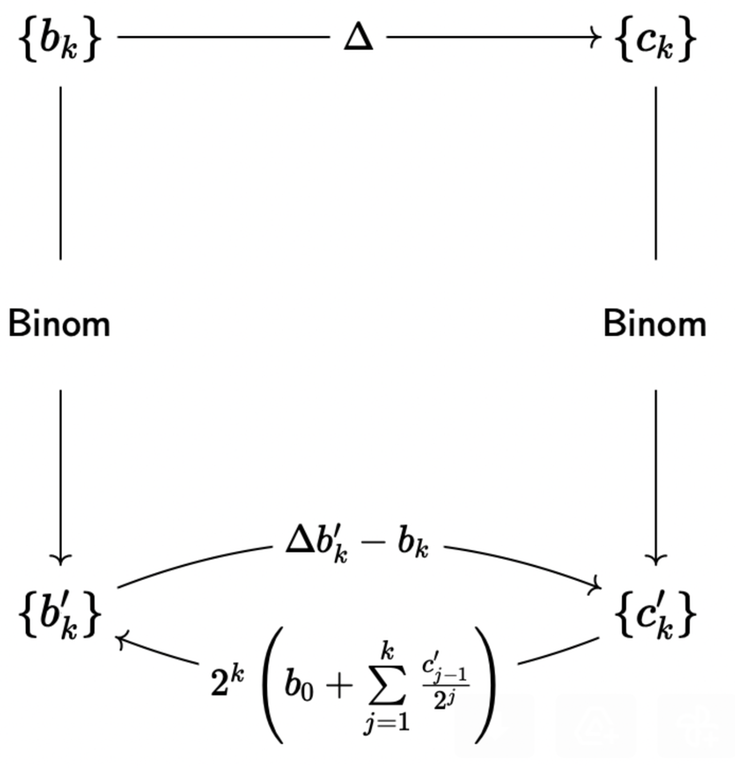

8 (Remark). I mentioned twice that this method can be generalised. For example, for the binomial transformation

the main theorem will be the following

Using a similar strategy as in 6, we can prove that

Is this based on this article? Quite nice presentation! Yet, I generally don't like direct work with coefficients, so I tried to understand e.g. what formulas for binomial transformation and finite differences mean in terms of EGF.

So, the binomial transformation is equivalent to multiplying EGF $$$A(x)$$$ with $$$e^x$$$:

And finite differences, defined as $$$a_n \mapsto a_{n+1} - a_n$$$, correspond to the transform on EGF:

So, the binomial transform of finite differences is $$$e^x( A' - A)$$$, while the binomial transform of the initial sequence is $$$e^x A$$$.

To express the binomial transform of finite differences through the binomial transform of original sequence, we notice

This means that the transform from $$$b_k'$$$ to $$$c_k'$$$ should, indeed, be $$$\Delta b_k' - b_k'$$$ (I assume $$$\Delta b_k' - b_k$$$ is a typo).
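A quick numeric check of this conclusion, as a sketch: writing $$$b$$$ for the binomial transform of a sequence $$$a$$$ and $$$c$$$ for the binomial transform of its finite differences (plain names are used here instead of the primed ones), the relation $$$c_n = \Delta b_n - b_n = b_{n+1} - 2 b_n$$$ should hold. The function names are illustrative.

```python
from math import comb


def binomial_transform(a):
    """b[n] = sum_{k=0}^{n} C(n, k) * a[k]."""
    return [sum(comb(n, k) * a[k] for k in range(n + 1)) for n in range(len(a))]


def differences(a):
    """Finite differences a[n + 1] - a[n]."""
    return [a[n + 1] - a[n] for n in range(len(a) - 1)]


a = [2, 7, 1, 8, 2, 8, 1, 8]                 # arbitrary test sequence
b = binomial_transform(a)
c = binomial_transform(differences(a))
delta_b = differences(b)

# c_n = (Delta b)_n - b_n, which is the EGF statement C = B' - 2B.
matches = all(c[n] == delta_b[n] - b[n] for n in range(len(c)))
```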

Unfortunately, I don't have any clear ideas right now on how to do something similar with Stirling numbers, or even how to derive the inverse formulas or interpret them in terms of genfuncs...

Ok, I see why the inverse transform is messy. So, we know that

How to get $$$G(x)$$$ from known $$$F(x)$$$? Well, it's just a linear diffeq, so there is a formula:

It probably yields the same result, but there is an easier way to solve it. Let $$$A(x)$$$ and $$$B(x)$$$ be the ordinary genfuncs of the sequences for which $$$F(x)$$$ and $$$G(x)$$$ are the exponential genfuncs. Differentiation in EGFs translates to OGFs as

so we have

which gives the same conversion formula between $$$b'_k$$$ and $$$c'_k$$$.

Now, for the Stirling transform, Wikipedia suggests that a proper representation in terms of EGFs is

I guess, it makes sense, as

given that $$$\frac{[\log(1+x)]^k}{k!}$$$ is the EGF for $$$s(n, k)$$$ with fixed $$$k$$$. So, the Stirling transform of finite differences is

Using $$$[A(\log(1+x))]' = \frac{A'(\log(1+x))}{1+x}$$$, we can re-express it as a function of $$$A(\log(1+x))$$$ as

Note that it corresponds to transition formula $$$c_n = b_{n+1} + (n-1) b_n$$$ instead of $$$c_n = b_{n+1} - (n+1) b_n$$$.

This is because for signed Stirling numbers $$$s(n, k)$$$ the transition formula is

instead of

which was used in the blog.
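Both conventions can be compared side by side. The sketch below assumes the two recurrences in question are $$$s(n+1,k) = s(n,k-1) - n\,s(n,k)$$$ (signed) and $$$s(n+1,k) = s(n,k-1) + n\,s(n,k)$$$ (unsigned), and encodes them with a hypothetical `sign` parameter; the resulting transition formula is then $$$c_n = b_{n+1} - (1 + \text{sign}\cdot n)\, b_n$$$.

```python
def stirling1_table(n_max, sign):
    """First-kind Stirling numbers via s(n+1,k) = sign * n * s(n,k) + s(n,k-1).

    sign=+1 gives the unsigned numbers, sign=-1 the signed ones.
    """
    s = [[0] * (n_max + 2) for _ in range(n_max + 1)]
    s[0][0] = 1
    for n in range(n_max):
        for k in range(1, n + 2):
            s[n + 1][k] = sign * n * s[n][k] + s[n][k - 1]
    return s


def transition_holds(x, sign):
    """Check c_n = b_{n+1} - (1 + sign * n) * b_n for the chosen convention."""
    y = [x[n + 1] - x[n] for n in range(len(x) - 1)]   # finite differences
    s = stirling1_table(len(x), sign)
    b = [sum(s[n][k] * x[k] for k in range(n + 1)) for n in range(len(x))]
    c = [sum(s[n][k] * y[k] for k in range(n + 1)) for n in range(len(y))]
    return all(c[n] == b[n + 1] - (1 + sign * n) * b[n] for n in range(len(y)))


x = [1, 6, 1, 8, 0, 3, 3, 9]                 # arbitrary test sequence
unsigned_ok = transition_holds(x, +1)        # c_n = b_{n+1} - (n + 1) * b_n
signed_ok = transition_holds(x, -1)          # c_n = b_{n+1} + (n - 1) * b_n
```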