We hope you enjoyed the contest!. Thank you for your participation! Do vote under the Feedback section, and feel free to give your review of the problems in the comments.

1777A - Everybody Likes Good Arrays!

Idea: ShivanshJ

Preparation: ShivanshJ

Editorialist: ShivanshJ

Try to make the problem simpler.

Parity?

Try replacing even numbers with $$$0$$$ and odd numbers with $$$1$$$ in other words consider all numbers modulo $$$2$$$.

Think harder! It works!

/* Enjoying CP as always!*/

#include <bits/stdc++.h>

using namespace std;

#define int long long

signed main() {

ios_base::sync_with_stdio(false);

cin.tie(NULL);

int t;

cin>>t;

while(t--) {

//Take input

int n;

cin>>n;

int a[n];

for(int i=0;i<n;i++) {

cin>>a[i];

}

//initialize answer..

int ans=0;

for(int i=0;i+1<n;i++) {

ans+=(!((a[i]^a[i+1])&1));

/*XOR the two numbers and check 0th bit in the resultant, if it is 1

then, numbers are of different parity, otherwise both are of same parity*/

}

cout<<ans<<"\n";

}

return 0;

}

def main():

T = int(input())

while T > 0:

T = T - 1

n = int(input())

a = [int(x) for x in input().split()]

ans = 0

for i in range(1, n):

ans += 1 - ((a[i] + a[i - 1]) & 1)

print(ans)

if __name__ == '__main__':

main()

Idea: TimeWarp101 quantau

Preparation: TimeWarp101

Editorialist: TimeWarp101

Will the answer differ for different permutations?

If you only look at 2 elements, how much will they contribute to the answer?

#include<bits/stdc++.h>

using namespace std;

#define int long long

signed main()

{

const int N = 1e5 + 5;

const int mod = 1e9 + 7;

vector<int> fact(N);

fact[0] = 1;

for(int i = 1; i < N; i++)

{

fact[i] = fact[i - 1] * i;

fact[i] %= mod;

}

int t;

cin >> t;

while(t--)

{

int n;

cin >> n;

int ans = n * (n - 1);

ans %= mod;

ans = (ans * fact[n]) % mod;

cout << ans << endl;

}

return 0;

}

t = int(input())

for _ in range(t):

n = int(input())

nf = 1

mod = int(1e9 + 7)

for i in range(n):

nf = nf * (i + 1)

nf %= mod

ans = n * (n - 1) * nf

ans %= mod

print(ans)

Idea: quantau

Preparation: TimeWarp101 quantau

Editorialist: TimeWarp101

Would sorting the array help?

Would iterating over the factors help?

If the optimal team has students with maximum smartness $$$M$$$ and minimum smartness $$$m$$$, would having students with smartness $$$X$$$ such that $$$m \le X \le M$$$ the answer will not change.

Two pointers?

#include <bits/stdc++.h>

#define all(v) v.begin(), v.end()

#define var(x, y, z) cout << x << " " << y << " " << z << endl;

#define ll long long int

#define pii pair<ll, ll>

#define pb push_back

#define ff first

#define ss second

#define FASTIO \

ios ::sync_with_stdio(0); \

cin.tie(0); \

cout.tie(0);

using namespace std;

const ll inf = 1e17;

const ll MAXM = 1e5;

vector<ll> factors[MAXM + 5];

void init()

{

for (ll i = 1; i <= MAXM; i++)

{

for (ll j = i; j <= MAXM; j += i)

{

factors[j].pb(i);

}

}

}

void solve()

{

ll n, m;

cin >> n >> m;

vector<pii> vec;

for (ll i = 0; i < n; i++)

{

ll value;

cin >> value;

vec.pb({value, i});

}

sort(all(vec));

vector<ll> frequency(m + 5, 0);

ll curr_count = 0;

ll j = 0;

ll global_ans = inf;

for (ll i = 0; i < n; i++)

{

for (auto x : factors[vec[i].ff])

{

if (x > m)

break;

if (!frequency[x]++)

{

curr_count++;

}

}

while (curr_count == m)

{

ll curr_ans = vec[i].ff - vec[j].ff;

if (curr_ans < global_ans)

{

global_ans = curr_ans;

}

for (auto x : factors[vec[j].ff])

{

if (x > m)

break;

if (--frequency[x] == 0)

{

curr_count--;

}

}

j++;

}

}

cout << (global_ans >= inf ? -1 : global_ans) << "\n";

}

int main()

{

FASTIO

init();

ll t;

cin >> t;

while (t--)

{

solve();

}

return 0;

}

Idea: AwakeAnay

Preparation: mayankfrost ShivanshJ

Editorialist: AwakeAnay

What would be the value of a node at time $$$t$$$?

The value of a node $$$u$$$ after time $$$t$$$ would be the xor of the initial values of all nodes in the subtree of $$$u$$$ which are at a distance $$$t$$$ from $$$u$$$.

What is the expected value of xor of $$$k$$$ boolean values?

#include <bits/stdc++.h>

using namespace std;

#define MOD 1000000007

long long power(long long a, int b)

{

long long ans = 1;

while (b)

{

if (b & 1)

{

ans *= a;

ans %= MOD;

}

a *= a;

a %= MOD;

b >>= 1;

}

return ans;

}

int DFS(int v, vector<int> edges[], int p, int dep, int ped[])

{

int mdep = dep;

for (auto it : edges[v])

if (it != p)

mdep = max(DFS(it, edges, v, dep + 1, ped), mdep);

ped[v] = mdep - dep + 1;

return mdep;

}

int main()

{

ios_base::sync_with_stdio(false);

cin.tie(NULL);

int T, i, j, n, u, v;

cin >> T;

while (T--)

{

cin >> n;

vector<int> edges[n];

for (i = 0; i < n - 1; i++)

{

cin >> u >> v;

u--, v--;

edges[u].push_back(v);

edges[v].push_back(u);

}

int ped[n];

DFS(0, edges, 0, 0, ped);

long long p = power(2, n - 1), ans = 0;

for (i = 0; i < n; i++)

{

ans += p * ped[i] % MOD;

ans %= MOD;

}

cout << ans << "\n";

}

}

Idea: Crocuta

Preparation: mayankfrost

Editorialist: mayankfrost

If the cost is c, all edges with weight less than or equal to c are reversible.

If an edge can be reversed, can it be treated as bidirectional?

Let there exist a set of possible starting nodes. If this set is non empty, the highest node h in the topological ordering of nodes will always be present in the set. Think why.

#include <bits/stdc++.h>

using namespace std;

// Using Kosa Raju, we guarantee the topmost element (indicated by root) of stack is from the root SCC

void DFS(int v, bool visited[], int &root, vector<int> edges[])

{

visited[v] = true;

for (auto it : edges[v])

if (!visited[it])

DFS(it, visited, root, edges);

root = v;

}

int cnt(int v, bool visited[], vector<int> edges[])

{

int ans = 1;

visited[v] = true;

for (auto it : edges[v])

if (!visited[it])

ans += cnt(it, visited, edges);

return ans;

}

int main()

{

ios_base::sync_with_stdio(false);

cin.tie(NULL);

int T;

cin >> T;

while (T--)

{

int i, j, n, m, u, v, w;

cin >> n >> m;

vector<pair<int, int>> og_edges[n];

for (i = 0; i < m; i++)

{

cin >> u >> v >> w;

u--, v--;

og_edges[u].push_back({v, w});

}

int l = -1, r = 1e9 + 1, mid;

while (r - l > 1)

{

mid = l + (r - l) / 2;

vector<int> edges[n];

for (i = 0; i < n; i++)

{

for (auto it : og_edges[i])

{

edges[i].push_back(it.first);

if (it.second <= mid)

edges[it.first].push_back(i);

}

}

bool visited[n] = {};

int root;

for (i = 0; i < n; i++)

{

if (!visited[i])

DFS(i, visited, root, edges);

}

memset(visited, false, sizeof(visited));

if (cnt(root, visited, edges) == n)

r = mid;

else

l = mid;

}

if (r == 1e9 + 1)

r = -1;

cout << r << '\n';

}

return 0;

}

Idea: Crocuta

Preparation: TimeWarp101

Editorialist: Crocuta

Can we somehow fix the maximum element ?

To calculate the answer over all subarrays with the same maximum element, can we use the trie trick for calculating the maximum xor.

#include <bits/stdc++.h>

using namespace std;

struct Trie{

struct Trie *child[2]={0};

};

typedef struct Trie trie;

void insert(trie *dic, int x)

{

trie *temp = dic;

for(int i=30;i>=0;i--)

{

int curr = x>>i&1;

if(temp->child[curr])

temp = temp->child[curr];

else

{

temp->child[curr] = new trie;

temp = temp->child[curr];

}

}

}

int find_greatest(trie *dic, int x) {

int res = 0;

trie *temp = dic;

for(int i=30;i>=0;i--) {

int curr = x>>i&1;

if(temp->child[curr^1]) {

res ^= 1<<i;

temp = temp->child[curr^1];

}

else {

temp = temp->child[curr];

}

}

return res;

}

int main() {

int test_cases;

cin >> test_cases;

while(test_cases--)

{

int n;

cin>>n;

int a[n+1];

for(int i=1;i<=n;i++) {

cin>>a[i];

}

trie *t[n+2];

int prexor[n+1];

prexor[0] = 0;

for(int i=1;i<=n;i++) {

t[i] = new trie;

insert(t[i], prexor[i-1]);

prexor[i] = prexor[i-1]^a[i];

}

t[n+1] = new trie;

insert(t[n+1], prexor[n]);

pair<int,int> asc[n+1];

for(int i=1;i<=n;i++) {

asc[i] = make_pair(a[i],i);

}

sort(asc+1,asc+n+1);

int left[n+1], right[n+1];

stack<int> s;

for(int i=1;i<=n;i++) {

while(!s.empty() && a[i]>=a[s.top()])

s.pop();

if(s.empty())

left[i] = 0;

else

left[i] = s.top();

s.push(i);

}

while(!s.empty())

s.pop();

for(int i=n;i>0;i--) {

while(!s.empty() && a[i]>a[s.top()])

s.pop();

if(s.empty())

right[i] = n+1;

else

right[i] = s.top();

s.push(i);

}

int ans = 0;

for(int i=1;i<=n;i++) {

int x = asc[i].second;

int r = right[x]-1;

int l = left[x]+1;

if(x-l < r-x) {

for(int j=l-1;j<x;j++) {

ans = max(ans, find_greatest(t[x+1], prexor[j]^a[x]));

}

t[l] = t[x+1];

for(int j=l-1;j<x;j++) {

insert(t[l], prexor[j]);

}

}

else {

for(int j=x;j<=r;j++) {

ans = max(ans, find_greatest(t[l], prexor[j]^a[x]));

}

for(int j=x;j<=r;j++) {

insert(t[l], prexor[j]);

}

}

}

cout<<ans << endl;

}

}

Auto comment: topic has been updated by ShivanshJ (previous revision, new revision, compare).

Auto comment: topic has been updated by ShivanshJ (previous revision, new revision, compare).

Auto comment: topic has been updated by ShivanshJ (previous revision, new revision, compare).

lol didn't read

The time complexity allowed for problem C is too high

We had initially thought of keeping it tighter but after some discussions we decided that it wont be be a good idea to let $$$n * \sqrt[2]{A}$$$ not pass, but $$$n * \sqrt[3]{A}$$$ pass.

how to achieve O(n*A^(1/3))?

The maximum factors of a number $$$A$$$ are bounded by $$$\sqrt[3]{A}$$$. Discussion for the same.

Using some precalculation methods you can store factors for all possible smartness values as $$$n$$$ was capped at $$$10^5$$$. Refer the implementation for the same.

thanks!

I do not quite understand this, are you saying that it is possible to enumerate all factors in $$$^3\sqrt{x}$$$ time? Can you tell how?

[DELETED]

The statement clearly say that:

It is guaranteed that the sum of n over all test cases does not exceed 10^5.

I passed with time complexity $$$O(n\log n \sqrt A)$$$.

I used Segment Tree and passed with time complexity $$$O(128 n \log m)$$$.

Same, shouldn't have passed, code breaks an test

1

100000 100000

83160 100000 times

They just didn't include that test, which allowed many wrong submissions to pass, not only segtrees, but also some people used maps, which results in same complexity.

It is posible to fit this code in TL, the person's above code runs in 2550ms, mine in 2170 ms (without I/O), so with small bit optimizations on segtree I got 2022ms (also without I/O), if improved more, this should pass.

When systesting?

The TL on F is stupidly high, a simple $$$O(n^2)$$$ passed: 190000292

Hacked

Optimized it, I wanna know if you could hack this one: 190039238

Also I think it's kind of wrong that people started downvoting my comment, me being hacked doesn't change the fact that TL is absurdly big when only $$$O(nlognlogA)$$$ was intended in the first place.

what does that "tune=native" do ?

Auto comment: topic has been updated by ShivanshJ (previous revision, new revision, compare).

idk why i was stuck on B. I knew the final product needed for the answer, but it was failing pretest 3

same i dont know why

If you change n and m to long long it works, i guess because you are combining long long and int you should go for all long long but I´m not completely sure.

it worked thanks,I read 10^5 as 10^4 in the range of n and tested 10^4 and thought declaring m long long shouldn't be a problem fm.

I had the same problem as you. When n = 10^5, n*(n-1) overflows an integer. So n also has to be a long long.

The editorial of #845 is earlier than #844

Or there will be no editorial of #844

Oh please stop spamming everywhere, You have commented in most of the blogs in recrnt months, Instead of spamming go and find some friends to talk with them

lol, don't assume we have friends.

In problem A,you could just simply check consecutive elements whether they were even or odd.The count of consecutive even or odd pairs or both would be the result._

My solve for C barely passed, 1996 ms. Thought I had to binary search for length.

Lucky! Can you share your idea?

Similar to the solve in the tutorial, I kept a frequency of how many factors appeared and I binary searched to what length to keep it. If the length is greater than the series, it fails.

Any advice on How to master number theory..I Struck in "C" today.(How to think in the mathematical sense for the problem).

can anyone tell me what i did wrong in C?

Bro,You have to minimize the answer.Which you have notdone.

I have sorted the smartness array in reverse, so the answer is the maximum value possible for each number,how can i further minimize it?

I managed to hack my own code after the contest, feels bad to get accepted after this...

A good Spring Festival gift, but a stupid me. ):

It's such a pity that I watched the Spring Festival Gala and failed to solve E in time.

In E instead of topological sort, you can just check the in degree of each scc. If there are more than one scc with in degree == 0 then graph is not reachable.

I'm not sure if I got your point right, but I guess you're saying that if there's more than one node with indegree equal to zero in the scc graph, then there is no answer, if so consider the following test:

3 2

1 2 1

3 2 2

you can make node one reach every node by reversing the second edge (3 2 2) could you clarify a bit more ?

No I was talking about the routine inside binary search. You make all edges with weights less than or equal to mid double sided. Then you make SCC and if there are more than one nodes with in degree 0 then not possible for that value of mid.

Aha it's clear now. Thanks !

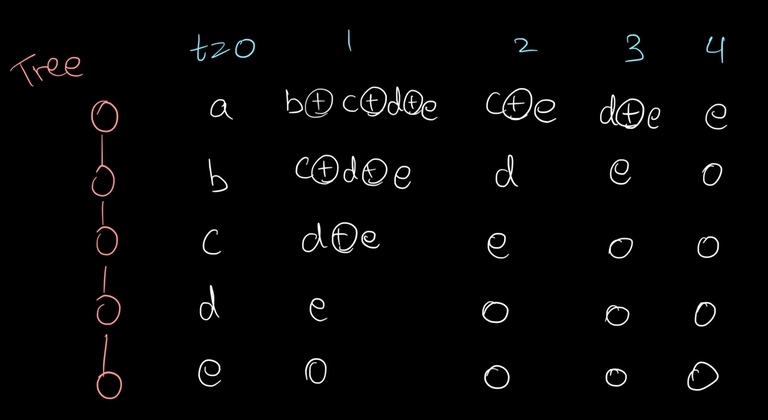

There is a right bracket missing in the solution of Problem D. The location is just as the picture shows.

In the contest I wrote such an algorithm below for F: for every index I,find the interval that a_i can be the largest element in any subarray of it,which can be solved simply in O(n) complexity. Then just calculate the answer for every I using 01trie.The total complexity is O($\sum len(interval)$log n). If the value of a_i are distinct,the complexity is O(nlogn),which is acceptable.However,if there are same elements---for example a_i are same for every I,the complexity would be O(n^2) or higher.

However,the algorithm passed both the pretest and the system test.Isn't the data too weak?

This algorithm could be improved by finding the interval that a_i is the leftest largest element in any subarray.

I don't understand. Won't that be O(n^2) even when the elements are distinct. Like, consider when the given array is in sorted order.

$$$a_i = i$$$

$$$l_i = 0$$$ and $$$r_i = i+1$$$, $$$len_i = i$$$, $$$\sum len_i = O(n^2)$$$.

To my attitude , it's too standard. If you understand Chinese (or use translation softwares), you can see that the last two problems are just constructed with some standard algorithms(E: https://www.luogu.com.cn/problem/P3387 and F: https://www.luogu.com.cn/problem/P4735) . The fact that they weren't as hard as usual caused the ranking to seem a little strange. And I think if you replace E or F with harder or more interesting problems, then this round will be much better.

Super fast editorials (: And very interesting problems.

Am I correct in saying the complexity of my solution for C is $$$O(a \ln m \cdot \ln(a \ln m) + m)$$$? It TLEs in 190006029 when I just use $$$a = 10^5$$$ but passes in 190008245 when I use compute up to the max $$$a_i$$$ from the input.

I thought about a harder version of problem D during the contest —

At any integer time t>0 , the value of a node becomes the bitwise XOR of the values of all children (all nodes below it) at time t−1.

How to solve this ?

I'm guessing that the solution would be the exact same. That the node would have a value of 1 approximately half the time. Not sure though :/

well you could look at it this way that, let say the grandchildren becomes zero, it means they don't affect the children and we go back to the same case as was mentioned in the original question. Now another case is that the value of grandchildren matters , but you can see that the great grand children will become zero 1 step before, leaving children's value to be dependant on the grandchildren only and not the nodes below that. This way we can conclude that the answer would not change and probability of 0 or 1 will still remain (1/2).

Correct me if I am wrong :)

I mean, I'm not sure it's EXACTLY the same. You mentioned that after the leaves become 0, the parents of the leaves won't be affected. But if you look at the root of the tree for example, it will still have to deal with the XORs of a lot more values than in the original problem. However I'm pretty sure that the answer would still stay the same, b/c I doubt that the #of descendants that affect the current node matters.

video editorial for Chinese:

BiliBili

In problem E we don't even need to check for toposort in SCC, we just need to check the count of indegree, there should be only one element whose indegree should be zero in the SCC

if there are no elements (you graph is not DAG)

and if there are more than one, than you can't reach from one of the elements to other

Yes. You are right. The editorial is a little off. What we meant was you don't need to do the complete SCC algorithm. You can just do a toposort on the graph (which is the first part of SCC algo). If you think about it,the first node in this sorted list will be the one belonging to a 0-degree SCC. So, you can just check the reachability of all other nodes from the first node.

Can someone please tell me what is the mistake in my code of problem C https://codeforces.me/contest/1777/submission/190077492

how can one can generalize this statement "We will focus on computing the expected value of F(A) rather than the sum, as the sum is just 2n×E(F(A))" from editorial of question d.

Each initial situation has chance of p(A)=1/2^n to appear, so E(F(A))=sum(F(A)*p(A))=sum(F(A)/2^n)=(1/2^n)*sum(F(A)).

F can be solved with the standard D&C in less than 1s. why is the TL 8s?

I didn't understand the explanation for D.

Jumping off someone else's comment, the idea is that if we let V(node, time) be the sum of all the possible values of this node over all initial positions, then V(node, time) is equal to 2^n-1 for all nodes and times(except when we hit a time such that the value of the node is guaranteed to become 0). This is b/c we can expect each node to have a value of 1 approximately half the time. This is kind of intuitive to see, but you might need to do some examples to prove this to yourself. So in order to calculate the sum of all ideal V's, we need another observation. At time 1, the problem states that the leaves of the tree will become 0. This effect will actually cascade upwards. So if a node i has a height of k_i (the distance from the node to the farthest leaf that's a descendant), then that means that it will have a 50% chance to be a 1 for exactly k_i times, before becoming a 0 for the rest of eternity. So the solution is to sum up all the values of k_i and multiply that sum with 2^n-1.

In problem D, the way $$$V_u(A,t)$$$ is defined (that is, value of node u is bitwise xor of all nodes at distance t from u) is not at all true for t=1, or many instances of time, for ex:

Did I do something wrong or there is something wrong in the editorial?

I didn't understand how we calculate expected value of k boolean nodes in problem D, can someone explain in simpler)

Expected value = C(k, odd) / (C(k, odd) + C(k, even)). It's a well known fact that C(k, odd) = C(k, even) with k > 0.

thanks!

I approached Problem C: Quiz Master in the following way:

Let $$$pos_x$$$ store all numbers $$$num$$$ (present in given sequence $$$A$$$) such that $$$num$$$ is divisible by $$$x$$$. Also make $$$pos_i$$$ is sorted for each $$$1 \leq i \leq m$$$.

If $$$pos_i$$$ is empty for any $$$i$$$ then our answer is $$$-1$$$ else it is just a variation of this problem Smallest range in K lists.

We need to take one element from all $$$pos_i$$$. Let us take the smallest element from each $$$pos_i$$$. Since the range (difference in smartness) depends only on maximum and minimum value of numbers in list, so we try to increase the minimum number in our list (as we cannot decrease the maximum number). We continue this until we run out of option i.e., we can no longer increase the minimum number. The answer is the minimum range found in all cases.

Here is my submission.

emordnilap is palindrome backwards.

For B. Emordnilap, wouldn't you have to set ans to a long long (c++ solution)? N goes up to 10^5 and n * (n-1) would most definitely be past the ranges of an int. an int goes to about 2 billion (2^9 or to be exact 2147483647), and multiplying would be out of the range. Please tell me if I'm wrong.

3rd line of code:

For Problem F, the editorial solution gives Memory Limit Exceeded on test 84. https://codeforces.me/contest/1777/submission/190232289

Can someone please tell me where have I got it wrong?

That's because you submitted it in a 64 bit compiler. Pointers are 8 bytes in 64 bit compilers and 4 bytes in 32 bit compilers. The solution will pass if you submit it in a 32 bit compiler (like GNU G++17). But it takes double the memory in 64 bit compilers and therefore MLEs.

Thanks.

Now I know that 64-bit can be harmful too.

Suggestion for people having TLE on F even though they implemented the optimal solution: Make sure you use a structure like a segment tree or sparse table to find the maximum in the interval you are solving. Or alternatively, pass it when returning recursion. Do not iterate through the entire interval as I did as that can take up to $$$O(N^2)$$$ time, because d&c does not divide in half each time.

Or they could just do the standard D&C which divides in half :)

How to solve it with standard D&C?

For a segment $$$[l;r]$$$ let $$$m={(l+r)\over 2}$$$.

The optimal answer is either in the left, right or both parts. For the left and right parts solve recursively.

Now, if the answer lies in the both parts. Assume that a segment $$$[L;R]$$$ such that $$$L \le m \lt R$$$ is the optimal answer.

$$$max(a_L,...,a_R)$$$ lies either in the $$$[L;m]$$$ (left part) or $$$[m+1;R]$$$ (right part).

Let's assume that it's in the right part. We will use two pointers. Initially, $$$L=m$$$ and $$$R=m+1$$$

Assume that the right end of the answer is $$$R$$$. We already know the maximum element $$$mxR$$$ and xor $$$xrR$$$ of the right part.

We have the right part of the answer, but we are missing the left one. How do we find it fast such that it would be the optimal one?

We assumed that the maximum element in the optimal answer lies in the right part. It means that each segment $$$[L;m]$$$ such that $$$max(a_L,...,a_m) \le mxR$$$ combined with the right part could be the optimal answer.

Create a trie for xor.

While $$$max(a_L,...,a_m) \le mxR$$$, add $$$xor(a_L,...,a_m)$$$ to the trie, and decrease $$$L$$$.

When you can't move $$$L$$$ anymore, get the best number $$$xrL$$$ from the trie to maximize $$$mxR ⊕ xrR ⊕ xrL$$$ and update the answer.

When you are done, repeat the process for the left part(as if the max would be in there).

submission

I think that I understand your solution. It seems like O(N^2), but it actually has O(N log A) armortized complexity per division. Thank you for sharing.

The complexity is $$$O(nlogn * logn)$$$.

Btw, why does it look like an $$$O(n^2)$$$ algo? It is absolutely the same as the standard D&C with an additional log from the trie?

I mean the solution which first comes to mind seems like you would need to do $$$O(N^2)$$$, because of keeping maximum and stuff. (Like, going through all possible right and left borders) But actually, it's enough to move one border and move the other one only when necessary by fixing on which side maximum is.

problem B was just super good.Idea of solving it is just amazing.

It's a bit too much talking about SCCs and kosaraju for E when in the end it just comes down to topologically sorting the uncondensed graph to find an appropriate candidate. But knowing how to think it terms of SSCs does help in this kind of problem.

No, you need to do topological sort on the condensed graph.

If what you call the condensed graph is the one with nodes being SCCs, then you definitely don't. you can check my submission for example. I use binary search. To verify a given cost $$$c$$$, I consider the graph with reverse edges only if $$$c \leq w$$$. I only topo sort then do a dfs on the untransformed graph starting from the top/root node i get. This is because topo sort guarantees that if the "root" node can't reach another node, then this node can't reach the root node either.

Of course it's true for whatever node $$$n_i$$$ that appears before node $$$n_j$$$ in the topological sorting.

That’s an interesting solution. I didn’t think that you can do that. However, I believe that the SCC solution is actually straightforward for most people.

Hey I find this Round has become unrated.Does anyone know why?

I was about to write the same; did you get any answers ???

Maybe unrated all the time?

What E(F(A)) means?

Don't understand why they prefer to put something like calculating things about $$$max(a_l,\cdots,a_r)$$$ to the last problem. You can solve most of this kind of problem using divide and conquer, or play dsu on cartesian tree. It's very typical and you can see many similar solutions in other problems about min max. With this trick, I figured out F in ten minutes with E still unsolved. (Actually I worked on D for 40 minutes after the contest) I also passed an Ex in ABC easily with this idea.

as a beginner problem a was fun solving

Cant figure out why this code is not working for Problem C. Any help is appreciated!

include<bits/stdc++.h>

using namespace std;

define pb push_back

define all(c) (c).begin(),c.end()

define fi first

define se second

typedef long long ll; typedef vector vi; typedef vector vvi; typedef vector vl; typedef pair<int,int> pi;

const int mxN = 1e5; vi facs[mxN+1]; int freq[mxN+1]; int cnt,m;

void init() { for(int i=1; i<=mxN; i++) for(int j=i+i; j<=mxN; j+=i) facs[j].pb(i); for(int i=1; i<=mxN; i++) facs[i].pb(i); }

void add(int x) { for(int fac : facs[x]) { if(fac>m) break;

freq[fac]++; if(freq[fac]==1) cnt++; }}

int sub(int x) { int res = cnt;

for(int fac : facs[x]) { if(fac>m) break; int t = freq[fac]-1; if(!t) res--; } return res;}

void solve() { int n; cin>>n>>m;

for(int i=1; i<=m; i++) freq[i] = 0; cnt = 0; vi a(n); for(int &i : a)cin>>i; sort(all(a)); int l = 0; int res = 1e9; for(int r=0; r<n; r++) { add(a[r]); if(cnt>=m) res = min(res,a[r]-a[l]); if(l==r) continue; int temp = sub(a[l]); while(temp>=m) { cnt = temp; for(int fac : facs[l]) { if(fac>m) break; freq[fac]--; } l++; res = min(res,a[r]-a[l]); if(l==r) break; temp = sub(a[l]); } } if(res==1e9) cout << "-1\n"; else cout << res << "\n";}

int main() { ios_base::sync_with_stdio(false); cin.tie(0);

}

sorry for necroposting but in E, we don't even need SCCs just taking the first element in topoSort and checking whether it is the required node is enough. because, if the first node cannot be the required node neither can any node later that node because such later node would have first node as its descendent which contradicts the toposort itself. (note here i used the fact that even if we don't have DAG topo sort still kind of works. you can verify this fact. similar fact is used in SCC algo.)

link: 260706982