hello all -- if you're in the market for a new competitive programming extension and use VSCode, may i humbly suggest mine? i think it's relatively feature-complete, but it's still in alpha (especially for languages other than C++) and may have some kinks that need working out. in theory it supports C++, Java, Python, and Rust (and it's very easy to add more).

i created this because i was dissatisfied that most existing solutions can't import a bunch of test cases from a directory and then run them in parallel. i also think it has a cooler UI than other extensions. as usual, i didn't think it would take this long when i started...

if you encounter any difficulties, please let me know on github. thanks for taking a look!

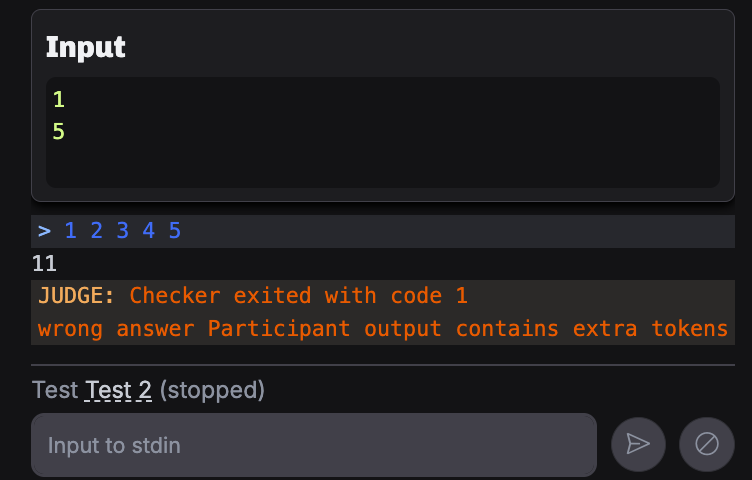

Realtime Input/Output

unlike other test runners for VSCode, we let you use prewritten inputs and still interact with your program on the fly.

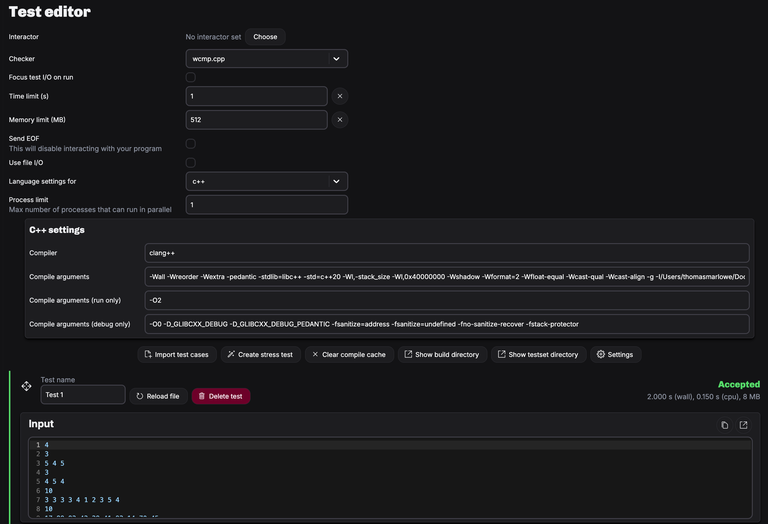

Test Editor

extensive configuration and support for interactors and custom checkers (floating point is easy -- just use fcmp! thanks testlib).

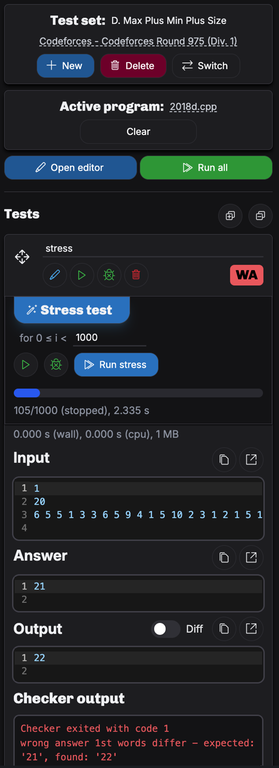
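
for anyone curious what a floating point checker boils down to, here's a minimal sketch of the usual tolerance rule: accept the answer if its absolute or relative error is within some epsilon. this is plain C++ and not testlib's actual code; the name `close_enough` and the 1e-6 default are assumptions made up for illustration.

```cpp
#include <algorithm>
#include <cmath>

// Hypothetical helper, not part of testlib or the extension.
// Accepts `actual` when its absolute OR relative error against
// `expected` is at most `eps`.
bool close_enough(double expected, double actual, double eps = 1e-6) {
    // NaN never counts as a correct answer.
    if (std::isnan(expected) || std::isnan(actual)) return false;
    double abs_err = std::fabs(expected - actual);
    // Scaling eps by max(1, |expected|) covers both cases at once:
    // absolute error for small values, relative error for large ones.
    return abs_err <= eps * std::max(1.0, std::fabs(expected));
}
```

a real checker would apply a test like this to each pair of tokens in the expected and actual outputs, and report the first mismatch.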

Stress Testing

run stress tests that check your efficient solution against a brute force solution on inputs produced by a generator. testlib is automatically included in generators/checkers/interactors.
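
if you haven't stress tested before, the idea is roughly the loop below. it squashes all three roles (generator, brute force, efficient solution) into one file just to show the shape of it; the toy problem (maximum subarray sum) and every function name here are made up, and this is not how the extension wires things up internally.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <random>
#include <vector>

// Hypothetical toy problem: maximum sum of a non-empty contiguous subarray.

// Generator: a small random array, reproducible from the seed.
std::vector<int> gen(std::uint32_t seed) {
    std::mt19937 rng(seed);
    int n = static_cast<int>(rng() % 8) + 1;                 // 1..8 elements
    std::vector<int> a(n);
    for (int& x : a) x = static_cast<int>(rng() % 21) - 10;  // values in -10..10
    return a;
}

// Brute force: try every subarray, O(n^2).
long long brute(const std::vector<int>& a) {
    long long best = a[0];
    for (std::size_t i = 0; i < a.size(); ++i) {
        long long sum = 0;
        for (std::size_t j = i; j < a.size(); ++j) {
            sum += a[j];
            best = std::max(best, sum);
        }
    }
    return best;
}

// Efficient solution under test: Kadane's algorithm, O(n).
long long fast(const std::vector<int>& a) {
    long long best = a[0], cur = a[0];
    for (std::size_t i = 1; i < a.size(); ++i) {
        cur = std::max<long long>(a[i], cur + a[i]);
        best = std::max(best, cur);
    }
    return best;
}

// Returns the first seed in [0, rounds) where the two answers differ, or -1.
long long stress(std::uint32_t rounds) {
    for (std::uint32_t seed = 0; seed < rounds; ++seed) {
        std::vector<int> a = gen(seed);
        if (brute(a) != fast(a)) return seed;
    }
    return -1;
}
```

small inputs are deliberate: when `stress` returns a failing seed, `gen(seed)` gives you a counterexample short enough to trace by hand.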

Debugging

debug with CodeLLDB / debugpy / the Java extension for VSCode. integrates with clangd to provide linting based on your compiler arguments.

Competitive Companion Integration

integrates with the Competitive Companion browser extension for one-click test imports.

note: you can't use Hightail's Competitive Companion integration while this extension is active (they bind to the same port).

File I/O and Directory Import

perfect for USACO!