Hi there!

Some time ago, SashaT9 and I came up with the idea of creating a collection of little tricks useful in competitive programming. We believe that such a website can both help beginners and be useful for experts as a reference containing many example problems, which can be used, for example, to refresh one's memory or to prepare an educational contest.

It is worth saying that there are already a number of blogs here with the same purpose. Our main distinguishing feature is that we provide not just a list of tricks with brief descriptions, but a detailed page for each of them.

This project was inspired by the Tricki project (now defunct). I have already posted a test blog here to demonstrate this format.

We run a website built with mkdocs (similar to cp-algorithms):

So far we have written five more or less simple (but, unfortunately, still unpolished) pages:

- Counting labeled trees

- One can process bits independently when relevant

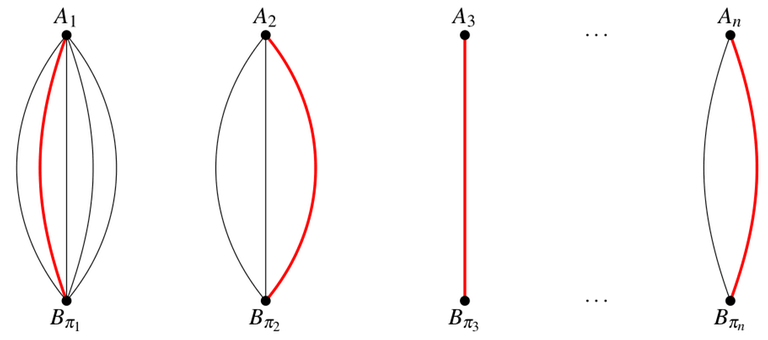

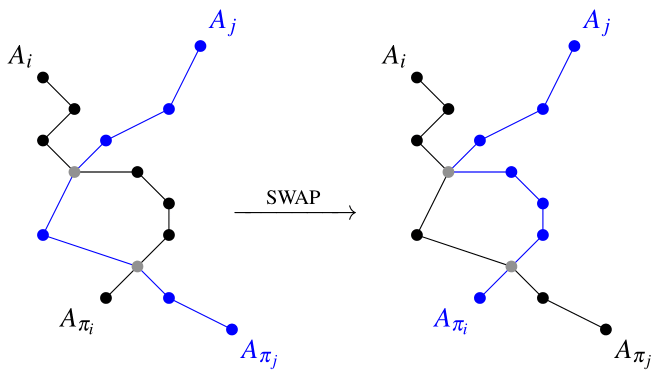

- Permutations can be represented as graphs

- Translate DP to the matrix form

- How to come up with a comparator?

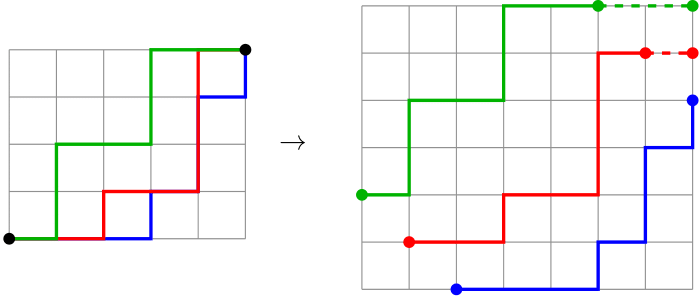

- (02.10.23) One can use determinants to count lattice paths

- (02.10.23) Small number of groups can be processed straightforwardly

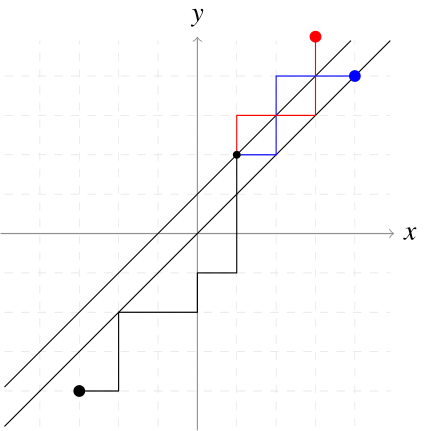

- (03.10.23) Use diagonals as parameters in grid DP problems

- (03.10.23) How to use ternary search

- (03.10.23) Lagrange multipliers can simplify tricky optimisation problems

This is an open project, so we encourage everybody to write their own expositions and add them to the GitHub repository.

Miaow! Miaow! =^◡^=