1485A - Add and Divide

Author: TheScrasse

Preparation: MyK_00L

Suppose that you can use $$$x$$$ operations of type $$$1$$$ and $$$y$$$ operations of type $$$2$$$. Try to reorder the operations in such a way that $$$a$$$ becomes the minimum possible.

You should use operations of type $$$2$$$ first, then moves of type $$$1$$$. How many operations do you need in the worst case? ($$$a = 10^9$$$, $$$b = 1$$$)

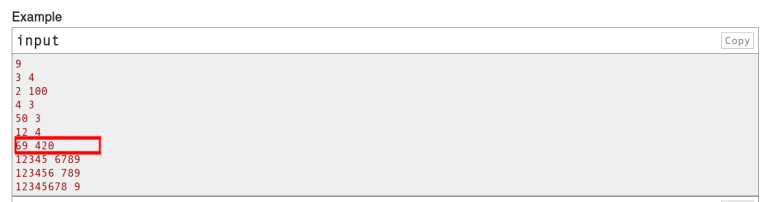

You need at most $$$30$$$ operations. Iterate over the number of operations of type $$$2$$$.

Notice how it is never better to increase $$$b$$$ after dividing ($$$\lfloor \frac{a}{b+1} \rfloor \le \lfloor \frac{a}{b} \rfloor$$$).

So we can try to increase $$$b$$$ to a certain value and then divide $$$a$$$ by $$$b$$$ until it is $$$0$$$. Being careful not to do this with $$$b<2$$$, the number of times we divide is going to be $$$O(\log a)$$$. In particular, if you reach $$$b \geq 2$$$ (this requires at most $$$1$$$ move), you need at most $$$\lfloor \log_2(10^9) \rfloor + 1 = 30$$$ moves to finish.

Let $$$y$$$ be the number of moves of type $$$2$$$; we can try all values of $$$y$$$ ($$$0 \leq y \leq 30$$$) and, for each $$$y$$$, check how many moves of type $$$1$$$ are necessary.

Complexity: $$$O(\log^2 a)$$$.

If we notice that it is never convenient to increase $$$b$$$ over $$$6$$$, we can also achieve a solution with better complexity.
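The enumeration over $$$y$$$ described above can be sketched as follows. This is a minimal illustration rather than the official solution; the function name `min_operations` and the default cap `max_increments = 30` are assumptions chosen to match the bound derived in the text.

```python
def min_operations(a: int, b: int, max_increments: int = 30) -> int:
    """Try every number y of increments (type 2), then divide (type 1) until a is 0."""
    best = None
    for y in range(max_increments + 1):
        divisor = b + y
        if divisor < 2:
            # Dividing by 1 never changes a, so this choice of y cannot finish.
            continue
        divisions, rest = 0, a
        while rest > 0:
            rest //= divisor
            divisions += 1
        total = y + divisions
        if best is None or total < best:
            best = total
    return best
```

Each candidate $$$y$$$ costs $$$O(\log a)$$$ divisions, which gives the $$$O(\log^2 a)$$$ bound stated above.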

Official solution: 107232596

1485B - Replace and Keep Sorted

Author: TheScrasse

Preparation: Kaey

You can make a $$$k$$$-similar array by assigning $$$a_i = x$$$ for some $$$l \leq i \leq r$$$ and $$$1 \leq x \leq k$$$.

How many $$$k$$$-similar arrays can you make if $$$x$$$ is already equal to some $$$a_i$$$ ($$$l \leq i \leq r$$$)?

How many $$$k$$$-similar arrays can you make if either $$$x < a_l$$$ or $$$x > a_r$$$?

How many $$$k$$$-similar arrays can you make if none of the previous conditions holds?

Let's consider each value $$$x$$$ from $$$1$$$ to $$$k$$$.

- If $$$x < a_l$$$, you can replace $$$a_l$$$ with $$$x$$$ (and you get $$$1$$$ $$$k$$$-similar array). There are $$$a_l-1$$$ such values of $$$x$$$.

- If $$$x > a_r$$$, you can replace $$$a_r$$$ with $$$x$$$ (and you get $$$1$$$ $$$k$$$-similar array). There are $$$k-a_r$$$ such values of $$$x$$$.

- If $$$a_l < x < a_r$$$, and $$$x \neq a_i$$$ for all $$$i$$$ in $$$[l, r]$$$, you can either replace the rightmost $$$b_i$$$ which is less than $$$x$$$, or the leftmost $$$b_i$$$ which is greater than $$$x$$$ (and you get $$$2$$$ $$$k$$$-similar arrays). There are $$$(a_r - a_l + 1) - (r - l + 1)$$$ such values of $$$x$$$.

- If $$$x = a_i$$$ for some $$$i$$$ in $$$[l, r]$$$, no $$$k$$$-similar arrays can be made.

The total count is $$$(a_l-1)+(k-a_r)+2((a_r - a_l + 1) - (r - l + 1))$$$, which simplifies to $$$k + (a_r - a_l + 1) - 2(r - l + 1)$$$.

Complexity: $$$O(n + q)$$$.
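A minimal sketch of the closed formula, answering one query in $$$O(1)$$$. The function name `count_k_similar` and the 1-indexed query bounds are assumptions made for illustration; the array is assumed to be strictly increasing with values in $$$[1, k]$$$.

```python
def count_k_similar(a, k, l, r):
    """Number of k-similar arrays for the subarray a[l..r] (1-indexed, inclusive)."""
    left, right = a[l - 1], a[r - 1]
    length = r - l + 1
    # k + (a_r - a_l + 1) - 2 * (r - l + 1), as derived above.
    return k + (right - left + 1) - 2 * length
```

For example, with `a = [1, 2, 4, 5]` and `k = 5`, the query `(2, 3)` covers `[2, 4]` and the four valid arrays are `[1, 4]`, `[3, 4]`, `[2, 3]` and `[2, 5]`.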

Official solution: 107232462

1485C - Floor and Mod

Authors: isaf27, TheScrasse

Preparation: Kaey

Let $$$\lfloor \frac{a}{b} \rfloor = a~\mathrm{mod}~b = k$$$. Is there an upper bound for $$$k$$$?

$$$k \leq \sqrt x$$$. For a fixed $$$k$$$, can you count the number of special pairs such that $$$a \leq x$$$ and $$$b \leq y$$$ in $$$O(1)$$$?

We can notice that, if $$$\lfloor \frac{a}{b} \rfloor = a~\mathrm{mod}~b = k$$$, then $$$a$$$ can be written as $$$kb+k$$$ ($$$b > k$$$). Since $$$b > k$$$, we have that $$$k^2 < kb+k = a \leq x$$$. Hence $$$k \leq \sqrt x$$$.

Now let's count special pairs for any fixed $$$k$$$ ($$$1 \leq k \leq \sqrt x$$$). For each $$$k$$$, you have to count the number of $$$b$$$ such that $$$b > k$$$, $$$1 \leq b \leq y$$$, $$$1 \leq kb+k \leq x$$$. The last condition is equivalent to $$$1 \leq b \leq x/k-1$$$.

Therefore, for any fixed $$$k > 0$$$, the number of special pairs ($$$a\leq x$$$; $$$b \leq y$$$) is $$$max(0, min(y,x/k-1) - k)$$$. The result is the sum of the number of special pairs for each $$$k$$$.

Complexity: $$$O(\sqrt x)$$$.
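The summation over $$$k$$$ can be sketched as below. This is an illustrative version, not the official code; the name `count_special_pairs` is hypothetical, and $$$x/k$$$ is read as integer (floor) division, which is safe because $$$b$$$ is an integer.

```python
def count_special_pairs(x: int, y: int) -> int:
    """Count pairs (a, b) with 1 <= a <= x, 1 <= b <= y and a // b == a % b."""
    total = 0
    k = 1
    while k * k <= x:
        # Valid b satisfy k < b <= min(y, x // k - 1).
        total += max(0, min(y, x // k - 1) - k)
        k += 1
    return total
```

For instance, `count_special_pairs(3, 4)` counts only the pair $$$(3, 2)$$$.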

Official solution: 107232416

1485D - Multiples and Power Differences

Author: TheScrasse

Preparation: MyK_00L

Brute force doesn't work (even if you optimize it): there are relatively few solutions.

There may be very few possible values of $$$b_{i,j}$$$, if $$$b_{i-1,j}$$$ is fixed. The problem arises when you have to find a value for a cell with, e.g., $$$4$$$ fixed neighbors. Try to find a possible property of the neighbors of $$$(i, j)$$$, such that at least a solution for $$$b_{i,j}$$$ exists.

The least common multiple of all integers from $$$1$$$ to $$$16$$$ is less than $$$10^6$$$.

Build a matrix with a checkerboard pattern: let $$$b_{i, j} = 720720$$$ if $$$i + j$$$ is even, and $$$720720+a_{i, j}^4$$$ otherwise. The difference between two adjacent cells is obviously a fourth power of an integer. We choose $$$720720$$$ because it is $$$\operatorname{lcm}(1, 2, \dots, 16)$$$. This ensures that $$$b_{i, j}$$$ is a multiple of $$$a_{i, j}$$$, because it is either $$$720720$$$ itself or the sum of two multiples of $$$a_{i, j}$$$.

Complexity: $$$O(nm)$$$.
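A short sketch of the checkerboard construction, assuming $$$1 \leq a_{i,j} \leq 16$$$ as the use of $$$\operatorname{lcm}(1, \dots, 16)$$$ implies. The function name `build_matrix` is hypothetical.

```python
LCM_1_TO_16 = 720720

def build_matrix(a):
    """Return b with b[i][j] = 720720 on even i + j and 720720 + a[i][j]**4 otherwise."""
    return [
        [LCM_1_TO_16 if (i + j) % 2 == 0 else LCM_1_TO_16 + value ** 4
         for j, value in enumerate(row)]
        for i, row in enumerate(a)
    ]
```

The largest value produced is $$$720720 + 16^4 = 786256$$$, which stays below $$$10^6$$$.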

Official solution: 107232359

1485E - Move and Swap

Author: TheScrasse

Preparation: TheScrasse

What happens if you can't swap coins?

Let $$$dp_i$$$ be the maximum score that you can reach after $$$dist(1, i)$$$ moves if there is a red coin on node $$$i$$$ after step $$$3$$$. However, after step $$$2$$$, the coin on node $$$i$$$ may be either red or blue. Try to find transitions for both cases.

If you consider only the first case, you solve the problem if there are no swaps. You can greedily check the optimal location for the blue coin: a node $$$j$$$ such that $$$dist(1,i) = dist(1,j)$$$ and $$$a_j$$$ is either minimum or maximum.

Instead, if the coin on node $$$i$$$ is blue after step $$$2$$$, the red coin is on node $$$j$$$ and you have to calculate $$$\max(dp_{parent_j} + |a_j - a_i|)$$$ for each $$$i$$$ with a fixed $$$dist(1, i)$$$. How?

Divide the nodes in groups based on the distance from the root. Then, for each $$$dist(1, i)$$$ in increasing order, calculate $$$dp_i$$$ — the maximum score that you can reach after $$$dist(1, i)$$$ moves if there is a red coin on node $$$i$$$ after step $$$3$$$. You can calculate $$$dp_i$$$ if you know $$$dp_j$$$ for each $$$j$$$ that belongs to the previous group. There are two cases:

if after step $$$2$$$ the coin on node $$$i$$$ is red, the previous position of the red coin is fixed, and the blue coin should reach either the minimum or the maximum $$$a_j$$$ among the $$$j$$$ that belong to the same group of $$$i$$$;

if after step $$$2$$$ the coin on node $$$i$$$ is blue, there is a red coin on node $$$j$$$ ($$$dist(1, i) = dist(1, j)$$$), so you have to maximize the score $$$dp_{parent_j} + |a_j - a_i|$$$.

This can be done efficiently by sorting the $$$a_i$$$ in the current group and calculating the answer separately for $$$a_j \leq a_i$$$ and $$$a_j > a_i$$$; for each $$$i$$$ in the group, the optimal node $$$j$$$ either doesn't change or it's the previous node.

Alternatively, you can notice that $$$dp_{parent_j} + |a_j - a_i| = \max(dp_{parent_j} + a_j - a_i, dp_{parent_j} + a_i - a_j)$$$, and you can maximize both $$$dp_{parent_j} + a_j - a_i$$$ and $$$dp_{parent_j} + a_i - a_j$$$ greedily (by choosing the maximum $$$dp_{parent_j} + a_j$$$ and $$$dp_{parent_j} - a_j$$$, respectively). In this solution, you don't need to sort the $$$a_i$$$.
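The sort-free alternative can be sketched for a single group of nodes at the same depth. This is only the swap transition, not a full solution; `best_swap_scores`, `parent_dp` (holding $$$dp_{parent_j}$$$ for each node $$$j$$$ of the group) and `values` (holding $$$a_j$$$) are hypothetical names, and the sketch also lets $$$j = i$$$, which adds $$$0$$$ to the score.

```python
def best_swap_scores(parent_dp, values):
    """For each i in the group, return max over j of parent_dp[j] + |values[j] - values[i]|."""
    # |t| = max(t, -t), so the two signs can be maximised independently.
    best_plus = max(p + v for p, v in zip(parent_dp, values))   # max of dp_parent_j + a_j
    best_minus = max(p - v for p, v in zip(parent_dp, values))  # max of dp_parent_j - a_j
    return [max(best_plus - v, best_minus + v) for v in values]
```

Both maxima are computed once per group, so the whole group is handled in time linear in its size.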

The answer is $$$\max(dp_i)$$$.

Complexity: $$$O(n)$$$ or $$$O(n\log n)$$$.

Official solution: 107232216

1485F - Copy or Prefix Sum

Author: TheScrasse

Preparation: TheScrasse

Why isn't the answer $$$2^{n-1}$$$? What would you overcount?

Find a dp with time complexity $$$O(n^2\log n)$$$.

Let $$$dp_{i, j}$$$ be the number of hybrid prefixes of length $$$i$$$ and sum $$$j$$$. The transitions are $$$dp_{i, j} \rightarrow dp_{i+1, j+b_i}$$$ and $$$dp_{i,j} \rightarrow dp_{i+1,b_i}$$$. Can you optimize it to $$$O(n\log n)$$$?

For each $$$i$$$, you can choose either $$$a_i = b_i$$$ or $$$a_i = b_i - \sum_{k=1}^{i-1} a_k$$$. If $$$\sum_{k=1}^{i-1} a_k = 0$$$, the two options coincide and you have to avoid overcounting them.

This leads to an $$$O(n^2\log n)$$$ solution: let $$$dp_i$$$ be a map such that $$$dp_{i, j}$$$ corresponds to the number of ways to create a hybrid prefix $$$[1, i]$$$ with sum $$$j$$$. The transitions are $$$dp_{i, j} \rightarrow dp_{i+1, j+b_i}$$$ (if you choose $$$b_i = a_i$$$, and $$$j \neq 0$$$), $$$dp_{i,j} \rightarrow dp_{i+1,b_i}$$$ (if you choose $$$b_i = \sum_{k=1}^{i} a_k$$$).

Let's try to get rid of the first layer of the dp. It turns out that the operations required are

- move all $$$dp_j$$$ to $$$dp_{j+b_i}$$$

- calculate the sum of all $$$dp_j$$$ in some moment

- change the value of a $$$dp_j$$$

and they can be handled in $$$O(n\log n)$$$ with "Venice technique".

$$$dp$$$ is now a map such that $$$dp_j$$$ corresponds to the number of ways to create a hybrid prefix $$$[1, i]$$$ such that $$$\sum_{k=1}^{i} (a_k - b_k) = j$$$. Calculate the dp for all prefixes from left to right. If $$$b_i = a_i$$$, you don't need to change any value of the dp; if $$$b_i = \sum_{k=1}^{i} a_k$$$, you have to set $$$dp_{-\sum_{k=1}^{i-1} b_k}$$$ to the total number of hybrid arrays of length $$$i-1$$$.

Complexity: $$$O(n\log n)$$$.
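A possible sketch of the map-with-offset idea follows. It is an illustration under assumptions, not the official code: the name `count_hybrid_arrays`, the modulus $$$10^9+7$$$ and the variable `shift` (the global offset, so that the real prefix sum equals stored key plus `shift`) are all choices made here.

```python
MOD = 10 ** 9 + 7

def count_hybrid_arrays(b):
    """Count hybrid arrays a for b, keeping one map of prefix sums with a global shift."""
    ways = {0: 1}   # stored key -> number of arrays; real prefix sum = key + shift
    shift = 0
    total = 1       # number of distinct hybrid prefixes so far (the empty prefix)
    for value in b:
        # Arrays whose current prefix sum is 0 make both options coincide.
        zero_sum = ways.get(-shift, 0)
        # Option a_i = b_i: every prefix sum grows by b_i (a lazy shift of all keys).
        shift += value
        new_total = (2 * total - zero_sum) % MOD
        # Option b_i = prefix sum: every previous array ends with prefix sum b_i.
        ways[value - shift] = total
        total = new_total
    return total
```

With a hash map each step is expected $$$O(1)$$$; an ordered map gives the $$$O(n\log n)$$$ bound quoted above.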

Official solution: 107232144

Love editorials with hints! Also a good but not great contest!!

Video Editorial Problem D: Multiples and Power Differences

Well explained

Thanks a lot don't know why people down-voted

I think because reply was off topic to parent comment. It was good if same comment was made separately.

Why not?

Video editorial for C Floor and Mod: https://youtu.be/ap-q_wQn7BE Solution in O(sqrt(x)).

Can anyone explain the hint A problem to me

The idea is to first increase b as much as you want and then perform operation 1. The reason is: let's say you divide first and then increase b; our a will be floor(a/b) and b will be b+1. If we increase b first, then finally a will be floor(a/(b+1)) and b will be b+1. You can similarly prove this for a larger number of operations. So once you know that we have to increment b first, the question is by how much. You will see that if we start with b and increment b till it reaches b+k (k steps), you will now need to integer divide a by b+k until a becomes 0. Let's call the number of steps to do so 'l'. So the total number of steps will be l+k. I observed that we don't need to go very far, as that would increase k by very much but l won't be affected that much. So I started with b and went until b+100 and took the minimum value of l+k for every b in this range. P.S: Sorry, I don't know how to use mathematical symbols

Sorry ,but i dont get,how do u know to increase b only to a 100????

Its pure intuition,as you can see in the editorial, it turns out that we dont even need to increase above 30. To get b->b+k we need k steps and to get a->0 we need O(log(a)) steps where base is b+k, we can observe that as b+k increases, the function log(a) doesnt decrease very fast and thats why I thought we dont need to increase b by a lot as that would increase total number of steps more than what log(a) would decrease and the net effect would be that we would require more steps.

I also didnt get how 30 comes...and how should I even realize that...:( Bad Problem

I approached in this way: Let's not keep track of count of type2 operation before doing type1 operation.

You cant understand solution --> bad problem.

Amazing logic

XD...was waiting for this comment to appear...no not understanding the editorial is not the reason to consider it a bad problem...

OK, understand you :)

we need almost 30 operations when it s 2 I agree. but how is it related to incrementing b by only 30 times and checking the minimum possibility scenario of each incremented b. why not more than 30 increments of b?

Because if you use 30 steps for incrementing then you need one more step for dividing, making it totally 31 steps. Instead of that you could have simply used 30 steps all in dividing, which would have brought a down to 0 already.

We can rewrite the question as min(x-b+(ln(a)/ln(x))) for all x>=b. Of course, there is some rounding off business, which we do at last. So consider this fnc, if we differentiate this function, we get 1-(ln(a)/x), so we get a minimum at x=ln(a), but it may be also possible that ln(a) is less than b, so if we take the worst possible ln(a) it won't cross ln(10^9), which comes around 25. So we can iterate x from b to ln(a)+5, and obtain a minimum value. Well, this analysis is rough. So b we can increase up to 25 or so, but the editorial gives a better limit.

You differentiated it incorrectly:

$$$d_x \frac{ln(a)}{\ln(x)} = - \frac{\ln(a)}{x \ln^2(x)}$$$

Therefore after differentiation you must get the equation

$$$1 - \frac{\ln(a)}{x \ln^2(x)} = 0$$$

The upper limit for $$$a$$$ is $$$10^9$$$. Therefore we arrive to

$$$x \ln^2(x) = 9 \ln(10)$$$

The left part is strictly less than $$$0$$$ for $$$x \leqslant 6$$$ and it becomes positive for $$$x \geqslant 7$$$, where $$$x \in N$$$.

As the minimum of $$$f(x) = x - b + \frac{\ln(a)}{\ln(x)}$$$ is somewhere between $$$6$$$ and $$$7$$$ we suffice with at most $$$6$$$ iterations provided that we start from $$$b = 1$$$ (ending up at $$$7$$$).

Otherwise we need to iterate upwards only until $$$b >= 7$$$. So that if you start with $$$b = 10$$$ you do zero iterations!

You can type $...$ to insert math, where "..." is latex math expression. Common examples:

$a_{1} + a^{2}$ --> $$$a_{1} + a^{2}$$$

$\frac{a}{b}$ --> $$$\frac{a}{b}$$$

It wants to say that we should increment $$$b$$$ first as much we want and then do the divide operations . Because that way number of division operations would be less.

Why solution of problem E's complexity is $$$O(n\log n)$$$?

upd:now it's ok

Good contest but I think first 4 problems were all heavily based on Maths (except B).Would have been great contest if atleast one was from another topic making problemset more balanced.

B,C,D involved math but some ideas outside math was also required . Like binary search in C (not necessary though) , prefix sum in B , chess coloring in D. Also though i didn't read E,F they are tagged as DP and data structures.

Thanks for the interesting problems and the fast editorial!

Very nice problems. Every problem had something to offer at least till D(didn't see E and F). I wish I could upvote more for the nice editorial with hints. Looking forward to more contests from the authors.

I solved A but don't know why it worked , now i am reading editorial of A, lol

lol

I don't know why are you commenting, lol

what are you doing here even you didn't participated this contest , that's why you can not understand why i comment here.lol

Newbies just spam everywhere!

pupils think they are very smart,lol

No!!

Thanks for fast editorial

I love math forces.

Can someone please tell why this code for problem C is giving TLE, inspite of O(sqrt(x)) solution? Code: 107231229

Because it works in O(min(x, y) - sqrt(x, y)) >= O(sqrt(x, y))

Is it O(sqrt(x))? Because I think you are iterating from sqrt(x) to x, which I think is O(x).

I see what you did there ;)

Problem — Floor and Mod

Is this dejaVu ? Cause I already saw this in the contest blog ig.

I earlier posted it there but for some reason it got removed.

ROFL !! Keen observer !!

I see that your upvote count is nearing 69 hope it stays there :rofl:

The contest was really enjoyable! Thanks for the fast editorials!

was a good contest .. good work authors ...and editorial with hints is really a good idea :)

I used the same logic in div2A. Can someone please help me where I went wrong?

Link to submission: https://codeforces.me/contest/1485/submission/107217438

I guess it's because doubles are devil. On case 5 test 3 your l should be 3 but it's just a little bit less because of approximation, so your flag isn't activated. Rewrite without double and log and it should work

Thanks, got it. I never knew this happened with log. Could you explain why exactly does this happen?

PS. It's so annoying to get WA for such a silly mistake, could've been a specialist for the first time mayb :( .

Why does first solution in F has complexity O(N^2*log N)? Don't we have O(N^2) elements in map in worst case (if almost all subsegments have different sums) and get complexity O(N^3*log N)?

The possible different sums for each length are $$$O(n)$$$, because they only depend on the rightmost $$$i$$$ such that $$$b_i = \sum_{k=1}^{i} a_k$$$.

It was too easy) Thank you!

In the editorial of problem A, it is given that even if we check the value of b up to 6 it will work. can someone explain why ?

Not a formal proof, but here's an intuitive way to explain it: log(1e9)/log(b+1)+1<log(1e9)/log(b)

I did not understand how it proves that, can you explain it?

The number of moves needed without increasing $$$b$$$, is $$$\frac{log(a)}{log(b)}$$$

So, if $$$\frac{log(a)}{log(b+1)}+1>\frac{log(a)}{log(b)}$$$, it means it's not convenient to increase $$$b$$$.

For all $$$a$$$ up to $$$10^9$$$, this is true for every $$$b >= 6$$$, so it is never convenient to increase $$$b$$$ if it is $$$>=6$$$

We can say this only by verifying or there is some short way to get the above conclusion using the formula in your comment ?

Yeah, you can solve for $$$b$$$ in that inequality. Also, that inequality is just $$$log_{b + 1}a + 1 < log_ba$$$.

Ok, so i think we need to first put the value of $$$a$$$ and then solve for $$$b$$$ .But to justify for all $$$a<=1e9$$$ using this formula , we need to iterate for all values of $$$a$$$.

It's trivial to prove that the answer is <= 31 because you can solve it with +1 to make it 2 and divide it by 2 30 times. So test it up until 31 if that makes you happy.

I understood the O(n^2logn) solution of problem F. But I don't get how to get rid of first layer of dp. Specifically in the editorial —

Let's try to get rid of the first layer of the dp. It turns out that the operations required are....

can anyone explain this ?

Here are video solutions to all problems, a challenge version of E, and a lesson on how to misread problems (of course, the last two things are totally unrelated)

An easier solution for A with dp if you don't know how to prove your greedy solution, here. Dp state is represented by $$$a$$$ & $$$b$$$. At every stage we have two choices:

1) divide $$$a$$$ by $$$b$$$.

2) increase $$$b$$$ by 1.

Now take the minimum of these two at every state. Also in this way we can see that $$$b$$$ can go till 1e9, but we can observe that $$$a$$$ will be zero if we divide it once with atmost 13 consecutive numbers in worst case. So we can stop if $$$b$$$ goes beyond.(for safety i put it 20 instead of 13 in code).

I got WA in Problem A because I used a log() function :((

107195035

When values are for e.g. a = 18^7 and b = 18, log(a) / log (b) returns 6.9999999999994... something. Does anyone know a better way to use log() and floor() to bypass this issue?

temp = (log(a) / log(i));

while(pow(i,temp) <= a ) temp++;

ans = min(ans, temp + i - b);

floor(log(a)/log(b) + 1e-11) should work
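An integer-only alternative avoids the rounding question entirely. The sketch below is a hypothetical helper (the name `floor_log` is not from any submission in this thread) that returns the largest exponent $$$e$$$ with $$$b^e \leq a$$$, assuming $$$a \geq 1$$$ and $$$b \geq 2$$$.

```python
def floor_log(a: int, b: int) -> int:
    """Largest e >= 0 with b**e <= a, computed with integer arithmetic only."""
    exponent, power = 0, b
    while power <= a:
        power *= b
        exponent += 1
    return exponent
```

The number of divisions needed to bring $$$a$$$ down to $$$0$$$ with a fixed $$$b$$$ is then `floor_log(a, b) + 1`.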

what is greedy solution in problem A and how to prove the last statement in editorial :

$$$a = 10^9, b = 1$$$

$$$2^{30} > a, steps = 31$$$

$$$3^{19} > a, steps = 21$$$

$$$4^{15} > a, steps = 18$$$

$$$5^{13} > a, steps = 17$$$

$$$6^{12} > a, steps = 17$$$

$$$7^{11} > a, steps = 17$$$

$$$8^{10} > a, steps = 17$$$

$$$9^{10} > a, steps = 18$$$

$$$10^{10} > a, steps = 19$$$
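The table above can be reproduced with a few lines. This is just a checking sketch; `steps_table` and its parameters are hypothetical names, and each row is (final value of $$$b$$$, number of divisions, total steps starting from `start_b`).

```python
def steps_table(a=10 ** 9, start_b=1, targets=range(2, 11)):
    """For each final divisor, count increments from start_b plus divisions until a is 0."""
    rows = []
    for target in targets:
        divisions, rest = 0, a
        while rest > 0:
            rest //= target
            divisions += 1
        rows.append((target, divisions, (target - start_b) + divisions))
    return rows
```

The minimum total of 17 is reached for final divisors 5 through 8.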

thanks , example can help to visualize . How to prove it formally ? Like for every $$$a,b <= 1e9$$$ ?

1). $$$a = 10^9$$$, $$$b \in [1, 10^9]$$$

As you can see before, the distribution converges at $$$5$$$ and diverges from $$$9$$$ for $$$b = 1$$$ the same holds for any $$$b$$$ cuz the difference stays the same.

2). $$$a \in [1, 10^9], b = 1$$$

Likewise, $$$\exists x \mid log_2a + 1 > log_3a + 2 > \dots > log_xa + x - 1 < log_{x + 1}a + x < \dots$$$.

And I can state the same from above, the distribution converges at $$$x$$$ and diverges from $$$x + 1$$$ for $$$b = 1$$$ the same holds for any $$$b$$$ cuz the difference stays the same.

Thus, $$$6$$$ is the upper-bound for this problem since $$$a$$$ can be at most $$$10^9$$$.

P.S. The upper-bound could vary accordingly to $$$a$$$.

For F the editorial definition is slightly different from what I thought. Define dp[i][j] as the number of ways of using i numbers and getting j = CURRENT_SUM - sum[1....i]. Under this definition, taking a[i] = b[i] makes the next j be the same as j so if we do nothing we already do the transition. For the other transition, we make j = a[i] - sum[1...i] so we take the total number of ways we have so far and exclude the number of ways such that the sum is the same in this transition or simply override dp[i][j] with the total number of ways in i-1 as the official solution does. In the end the code is equal but this way of thinking seems slightly cleaner (in my opinion)

Very nice problems!! Sad on missing out on Master due to FST in problem B :(

Hi, I think that I might have a better solution for problem div2A. 107216266 I can't say what the complexity of my submission is with total certainty but I think that maybe it is better than that of the proposed solution. Basically, I try all possible divisors in increasing order while keeping track of the previous answer. When it gets to the point where the current solution is worse than the previous one, then it stops the loop and returns the previous answer.

I don't have a formal mathematical proof to state why this works, but it does. It would be great if someone could explain what the complexity of my code is :)

Your solution is pretty much the same as the official solution, the complexity is $$$O(log(n))$$$, but it can be done in $$$O(1)$$$ if you use the builtin log function (paying attention to floating point errors).

The reason why in the editorial we say our complexity is $$$O(log^2(n))$$$ is because we did not prove that the number of increases needed is $$$O(1)$$$ (it's not, it's prob something like $$$O(log(log(n)))$$$, 6 for these constraints, but if $$$b$$$ is greater than that, then you don't need to increase at all), for simplicity we just proved it is $$$O(log(n))$$$

Great! thanks

A quick proof of correctness is to note that the number of steps required is the pointwise floor of a convex function.

$$$g'$$$ is obviously increasing, so that $$$f(x)$$$ is non-increasing for $$$x \cdot (\ln x)^2 \leq \ln a$$$ and non-decreasing for $$$x \cdot (\ln x)^2 \geq \ln a$$$. This also means that the optimal value of $$$x$$$ is of order $$$\frac{\log a}{(\log \log a)^2}$$$.

Great Contest, 1st, 2nd, and 4th questions were purely math-based. They should include problems from other topics as well.

all you have to do in D

just get the lcm for the numbers from 1 to 16

and get for every number from the 1 to 16 the multiple to it and call it x and the different between the lcm and x have 4'th sqrt (yeaaaaaaaaah all that just in 1 minute brute force in your local pc)

color the grid as a chess board

replace the black cells by the LCM

and replace the white cells by the answer that you got from the brute force

do you need a proof?

ok i don't give a sh....

i am just upset because I did not try in D in the contest :(

How do you write a brute force efficiently?

all that will just to get the answer for every number and take it by your hands

Nvm, I misunderstood. I thought that you got the idea by bruteforcing the entire solution.

By the way, you don't even need to brute force values on white cells, you can just put $$$720720 + a_{i, j}^4$$$.

yp, i was in brain lag

edit: i was thinking that the next number is lcm * aij^4 instead of lcm + aij^4 :(

Great problems+fast editorial with hints are the best combo.

For C I have good solution it don't requires math just logical binary search. See think of the graph of quotients plotting on (b) axis. For calcuating the peak of quotients basic observation will be for random number x on b-axis having remainders [a>=(x+1)(x-1)]=>>(a=x^2-1) =>>a+1=x^2.This equation comes from X=(Y.r)+r is now x=(y+1)r. So min value of(sqrt(a+1),b). Cool I got peak ! This is digram.. Now iterate from right end using binary search locate the location where quotient is still the same. And subtract both the points multiplying by number of quotients. In this keep way iterating to peak ((sqrt(a+1),b).

Can anyone tell me why this code for E gets WA on test 3? I followed the (alternate) strategy given in the editorial.

submission

EDIT : very dumb error

Here is the corrected implementation of the editorial approach, if it helps.

Corrected code

I have a different explanation for problem F , though the final approach is almost the same.

At each point we have two choices :

1) Make $$$a[i] = b[i]$$$

2) Make $$$a[i] = b[i]-$$$(prefix_sum up to $$$i-1$$$ elements in a[]).

So answer could have been $$$2^n$$$ except when both choices gives the same array.

When both the choices give the same array , prefix_sum of $$$i-1$$$ elements should be 0. This way I got 2 tasks , (1) Find the number of distinct arrays for first i elements : dp1 , (2) Find the number of distinct arrays for first i elements having sum 0 : dp2.

Then we directly have, $$$ dp1_i = (2*dp1_{i-1}) - dp2_{i-1}$$$.

To calculate $$$dp2_i$$$, we have one more observation that, when we do operation of type (1), $$$b[i]$$$ adds to the previous prefix_sum and for type (2), prefix_sum becomes $$$b[i]$$$. This tells us that, prefix sum of i elements in a[] will be a subarray of b ending at i.

So $$$dp2_i$$$ considers the smallest subarray of b ending at $$$i-1$$$ and having sum 0 (This is a standard task using hashmap). Let's say $$$[x,i-1]$$$. Then we take a unique subarray of $$$x-1$$$ elements, do a type 2 operation for x and do type 1 operation for elements in range $$$(x+1,i-1)$$$. This gives $$$dp2_i = dp1_{x-1}$$$.
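The two recurrences in this comment can be sketched with a hash map keyed by prefix sums of $$$b$$$. This is one reading of the comment, not the commenter's code; the name `count_hybrid_two_dp`, the modulus $$$10^9+7$$$ and the map `last_dp1` (prefix sum of the first $$$x-1$$$ elements of $$$b$$$, mapped to $$$dp1_{x-1}$$$ for the latest such $$$x$$$) are assumptions.

```python
MOD = 10 ** 9 + 7

def count_hybrid_two_dp(b):
    """dp1 = number of distinct arrays, dp2 = number of those with prefix sum 0."""
    dp1_prev, dp2_prev = 1, 1   # empty prefix: one array, and its sum is 0
    last_dp1 = {}               # prefix sum of b before position x -> dp1_{x-1}
    prefix = 0
    for value in b:
        dp1_cur = (2 * dp1_prev - dp2_prev) % MOD
        # Position x = current index can start a zero-sum subarray of b.
        last_dp1[prefix] = dp1_prev
        prefix += value
        # Shortest zero-sum subarray of b ending here starts at the latest matching x.
        dp2_cur = last_dp1.get(prefix, 0)
        dp1_prev, dp2_prev = dp1_cur, dp2_cur
    return dp1_prev
```

Because later positions overwrite earlier ones in `last_dp1`, the lookup always picks the shortest zero-sum subarray, as the comment requires.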

In problem C, I'm not getting how we're getting the no. of special pairs just by counting the possible no. of b, because can't there be different a's for a fixed b and a fixed k? If so, then how are they being considered in our answer?

$$$a = kb + k$$$.

In EDITORIAL Therefore, for any fixed k>0, the number of special pairs (a≤x; b≤y) is max(0,min(y,x/k−1)−k). Why we have subtracted k from min(y,x/k−1) and taken maximum of both to count special pairs???

Let's look at the four inequalities required for solving this question:

b > k (derived in the editorial)

1 <= b <= y (given in the question)

1 <= k(b + 1) <= x (derived in the editorial)

Modifying equation 3, we get

1/k - 1 <= b <= x/k - 1

1 <= k < b (derived in the editorial)

(Why k != 0? If k = 0, then a = k*(b + 1) = 0 but a >= 1, so the minimum value of k is 1)

Now from equation 1, we know that the minimum value of b is greater than k and from equation 2 we know that the upper limit of b is y.

So we get the following inequality if we merge equation 1, 2 and 4

k < b <= min(y, x/k - 1)

Now we got an open interval at the lower limit and closed interval at the upper limit, so the number of elements in the interval is Upper Limit - Lower Limit = min(y, x/k - 1) - k.

Now the value of k can exceed the value of min(y, x/k - 1) since 1 <= k <= sqrt(x) and in this case, we will get negative number of elements in our interval which is not possible. So if we get negative number of elements, in such cases it means we have 0 elements. That is why we must take max(0, upper limit - lower limit).

Example: If y = 2, x = 36, k = 6

min(2, 36/6 - 1) - 6 = min(2, 5) - 6 = 2 - 6 = -4.

.

a mod b = k, that is, k is the remainder when we divide a by b.

Now applying Euclid's Division Lemma we can write

a = bq + k, where q = $$$\lfloor{\frac{a}{b}} \rfloor$$$ = a mod b = k (Given in question)

Since k is the remainder, we have 0 <= k < b by Euclid's Division Lemma, or b > k.

Can you please explain why k is always less than or equal to sqrt(x)

$$$0$$$ $$$<=$$$ $$$k$$$ $$$<$$$ $$$b$$$ (By Euclid's Division Lemma)

Multiply both sides by $$$k$$$.

$$$k*k$$$ $$$<$$$ $$$k*b$$$

$$$=>$$$ $$$k^2$$$ $$$<$$$ $$$k*b + k$$$ (Since $$$k$$$ is non-negative, the RHS sum can remain same or increase but never decrease)

But $$$k*b + k$$$ $$$=$$$ $$$a$$$ $$$<=$$$ $$$x$$$ (Given in the question).

Hence $$$k^2$$$ $$$<$$$ $$$x$$$ or $$$k$$$ $$$<$$$ $$$\sqrt{x}$$$.

Now here we need to take equality in case $$$x$$$ is not a perfect square else you can drop the equality. This is because in case of non square number, we will be missing 1 number.

Thank You so much bro!

By that logic we can use equations 2 and 4 to get :

elements in the interval is Upper Limit - Lower Limit = min(y,x/k-1) - max(1/k-1,1) that should work right ?

I tried to calculate $$$Dp_i$$$ from bottom to top in problem E, but got wa on test 3. It works like this:

Where $$$i$$$ is the depth. The nodes in $$$stage[i]$$$ has the same $$$dis$$$ which is $$$i$$$.

$$$minn$$$ is the minimum of $$$a_i$$$ at the same stage, $$$maxx$$$ is similar to $$$minn$$$.

Could anyone explain that why it is wrong? The submission : 107250435

Thanks!

In Problem C, in the final expression, max(0,min(y,x/k−1)−k), Why are we subtracting k from min(y, x/k-1). Can someone please explain?

x/k gives a count of numbers which can be our b but we need a remainder equal to k, that's why we decrease it by 1 (think?), if it is greater than y, we can take any number up to y as our b, but wait.... we can't take any b which is less than or equal to our remainder, therefore we again subtract k from it.

Can anyone tell why this binary search solution for A failed?

Are you sure that f is a decreasing function? I think that it is a first decreasing and then increasing function. Ternary search works for this kind of function in O(log(n)). Submission

But I can't prove that f is a unimodal function!

I got it, my solution is wrong because f is monotonic.

You mean not monotonic?

No, monotonic, i.e. it is nondecreasing first then nonincreasing. If f(mid) = f(mid + 1) before the minima then I would get the wrong ans.

I think your definition of monotonic is wrong. Google this.

Yes, it is monotonic before the minima and after the minima.

AC Divide & Conquer solution

That's ternary search, not binary search, because you're looking at the slopes of adjacent elements. I'm not sure how to formally prove that the function is unimodal, but it's pretty easy to see intuitively.

Yep, but I split the search space into halves at each iteration. Also, the function is not unimodal.

It's still called ternary search, even though you're splitting the search space in half. See cp-algorithms for more detail. That's actually how I code ternary search if I'm not working with doubles (I actually did this solution in contest, so you can check my submission). Binary search only works with monotonic functions, but ternary search works with any unimodal function.

It is Binary Search. Yep, F is monotonic.

That example, is different, as you're not really searching on a function. You can read about monotonicity on boolean functions (functions used in binary search) here. If f was monotonic, it would mean that looking at a single value, it would be possible to determine whether to go to the right or to the left. Ternary search can essentially be viewed as binary search on the slopes of the function, instead of the values itself. That's what differentiates them. If you look at the slopes of unimodal function, and define $$$g(x) = f(x)-f(x-1)$$$, you can see that some prefix of a unimodal function will have some sign, and the suffix will have the opposite sign, which makes $$$g(x)$$$ monotonic, but $$$f(x)$$$ unimodal.

Btw, a function is unimodal if it first increases, then decreases (or vice versa). See here for more details.

Yeah, I said f is not unimodal since, you can see four local minima here.

Arguing if my approach is binary search or ternary search is self-contradictory. I should've mentioned it Divide and conquer in general.

Those local minima you mentioned are also the global minima, so it still satisfies unimodality (it's decreasing instead of strictly decreasing for that segment). Because they are in the same contiguous segment, I think general consensus is that they are still global minima. At this point, we're really arguing about if it's convention to call a function which is decreasing but not strictly decreasing monotonically decreasing, and I guess it doesn't really matter. In general for ternary search/binary search purposes, I would call it unimodal/monotonic because it's still possible to binary search/ternary search over the functions.

Shouldn't k <= root(x) for problem C be k < root(x)? If k^2 = x, then b = k-1, which is less than k.

I have a question: in problem C's editorial, once "b" and "k" are fixed, is the value of "a" determined and unique?

Yes

How can one prove the complexity of the O(n^2 log n) algorithm for problem F?

Thanks

$$$dp_i$$$ contains at most $$$1$$$ more entry than $$$dp_{i-1}$$$.

In problem 1485A - Add and Divide, BFS was fast and memory-efficient enough to get accepted.

107307717

Can someone explain the problem B editorial for the case when x is between $$$a_l$$$ and $$$a_r$$$? Specifically this part: "There are $$$(a_r - a_l + 1) - (r - l + 1)$$$ such values of $$$x$$$."

There are $$$a_r - a_l + 1$$$ integers between $$$a_l$$$ and $$$a_r$$$. Since $$$x \neq a_i$$$, you have to subtract the $$$r-l+1$$$ integers already in the array, in the range $$$[l, r]$$$.

Video Editorial for C

Hello. I've been trying to get "accepted" on problem C in Python3 but I get TLE while implementing the solution suggested here.

Here is the link to my submission: 107347518

I googled a bit and it turns out that the time limits don't seem to be language-specific, i.e. the same algo implementation might be passing in C but not in Python. link: https://codeforces.me/blog/entry/45228

Is this the case? Are some problems not meant to be solved unless you write in the intended language, or is something wrong with the submission I posted?

Thanks

Check the following update to your code.

107361634

The same update ran in PyPy 3.7 more than an order of magnitude faster than it did in Python 3.9.

107361941

Thanks for the update!

Yep, the code passes now at 1965 ms and we have a whole 35 ms to spare!

Yes, the speed difference between the Python interpreter and the PyPy compiler in running the same code is obvious.

There is an O(N) solution for F. Note that this solution uses a map. The solution is as follows.

Let f[i] denote the number of hybrid arrays of length i. Let g[i] denote the number of hybrid arrays of length i whose sum is 0. Given a[0], a[1], a[2] . . . a[i-1], we have two options for the i-th position:

- Place b[i].
- Place b[i] - (a[0] + a[1] + a[2] + . . . + a[i-1]).

There is over-counting when b[i] = b[i] - (a[0] + a[1] + a[2] + . . . + a[i-1]), that is, when a[0] + a[1] + a[2] + . . . + a[i-1] = 0. Thus f[i] = f[i-1]*2 - g[i-1].

Now how to find g[i]? Observations:

- The sum of a hybrid array 'a' of length i is always the sum of a suffix of array 'b' ending at i.
- Let j <= i be the maximum index where sum(b[j] . . . b[i]) = 0. Then for the sum of array 'a' to be equal to sum(b[j] . . . b[i]) = 0, we need two things: a[j] = b[j] - sum(a[0] . . . a[j-1]), and a[k] = b[k] for all j < k <= i. The array a[0] . . . a[j-1] can be anything; we don't care.

From these two observations we can say g[i] = f[j-1]. 'j' can be found for every 'i' by maintaining a map of prefix sums. 107307490

This is exactly the official solution, and it's $$$O(n\log n)$$$ because of the map.
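For anyone who wants to see the recurrence f[i] = 2*f[i-1] - g[i-1] in code, here is a minimal sketch in Python. It is not the linked submission; the name `count_hybrid` is made up, and the modulus $$$10^9+7$$$ is an assumption taken from the problem statement. The dictionary plays the role of the map of prefix sums (a hash map here, so expected linear time rather than $$$O(n\log n)$$$).

```python
MOD = 10**9 + 7  # assumption: the answer is required modulo 1e9+7


def count_hybrid(b):
    """Count hybrid arrays for b using f[i] = 2*f[i-1] - g[i-1].

    f = number of hybrid arrays for the current prefix,
    g = number of those whose sum is 0.
    last[p] stores f at the latest position whose prefix sum of b is p.
    """
    last = {0: 1}  # empty prefix: prefix sum 0, one (empty) array
    f, g = 1, 1    # the empty array is hybrid and has sum 0
    prefix = 0
    for x in b:
        f_new = (2 * f - g) % MOD
        prefix += x
        # Zero-sum arrays correspond to the latest earlier position
        # with the same prefix sum of b (0 if there is none).
        g_new = last.get(prefix, 0)
        last[prefix] = f_new
        f, g = f_new, g_new
    return f


print(count_hybrid([1, -1, 1]))
print(count_hybrid([1, 2, 3, 4]))
```

Only the latest position for each prefix sum is kept, because earlier positions with the same prefix sum are already covered by the "anything before j" part of the argument.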

Hello, can anybody explain div2-C to me? I'm not able to understand why k <= sqrt(x). Thanks in advance :)

In problem C (Floor and Mod), the count is max(0, min(y, x/k - 1) - k). Why do we subtract k from min(y, x/k - 1)?

$$$b > k$$$.
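A small sketch of the counting in Python may help here. It assumes the setup from the editorial of problem C: $$$k = \lfloor a/b \rfloor = a \bmod b$$$, so $$$a = k(b+1)$$$ with $$$b > k$$$, $$$a \le x$$$ and $$$b \le y$$$. The function name `count_special_pairs` is made up for illustration.

```python
def count_special_pairs(x, y):
    """Count pairs (a, b), 1 <= a <= x, 1 <= b <= y, with a // b == a % b."""
    total = 0
    k = 1
    # The smallest valid b is k + 1, which gives a = k * (k + 2).
    # Once that exceeds x, no larger k can contribute, so k stays below sqrt(x).
    while k * (k + 2) <= x:
        # b must satisfy k < b <= min(y, x // k - 1):
        # the upper bound comes from a = k * (b + 1) <= x and b <= y,
        # and subtracting k removes the values b <= k (we need b > k).
        total += max(0, min(y, x // k - 1) - k)
        k += 1
    return total


print(count_special_pairs(3, 4))
print(count_special_pairs(12, 4))
```

This also shows why the bound on k is strict: with $$$k^2 = x$$$ the loop condition $$$k(k+2) \le x$$$ already fails.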

Can someone please explain why my submissions are getting TLE? I already tried to optimize them, but it didn't work.

I'm a bit confused with where the factor of 2 comes from in B. Does someone have an explanation for this? Specifically, why it's $$$2((a_r-a_l+1) - (r-l+1))$$$. Thanks!

I think I just understood it. I'll comment it here just in case someone else had the same question:

Consider the array [1, 2, 4, 5] for k = 5. Let $$$x = 3$$$. This means that we could replace either 2 or 4. Similarly, $$$\forall x$$$ such that $$$a_l < x < a_r$$$ and $$$x \not= a_i$$$, there will be two options for us to choose from.

The expression $$$(a_r-a_l + 1 - (r-l+1))$$$ counts the number of such $$$x$$$s. We just have to multiply that by two since for each such $$$x$$$, there are two options.
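Putting the three cases together, the answer for one query can be sketched in Python as below. The function name `count_k_similar` and the 1-indexed `l`, `r` convention are assumptions for illustration; the term `k - a_r` for $$$x > a_r$$$ is the mirror image of the `a_l - 1` term from the editorial.

```python
def count_k_similar(a, l, r, k):
    """Number of k-similar arrays for the subarray a[l..r] (1-indexed).

    a is strictly increasing with values in [1, k].
    """
    a_l, a_r = a[l - 1], a[r - 1]
    below = a_l - 1                            # x < a_l: replace a_l
    above = k - a_r                            # x > a_r: replace a_r
    between = (a_r - a_l + 1) - (r - l + 1)    # a_l < x < a_r, x not in the array
    return below + above + 2 * between         # two neighbours can be replaced


print(count_k_similar([1, 2, 4, 5], 2, 3, 5))
```

For the array [1, 2, 4, 5] with k = 5 and the query (2, 3), the only value in between is x = 3, and it contributes 2 because either 2 or 4 can be replaced.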

Yet another chessboard, yet another problem to feel dumb about. I have recognised this pattern only once before, because I tried a few examples and it was convenient to try examples. Here there was one more step of disguise, it is even harder to check examples, and there are more paths that deviate from the intended solution. Still, I think for this kind of constructive problem we need to think of some function of the indices f(i,j).

"When there is a grid, it's a chessboard."