Hi.

On Wednesday at 9:05 CET / 8:05 UCT you can participate in the GYM version of the finals of 2017-2018 Russian Olympiad in Informatics (ROI), Day 1. And on Thursday there will be day 2, same time.

Links to GYM contets: day1 and day2.

5 hours, 4 problems, IOI-style scoring with subtasks. Statements will be available in three languages: English, Russian, Polish.

We wanted to use those problems in a camp in Warsaw so we had to import the problems to some system anyway. Then why not Polygon+Codeforces and thus allowing everybody to participate? Huge thanks to MikeMirzayanov for helping me with using GYM.

And credits to problem authors: Andreikkaa for Radium, Endagorion for Viruses, pashka for Innophone, Георгий Корнеев and GlebsHP for Quantum Teleportation.

Second day authors: cdkrot for Decryption, "jury" for Quick Sort, GlebsHP for Robomarathon, Endagorion for Addition without carry.

I will post a very short editorial in English here, after the contest.

For each column find the maximum value and for each row find the maximum value (and store their positions). This way we know the initial answer. When an update comes, check if it's greater than the previous maximum in this column/row. If yes and that previous maximum was previously suitable for extraction, mark it as non-suitable and decrease the answer by $$$1$$$. And increase by $$$1$$$ if the changed unit square is maximum in its row and column.

Sort buyers by $$$a_i$$$. For a fixed price $$$A$$$ of Innophone Plus, we know how many buyers buy this version, and we can sort the remaining buyers by $$$b_i$$$ and then choose such price $$$B$$$ that $$$countSmaller \cdot B$$$ is maximized, where $$$countSmaller$$$ is the number of remaining buyers with $$$b_i \le B$$$. If we sorted them by $$$b_i$$$, then $$$countSmaller$$$ is just the index in this sorted array.

We should consider $$$A$$$ increasingly and add new values $$$b_i$$$ to a structure that will tell us the maximum value of $$$index \cdot element$$$ in a sorted order. We can do that with square root decomposition. Before anything happens, let's gather all $$$b_i$$$, sort them, and split into buckets of size $$$\sqrt N$$$. When a new $$$b_i$$$ appears, we add it to a corresponding bucket and we rebuilt that bucket in $$$O(\sqrt N \cdot \log N)$$$ or without $$$log$$$ if you avoid sorting every time. When we want to find the maximum value of $$$countSmaller \cdot element$$$ in some bucket ($$$countSmaller$$$ is the number of smaller elements in the whole structure), let $$$x$$$ denote the number of elements in previous buckets and let $$$i$$$ denote the index of some element in this bucket. Then we want to minimize $$$(i + x) \cdot value = x \cdot value + i \cdot value = x \cdot P + Q$$$ for some constants $$$P$$$ and $$$Q$$$. This means we need a convex hull of lines for each bucket, and then we can binary search the minimum for some $$$x$$$ (the number of elements in previous buckets) in $$$O(\log n)$$$.

The total complexity is $$$O(n \sqrt n \log n)$$$. You can improve it to $$$O(n \sqrt n)$$$ if you sort by $$$b_i$$$ only once and then use it instead of resorting a bucket each time something new appears, and if you store a pointer to optimal element in each bucket instead of binary searching (a pointer can only increase because $$$x$$$ can only increase).

Let's say you are in the cell $$$(i, j)$$$. Find all processors $$$(i2, j2)$$$ that $$$i < i2$$$ and $$$j < j2$$$. Find the minimum distance to one of them. Lemma: among those found processors, it's optimal to jump to those that have that minimum distance. Otherwise, it would be not worse to make two shorter jumps.

For a subtask with $$$x_i \neq x_j$$$ and $$$y_i \neq y_j$$$, this gives us $$$O(1)$$$ possible jumps from each cell. You can run Dijkstra in $$$O(K \cdot A \cdot \log K)$$$, where $$$A$$$ is the complexity of big integers.

To make it work for the last subtask, create a fake vertex in the graph for the L-shape to which you can jump. Once you get there in the priority queue of Dijkstra, find all not-yet-processed processors in that shape. For that, you can use sets of positions of not-yet-processed processors for each row and each column.

Big integers can be done in a standard way to get complexity $$$O(\frac{N}{32})$$$ per operation, or you can use the lemma that the optimal path will have at most $$$O(N^{2/3})$$$ bits set to $$$1$$$, so you can store a sorted list of those bits (powers of two), and you can do an operation in $$$O(N^{2/3})$$$

$$$p = 1$$$ is easy, just check if the virus is completely safe in its initial cell. Let's focus on $$$p = 2$$$.

For a pair $$$(i, j)$$$, we want to check if the virus $$$i$$$ can get to the cell $$$j$$$ and be safe there, that is all viruses that would overpower him there can be killed.

Lemma: we can consider only cases where each cell is attacked at most once (except for the cell $$$j$$$ with virus $$$i$$$). Otherwise, if there are two attacks to the same cell, we can ignore the first one (if this virus should go somewhere else, he can do it from his initial cell).

For a pair $$$(i, j)$$$ (as defined earlier), the viruses are split into dangerous viruses that we want to get rid of, and the remaining viruses. We must check if "the remaining viruses" can take all the cells with the dangerous viruses. This can be done easily in $$$O(n^2)$$$. The total complexity would be $$$O(n^4)$$$.

To make it $$$O(n^3)$$$, let's fix the cell $$$j$$$ and then consider viruses in an order sorted decreasingly by strength in the cell $$$j$$$. Add safe viruses one by one. For each of remaining $$$N-1$$$ cell, maintain the position of the strongest safe virus. When a new safe virus appears (because we moved to the left by $$$1$$$ in the permutation), update the "positions of strongest safe virus" for all cells. We want to check if the currently considered virus in the cell $$$j$$$ is safe, that is he can get there in the first move, and all stronger viruses (dangerous viruses) can be killed by other (safe) viruses. For each dangerous virus, we check if he is weaker than "the strongest safe virus" in his initial cell~--- otherwise, that cell will always have a virus that is dangerous, and thus the current pair $$$(i, j)$$$ is bad.

Or you can use bitsets. For a virus in its initial cell, store a bitset of viruses that can kill him. You can get $$$O(n^4/32)$$$ or even $$$O(n^3/32)$$$ this way.

Second day tomorrow, same time.

Thank you for participation.

Let's describe a polynomial solution first.

Let's check if the answer fits in $$$k$$$ bits, that is, check if the answer is smaller than $$$2^k$$$. Go from bit $$$k-1$$$. If there are two numbers with 1 on that position, we finish and the answer is NO. If there are zero numbers with 1 on that position, choose the maximum of remaining numbers $$$a_i$$$ and increase it to $$$2^{k-1}$$$ (digit 1 here and 0's to the right).

This way we can go from higher bits, check if we can have 0 here in the answer (by running the check described above for remaining $$$k-1$$$ positions), and put 1 here if the check fails.

To make the above solution quite fast, use hashing or some string algorithm to be able to quickly compare suffixes (to be able to find the maximum $$$a_i$$$).

The idea for the intended solution is the following. Sort numbers decreasingly. Let $$$B$$$ be the maximum value of $$$L_i + i - 1$$$ in this array ($$$L_i$$$ is the length of $$$a_i$$$). The answer length is then $$$B$$$ or $$$B + 1$$$ (the latter one comes from chaning each number to the next available power of two). I would say that this idea comes from understanding the slow approach described above.

To check if $$$B$$$ is possible, we only care about critical numbers: those that $$$L_i + i - 1 = B$$$. We must quickly go to the next (biggest) critical number, say that its first digit 1 must stay untouched, and put the remaining suffix (without this 1) back in the array. That might change the ordering and thus new critical numbers will appear. We need segment tree of sorted suffixes to quickly go to the next critical numbers. Segment tree allows us to find a number with $$$L_i + i - 1 = B$$$.

One of possible solutions is greedy. Let $$$i$$$ be the position of the first element smaller than $$$a_0$$$. Find the position $$$j$$$ of the maximum element in $$$[0, i-1]$$$. Let the first segment be $$$[0, j]$$$. Repeat the process starting from $$$j+1$$$.

To get 80 points, we can insert each element to its position in $$$log(n)$$$ operations. To move the number $$$n$$$ to the end of the array, let's apply $$$S(i, n)$$$ where $$$i$$$ is the current position of this number. This operation moves the number to the middle of segment $$$[i, n]$$$. This way, in one operation we can halve the distance from a number to its position at the end of the array. So, in $$$log(n)$$$ operations we move $$$n$$$ to the end, and then we forget about that last element and we move $$$n-1$$$, and so on.

The last subtask is hard and it's worth only 20 points, so I think it's optimal to skip it during a contest. But let's see a solution.

Let's simulate the process from the end (then print operations in the reversed order). We start from the sorted permutation $$$[1, 2, \ldots, n]$$$ and we want to get the permutation from the input. The reversed operation changes a segmnet $$$[a, b, c, d, e, f]$$$ into $$$[d, a, e, b, f, c]$$$. Let's focus on element $$$c$$$ here. In one move, we can double the index of $$$c$$$ in the whole array, if we are able to choose a long segment with $$$c$$$ being the last element in the left half of the segment. If the index of $$$c$$$ is at least $$$n/2$$$, then we can get $$$c$$$ to the end of the array in one move! It still takes $$$log(n)$$$ operations to move an element from the beginning to the end, but most positions require only $$$O(1)$$$ operations. The array of costs is something like $$$[4, 3, 3, 2, 2, 2, 2, 1, 1, 1, 1, 1, 1, 1, 1]$$$, while previously it was $$$[4, 4, 4, 4, 4, 4, 4, 4, 3, 3, 3, 3, 2, 2, 1]$$$. The $$$i$$$-th element is the number of operations required to move an element from position $$$i$$$ to position $$$n$$$. The sum of costs is $$$2 \cdot n$$$.

The remaining issue is that maybe we first move one element from position $$$1$$$ to position $$$n$$$, then the next needed element is at the position $$$1$$$ and we must move it to $$$n-1$$$, and so on – we move each element from position $$$1$$$, so the number of operations is $$$n \cdot \log(n)$$$. To avoid that, we must shuffle the array first. Either apply several hundred random operations, or generate a random permutation $$$P$$$ and then first run the algorithm to change the initial permutation into $$$P$$$ and then $$$P$$$ into the sorted permutation. In fact, we must do the opposite because we simulate the process from the end. Anyway, this way organizers can't create a special malicious test against your solution.

The case $$$p = 1$$$ is easier. To minimize the place of robot $$$i$$$, we should put one active device there and nowhere else. Any other scenario gives all other robots the starting time (relatively to robot $$$i$$$) that is not-worse that in this scenario. We need to count robots that will be faster than robot $$$i$$$. Let's focus on counting those with index $$$j < i$$$ and then we can reverse the sequence and repeat the algorithm.

Robot $$$i$$$ will lose against all robots $$$j < i$$$ that $$$a_j - j < a_i - i$$$. To count such indices $$$j$$$, we can change each value $$$a_x$$$ to $$$(a_x - x)$$$ and then we have a sequence in which: for each element, we want to compute the number of previous elements that are greater. This can be done by renumerating values to range $$$[1, n]$$$ and then doing a segment tree, or by sorting values and then considering them from small to big, marking their positions in the array and using segment tree to know how many marked positions there are on the left.

Now we move to the case $$$p = 2$$$. To maximize the place of robot $$$i$$$, the intuition is we should put devices far away from $$$i$$$. If we put a device somewhere, it's usually better to move it away from robot $$$i$$$, so maybe we should put it on track $$$1$$$ or on track $$$n$$$. If we put a device in one of those two tracks, it doesn't make sense to put devices anywhere else. In the following scheme, 'x' denotes devices. The second way is only worse for us, because it improves the starting time of some robots by $$$1$$$, including robot $$$i$$$, so the place of robot $$$i$$$ won't get worse.

x _ _ _ _ _ i _ _ _ <--- version A x x _ _ _ _ i _ _ _

If we put one device on track $$$1$$$ and one on track $$$n$$$, the starting time of $$$i$$$ will be $$$min(i-1, n-i)$$$. We don't affect it by putting more devices near the further of two endpoints: $$$1$$$ or $$$n$$$. On the scheme below, the second way to put devices only helps other robots, while not improving the situation of robot $$$i$$$.

x _ _ i _ _ _ _ _ x x _ _ i _ _ x x x x <--- version B

It doesn't make sense to put devices even closer to $$$i$$$ because it wouldn't improve the situation of any robot relatively to robot $$$i$$$ (the difference between starting times).

Versions A and B are the only strategies we need to consider, plus version A has two possibilities (putting the only device on track $$$1$$$ or track $$$n$$$). We need to implement each of them to compute a possible place of every $$$i$$$ and then, for every $$$i$$$, print the maximum of three computed possible places.

In version A with the device on track 1, robot $$$i$$$ will be beaten by all $$$j$$$ that $$$a_j + j < a_i + i$$$. This is even easier than the case $$$p = 1$$$.

In version B, for each $$$i$$$, we need to answer several queries like "give me the number of indices $$$j$$$ in some interval with value $$$a_j - j$$$ smaller than $$$X$$$". Interval $$$[L, R]$$$ can be replaced with $$$[1, R]$$$ minus $$$[1, L-1]$$$. Now we need to answer only prefixes, so we go from left to right, extending the prefix one by one, and we need three data structures — one for $$$a_j - j$$$, one for $$$a_j + j$$$, one for $$$a_j$$$, to answer queries "count the number of values smaller than given $$$X$$$". You can actually use this for version A too.

If possible in your solution, use BIT/Fenwick instead of a segment tree to get the full score. You might get ~90 with slower implementation.

Wow, thank you very much! So you had translated those problems for the camp?

I translated from Russian to English, and then a few other guys did from English to Polish.

So, do you know Russian or just used google translate? If you know Russian, it looks like you would be eligible to participate in old versions of vk cup :) (But they may change rules)

So let's hope they raise the age limit.

I tried that in the first (I think) version of VK Cup, I was told "nope".

5hours and 4problems!

How hard the problem could be...

I remember that ROI's difficult as same as Div1B — Div1E (4 problems) if need to solve all subtasks per problem. Something like this

Can I ask why it is just a gym? Why didn't you do something like Codeforces Round 545 (Div. 2), some rating round?

Probably because there are some people who already know the problems and their solutions

The best preparation for an olympiad are problems from olympiads. Similar topics, same scoring format (subtasks). And people solve problems from CF anyway, like Junakson said.

Small mistake in Russian name of the contest

:D Sorry, the Russian name doesn't appear at all in the English interface of editing the contest. It's ok now. (The name was "Russian name".)

Day 1 starts in 10 minutes. Good luck!

Can you extend registration?

You can register at any moment of the contest.

Can we get the actual verdict?

I can change if for tomorrow to "complete" feedback whatever that means (does it show a test for which a solution fails?). Also, maybe it would be better to show the current leaderboard? I'm not sure.

I think the leaderboard should stay hidden. But that verdicts are really annoying — I don't know if it's TLE or WA. Anyway, it's not a strict problem.

Not showing TLE/WA on big subgroups is feature intended by problemsetters of original contest, not a bug. When you can submit without restrictions and penalties, many participants starts staring to submit a lot of different trashy hacks, instead of solving problems.

So, showing detailed reports on small subtasks, and binary report got score/not got score on big gives you quite a lot of information (most wa's will happen on small subtasks), while not inspiring incorrect and unproven hacks like "oh, lets check first 1000 possibilities, not all".

Side effect of this solution, is increasing cost of some kinds of errors like integer overflows or out of bounds, but we consider it reasonable. Anyway, if you are testing large timelimit, you probably should generate big test locally, and will see if it crashes or returning negative answers. If you do not, and just submitting random constants for testing — that is not behavior I want to inspire. Hardness of such tests is one more think which considered when desiding which result showing should be used.

Everything above should be threat as my personal opinion, not a official position of Olympiad jury. But I think reasoning of others are more or less same.

As I said before, that's not a big deal. The only think made me to ask this question was IOI rules thing.

And thanks for a detailed answer. I agree with your opinions.

Not showing proper verdict costed me not-getting-a-AC.

Someone can also say "not showing the editorial before a contest costed be not-getting-AC" ;p

But yeah, it's better to show the verdict if IOI does it.

I solved B with $$$O(N \sqrt{N})$$$ Time, but it turns out to be not enough for getting 100 pts (I submitted with various constants, but 84 was maximum)..

Sounds like a big constant factor. Organizers' solution: https://ideone.com/12D1zF.

In the problems 3, how can we prove that it is always optimal to jump to some nodes(i,j) from the node(x,y) that satisfied x<=i and y<=j, which means we don't have to concern about jumping to a node (i,j) where either i<x or j<y (or both) is satisfied. This claim is intuitively correct, but I couldn't get the exact proof.

By the way, thank you for preparing such a nice problem set.

It isn't true. You must consider all four directions (plus going to the next/previous cell in the same row/column). The lemma is: if you go from $$$(i, j)$$$ to $$$(i2, j2)$$$ such that $$$i < i2$$$ and $$$j < j2$$$, then it's enough to consider going to the closest such $$$(i2, j2)$$$ (to one of those tied at the minimum distance).

Yeah, problems are nice. Kudos to the organizers of ROI. I will list them tomorrow in the blog, when I have time.

If we have multiple processors having the same minimum distant from the current processor, do we only need to link to any one of them, or we have to consider all?

All. Have you read the editorial in the blog?

Yes, but I am kind of confused about the detail of the L-shape thing. Could you please explain a bit more?

My questions:

why is it an L-shape? for finding these processors have the same shortest distant I think it should be a diagonal or so.

what do we store and how do we find the vertices in L-shape?

Thanks a lot!

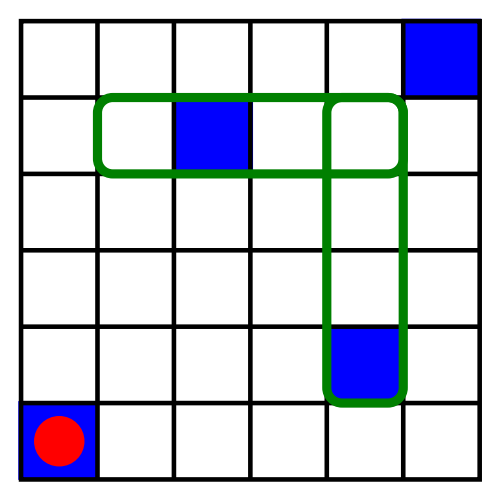

There is a drawing in the editorial. Each cell in the L-shape has the same distance to the current cell $$$(i, j)$$$.

If you want to find cells in such a shape, you can use sets of positions for each row and for each column. Or just iterate linearly over all $$$k$$$ cells and check which are in the shape.

Oh I see, I made a mistake.

I transferred Chebyshev distance to Manhattan Distance, And all I am thinking is with Manhattan Distance. Sorry for that.

Got it, thanks!

Oh, I see! I apologize for my misunderstanding.

Btw this greedy is very genius and amazing...(easy to understand but super difficult to come up with during the contest)

Problem B is quite similar to 436F

Day 2 starts in 10 minutes. This time with complete feedback.

Will the standings of Day 2 be available?

When will the tutorial for second day be published?

Three of the four problems are done. Robomarathon coming soon. Sorry for the delay.

Just as a reminder if you have forgotten to add it, but I would like to see the solution to the problem "Robomarathon". I don't intend to hurry you, but I would be grateful if you add some brief editorial about this.

Or does anyone know the solution? It seems it was a bit relatively easy one in this problem set, but I haven't got to the right answer even though it has passed 3 weeks after the contest ended. I've been distressed by this problem from morning till night for more than a half the month (note: contains some exaggerations). I guess many participants have already forgotten how to solve this problem, but would anyone help me get out of this restive circumstance?

For minimum place, only one start neer this robot should be used. For maximum — either everything with distance not less than closest endpoint (1 or n), or only other endpoint (could be proven by removing closest or adding furthest on other cases).

After that number of persons before us is some sets on (i, a_i) plane, number of points in which can be found using several sweeping lines.

Also, timelimit in this problem is quite tigh, so good constant is required for 100-point solution. Any nlogn should score 70+.

Even $$$O(n^2)$$$ solution can score 78-points or even 83-points if we optimize a lot :-)

I've just written a full editorial. Sorry for the delay.

It's bizarre that Pavel answered while I was writing it. After two months of you waiting...

Sorry again.

Thanks for all who taught me the solution! I really appreciate it.

I mentioned post in top (probably, because of your edits), and answered for unseen post. Even didn't saw it was 2 months ago :)

Can someone please tell me why this approach doesn't work for B — Decryption of ROI Day 2: Start from the first element in the sequence not already put in a segment, create a new segment and put every subsequent element such that it's larger or equal to the first element in this segment, and the maximum of all of the remaining numbers is greater or equal to the current maximum element.

I'm really curious why this gives WA, because at first glance it looks like it's optimal.

Hi Errichto, thank you for the great problem set!

It seems that subtasks in Day1 P4 are not well configured. My full solution with

assert(n <= 50)gets only 33 points. Could you please check this?You should thank the original organizers, obviously :D

Everything is ok with subtasks. Notice the dependencies. Subtask 4 requires subtask 2 to be solved so you need to solve $$$p = 1$$$ for all $$$n$$$ first. This is indeed quite strange but I've just checked in the original Russian statement that it should be like that.

That's a weird dependency :p Thanks anyway.

for the question Viruses, any idea why this passes. It seems to me like clear $$$n^4$$$