Hello Codeforces,

I will present today, and in any day in the future, a means to correctly calculate a corner case of the well known small-to-large trick, and how this can be employed to solve a set of certain problems. This blog is written with encouragement from the Month of Blog Posts initiative.

The standard example.

Let's try to solve the following problem: Cat In a Tree, BOI 2017. Although this problem can be solved employing some greedy algorithm, assume we have no idea what greedy is or how it could be correct, and revert to the basics:

The standard DP.

How can we formulate a DP on this problem? Well, what we need to keep track of is how can we select multiple nodes such that they are all at distance greater than D. When we are considering a correct solution on a subtree and we want to merge it with that of its brother, only one parameter is of importance: the distance of the closest chosen node to the root (As much as any other pair of nodes that traverse their common ancestor would have their length at least as long). And as this seems to be sufficient, we find that the following DP definition is sufficient: $$$dp_{\texttt{node}, \texttt{closest}} = $$$ the highest cardinal of any solution included in the subtree of the node $$$node$$$ such that the closest node chosen is at distance $$$closest$$$

The transition from the sons into their father would look like the following (for two sons $$$s1$$$ and $$$s2$$$): $$$dp_{\texttt{root}, \texttt{min+1}} =\max(dp_{\texttt{s1}, \texttt{min}} + dp_{\texttt{s2}, \texttt{x}},dp_{\texttt{s1},\texttt{x}} + dp_{\texttt{s2}, \texttt{min}})$$$ for any $$$x + min + 2 \ge D$$$ and $$$min \le x$$$

It is clear that the transition for more than two sons is made progressively by merging the current calculated dp in the root with yet another son. Furthermore, we can tweak the definition to represent instead the highest cardinal of any solution whose closest chosen node to the root is at distance at least $$$closest$$$ (i.e. perform suffix maximums). This will help reduce the unnecesary linear traversal of index $$$x$$$, leaving only $$$min$$$ to be iterated.

To sum up these observations in some comprehensive formula, let's rephrase the transition algorithm as follows:

- At first, we take the $$$dp$$$ array of a son $$$^\dagger$$$. We now assign $$$dp_{\texttt{node}, \texttt{closest+1}} = dp_{\texttt{son}, \texttt{closest}}$$$. We also assign $$$dp_{\texttt{node}, \texttt{0}} = dp_{\texttt{son},\texttt{D-1}} + 1$$$ (i.e. adding a new node to the solution).

- To further merge this $$$dp$$$ array with that of a different son, we assign: $$$dp_{\texttt{node}, \texttt{T}} = \max( \,(dp_{\texttt{node},\texttt{T}})^{v_1}\,,\,(dp_{\texttt{node}, \texttt{H}} + dp_{\texttt{son}, \texttt{T}})^{v_2}\,,\,(dp_{\texttt{node}, \texttt{T}} + dp_{\texttt{son}, \texttt{H}})^{v_3}\,)$$$ where $$$H$$$ is $$$\max(T, D-T-1)$$$. Then, adjust to preserve the $$$dp$$$ definition by setting $$$dp_{\texttt{node}, \texttt{T}} = \max( dp_{\texttt{node}, \texttt{T}}, dp_{\texttt{node}, \texttt{T+1}})$$$. (Markings $$$v_1, v_2, v_3$$$ are footnotes, not exponents)

Turns out that $$$\dagger$$$ makes this entire algorithm $$$O(N)$$$. $$$\,$$$

So who is this $$$\dagger$$$?

He is the son with the maximum height.

The reasoning for this better analysis than that of the standard small-to-large $$$O(N \cdot log(N))$$$ is the following:

First, we have to do the first transition in constant time. This can be done by keeping the $$$dp$$$ arrays for each node as some sort of deques $$$^1$$$. As such, we can just move the deque into the parent and add an empty entry at the beginning.

Then, observe how it is sufficient to do the second transition within proportional time to the size of the array of the son (i.e. iterating $$$T$$$ from the previously written recurrence within the limit of said size). This clearly makes sense for the correctness of transitions $$$v_1$$$ and $$$v_2$$$, but this also applies to $$$v_3$$$ because:

- if the size of the $$$dp$$$ array of the son we consider spans up to less than $$$\frac{D - 1}{2}$$$, then any other index we would try to update would call values within it that are at least $$$\frac{D - 1}{2}$$$ (as $$$H = \max(T, D - T - 1) \ge \frac{D - 1}{2}$$$). So, $$$H$$$ would always call values out of bounds in $$$v_3$$$, yielding then 0.

- if the size of the updating $$$dp$$$ array is greater than $$$\frac{D - 1}{2}$$$, then beside the now covered and fully considered cases of $$$T \le \frac{D - 1}{2}$$$ (where therefore $$$H = D - T - 1$$$), now remain the cases where $$$H = T$$$. In these, if then $$$T$$$ surpasses the bounds of the array, then the values will always yield $$$0$$$, therefore, both transitions $$$v_2$$$ and $$$v_3$$$ are redundant.

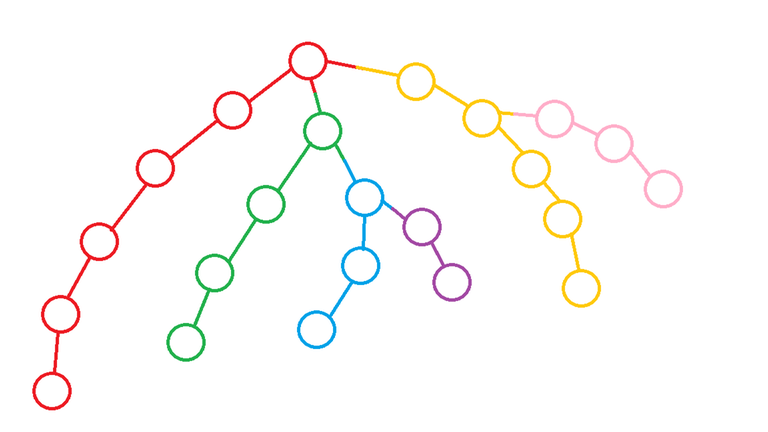

It remain to prove that this sum of sizes of $$$dp$$$-s of not-maximum-in-height sons will yield linear time. For this, I employ the following drawing:

The idea is that everytime we traverse through the second step, we are consuming some sort of potential value, equal to the size of the merged array. This potential increases by one everytime we employ the first step, by extending some succesive maximum height chain. So then, basically everytime we apply the second step, we traverse proportionally as many nodes as the HLD chain (formed by succesively continuing through the tallest descendant) of the son we transition from has.

Furthermore, second type transitions never increase the potential (because the height of the parent remains constant throughout). And then, every node on every chain corresponds to a single possible transition, and as such, every potential increment can only be exhausted once. Therefore, the complexity is $$$O(N - H)$$$, where $$$H$$$ is the unexhausted potential of the chain of the root, as no node on it (and for that matter the root) reports to a second type transition.

And that is the trick, folks.

Discussion on typical patterns

As I've marked in $$$^1$$$ and it kept on appearing, the required data structure for maintaining any sort of such $$$dp$$$ arrays is a deque. There are, however, some problems with that, arising from the fact that the deque allocates a significant amount of memory for a simple instantiation. As such, it has often happened to me that just declaring $$$10^5$$$ of them will be an instant TLE, i.e. a great overhead happening before even running a single line in main.

To reduce the risks of this happening, I suggest the implementation of this trick using a wrapper of a vector instead, similar to the one below, as all the operations we need require insertion from a single end:

Furthermore, such a data structure can employ a wide variety of operations, although they need to be generally held on suffixes, as much as one would always update a prefix (and suffix conglomerate values would only need to be updated on said prefix). Along with those, operations that imply lazy modifications on suffixes work as well, usually by pushing them further right whenever accesing a position. Hopefully to illustrate such operations better I leave the following problem:

Final Acknowledgements

I am unaware of a large variety of problems that employ this specific trick. Please let me know in the comments if you know of more interesting applications of it, if you've ever seen any. Here are some others that I do know of:

- Echidistant, Romanian Pre-TST struct a

- Car race, Balkan OI 2023

- CSES Fixed-Length Paths II

- Dominant Indices, thank you greatly estoy-re-sebado for the suggestion!

- Red-Black Tree, 2023 Asia Nanjing Regional and Color the Tree, 2022 Asia Nanjing Regional, the latter illustrating very well an application of lazy propagation on such structures and its power. Thank you bobbilyking for the valuable suggestions!

Also, many thanks towards spatarel for teaching me this trick 3 years ago, and to Andrei_ierdnA & tibinyte2006 for proof-reading.

As a reader, I read this. Much quality, very wow!

I really like this small-to-large technique, as it really shines a good light on depth-based tree DPs, which sometimes appear unexpectedly and must always be embraced! Not to mention, the complexity proof of this is really cool!

Also, yeah, don't use deque when doing this. Seriously. ESPECIALLY when n <= 1'000'000.

As a reader of your comment, much 2012, very wow.

Thank you! It was very hard to find english resource on this trick.

Sometimes, I'm really wondering how much "non-english" knowledge/resources exist out there that could be really useful to have...

All OI camps should spend 2 years teaching basic Chinese first

Thank you ntherner now I can solve problema Pisica from baraj 2024 😁

I just wanted to take a moment to appreciate the incredible work Valeriu has been doing on his blog. From the moment I stumbled upon it, I was hooked. His insights into competitive programming and detailed explanations of various algorithms and strategies have been a game-changer for many of us in the Codeforces community.

Whether you're a beginner looking to understand the basics or an experienced coder seeking advanced techniques, Valeriu’s posts are a treasure trove of knowledge. I particularly loved the proofs—it really helped me understand the reason it works.

Thank you, Valeriu, for your dedication and for sharing your expertise with us! Keep up the amazing work!

Who stole the password from your account? Or maybe you just forgot to log out? :)))

What are you trying to imply :(

orz

2023 ICPC Asia Nanjing Regional Contest, "Red Black Tree"

if you notice that the DP function is convex, you can do some kind of direct O(n) merging subtree DP like the one shown in the editorial

You can do a naive merging DP in O(n) by doing some kind of sweepline merge between any two dp states that only costs O(min_dep of children) or O(1) depending on if you have more than 2 children or not (do an optimization for when you only have 1 child since the transitions are obvious then). Then we resize the DP to size O(min dep of children) at every node.

You can show this achieves the same bound as the one you've shown, since we're doing not more operations per merge and resizing the DP array to be not bigger at every step than a "true" maxdepth DP.

In general, I think as long as you avoid doing O(max_depth) work at every node, your complexities will work out regardless of whatever scheme you pull.

There's also a thing where you store your "longest path decomposition" in a segment tree (similar to HLD), and it may or may not make the problem easier to work with. I found it from this blog.

I could only find one problem that might've benefitted from this, 2022 ICPC Asia Nanjing Regional Contest, but you could just do a normal path DP lol. But a lot of times people do weird things to achieve O(n) depth dps like compressing reversed prefix sums or whatever, here you can just pay an extra O(log(n)) to make the querying normal in a simple way.

i cannot find the english version of the blog...please help

"Longest path decomposition" does sound cool and tells you exactly which dp's get extended through the front/top! However, I couldn't find a situation where it's actually practical...

I could solve the problem you linked with just some lazy tags, which propagate from index to index+1 and are solved with RMQ in the given array (you can use sparse). I didn't find the need for a segment tree as part of the dp, if that's what you were talking about

Yeah often with these problems there's just a straight up O(n) way to do the DP :P

But if you take a look at the this solution for CSES fixed length paths, for example, the author's storing the prefix sums in reverse and then doing like unwrapping — rewrapping shenanigans to handle all the necessary operations in O(1), when I think you can just store them in a segment tree, pay log(n) and not think about any of that.

I imagine if your setup is convoluted enough, e.g. say you need arbitrary range max queries on your DP combines within the DP's themselves, you can force this "naturally".

Let's say you had to compute the maximum weight path for all paths with lengths between k1 and k2, where weight = sum of weighted edges (possibly negative) between two nodes, and length = # of edges between two nodes.

Is this still possible with just deques/vectors?

Ah, yeah; I figured that for stuff like min/max queries on depth, one would need a Segment tree, that's why I really liked your presentation of "longest path decomposition", which makes a lot of sense! And, of course, one could artificially develop a problem that can require more advanced stuff, like lazy propagation or maybe... sqrt decomposition instead of segment tree? However, it all relies on that decomposition, which is really cool!

As for fixed length paths... I'd personally rather do wrapping/unwrapping based on math and prefix sums than implement a segment tree on the longest path decomposition. (biasposting)

Once again, thanks for the enlightment!

I think this problem is relevant to the blog 1009F - Dominant Indices

how to build back the DP? i.e. after calculating the max number of nodes, each >=d apart, how to find an example set of nodes?