Hello, Codeforces!

UPD: Challenge is over. You can find results in this post

We are happy to invite you to an exciting online event: ICPC 2023 Online Spring Challenge powered by Huawei, which will start on April 13, 2023, 11:00 UTC.

In this Challenge, you will have a unique chance:

- to compete during 14 days online challenge

- to solve 1 exciting problem prepared by Huawei

- to win amazing prizes from Huawei!

As a special prize, Huawei together with ICPC Foundation will provide the travel trip to the 46th Annual ICPC World Finals in a guest role!

Everyone is welcome to participate. It is an individual competition.

ICPC 2023 Online Spring Challenge powered by Huawei (open to the public):

Start: April 13, 2023 11:00 UTC (UTC+0)

Finish: April 27, 2023 10:59 UTC (UTC+0)

We hope you'll enjoy this complex yet very exciting Challenge!

Problem

This time we’re delighted to provide you with a new challenging problem from Huawei — Buffer Sharing in Multi-Tenant Database Environment, which is prepared by Huawei 2012 Labs and Huawei Cloud Computing.

Nowadays everyone uses databases directly or indirectly. For example, databases of service providers store shopping records, transaction records, or ticket purchase records. During this challenge, you will work with the multi-tenant database, in which a single database instance is divided into multiple virtual sub-databases, serving different tenants of cloud service.

In such databases, different tenants need to be isolated from each other to prevent services from affecting each other. Therefore, buffer is considered as an important resource for management of large amount of data. When the amount of data in the database buffer reaches the maximum value, the database buffer evicts some data from the memory based on the eviction algorithm and loads new data pages from the disk. Your goal is to optimize sharing and isolation of database buffers in the multi-tenant database. While replacement algorithms considered in this contest be one of the core algorithms, we believe there are multiple approaches one can try here, and some crossover of the algorithms will also increase your chances to win!

Prizes

| Rank | Prize | |

| Grand Prize (Rank 1) | € 15 000 EUR + the travel trip to the 46th Annual ICPC World Finals in a guest role | |

| First Prize (Rank 2-10) | € 8,000 EUR | |

| Second Prize (Rank 11-30) | € 3,000 EUR | |

| Third Prize (Rank 31-60): | € 800 EUR | |

| TOP 200 Participants | Souvenir T-shirt | |

| * If the allocated Huawei Challenge prize cannot be delivered to your region for any reason it may be replaced by another prize of the same value (if no legal restrictions), at the discretion of the Sponsor. | ||

To be eligible for Certificates of Participation and to win prizes you are required to register at https://icpc.global/regionals/finder/Spring-Challenge-2022 by April 26th.

If you are an employee of Huawei, we invite you to participate in a competition in a special corporate scoreboard. To do this, register at the link in the secret Codeforces group. Please note that you must register even if you already have a Codeforces account, as this group has its own separate account system. Good luck!

Challenge Rules and Conditions

By participating in this Challenge, you agree to the Conditions of Participation and Challenge Rules. If you cannot access this document, please email [email protected]

Good luck, we hope this will be fun!

Update, as of April 27

Congratulations to all the participants of the ICPC 2023 Online Spring Challenge powered by Huawei!

As stated in the problem statement, the jury needs to test the latest non-zero score submissions on the final set of tests after the end of the contest. Results will be announced no later than May 1, 2023

If you registered for the Challenge by April 26th and submitted at least one response, an ICPC Certificate of Participation will be posted on your ICPC Challenge Team Record in the “Attachments” section by the end of May.

Update, as of April 29

Please ensure you register on ICPC.global using the same email as your Codeforces account no later than Fri, May 5th in order to be eligible for prizes and a Challenge certificate. If you have already registered on both platforms, please ensure your ICPC profile email matches the email used on Codeforces.

Update, as of May 1

We are glad to announce the completion of the ICPC 2023 Online Spring Challenge powered by Huawei and announce its results. Thank you very much for your interest in the competition. It was exciting for us to follow the results. We hope you enjoyed the challenges.

All tests are done, and we are glad to announce that the results in the Standings are final!

Congratulations to winners! Thanks again for your interest. We will be glad to see you in our future contests.

can i participate if i am a huawei employee

if no then the "Everyone is welcome to participate." is misleading

i think you can. just tell them to skip you for prizes

Yes, you can participate in a special corporate scoreboard. Added information about that to post

Could you please tell me how to register on this site ICPC global Spring Challenge to claim the prizes?

First you need to register on ICPC Global. Then follow the link and find your account by nickname or email

will it be rated??

No.

When I register on the site ICPC Global Spring Challenge and create a new team, it says "Person XXX is twice in team XXX (834853)!". Could you please tell me what's going on?

Maybe you have registered the same team before for ICPC. I faced the same issue and changing the team name worked for me.

You need to tick the box "I will be a coach and a participant" and do not enter yourself in the field of a team member

I don't see you in the list of participants on the site ICPC. Tell me your participation was confirmed (team confirmed or not)?

ICPCNews Can you confirm that it's intended way of registration?

Or maybe HuaweiChallenge can?

I have issues registering on the ICPC website too. My 'team' status has been stuck at the "Pending" stage, and under the "Your teams" widget, my team has a red exclamation mark with mouseover annotation "Eligibility issues". Is this an expected behaviour and am I supposed to just wait for the status to be updated to "Accepted"? I saw on the ICPC contest page that already 92 'teams' are listed. Thanks!

ICPCNews answer this question and above please

My status has become "accepted" and I'm now on their list of participants! chakred_namor hope your issue has been resolved too

How much tine it takes for being Accepted and where are the list of participants.

To see the list of participants, go to ICPC registration page and on the right side there is a "check out: Teams | Standings | Unofficial standings"

That's expected behavior. All teams will be accepted in closest time

Non-rated, right?

No rated contest in upcoming 2 weeks =(

There are now rated contests :)

for receiving prizes do I need to have any master card / visa card ? ICPCNews

Dear Nafis, the prize will be transferred to the bank account specified by the winner.

will the prize be paid in bitcoins?

How to bind the team in icpc.global to CF account? Use the same email to register?

Yes, same email address — the screenshot is from a previous Huawei contest

Is it enough to register in the codeforces contest to get a chance to win prizes? $$$\newline$$$ Is it necessary to register on the icpc website?

Yes, they mentioned that we have to register to be able to get a prize.

Which output is considered as Wrong Answer?

For example, if for query $$$x$$$ I print $$$x \bmod q$$$, why is it WA on all tests?

Well, I guess because of following.

Assume $$$Q^{min}_t = 4$$$. Assume I do following: let the $$$[1, 4]$$$ be the segment of tenant $$$t$$$, and for query $$$t \; x$$$ I answer $$$(x - 1) \bmod 4 + 1$$$. Then, if input queries are $$$[t \; 1, t \; 5]$$$, then I print $$$1 \; 1$$$, and its considered as wrong answer, because I could find another free frame for page $$$5$$$.

Edited

If you use some of the q slots for the first time, then you can use that slot only for the tenant of that first time, Also you may be unfulfilling the condition of Qmim and Qmax

"If you use some of the q slots for the first time, then you can use that slot only for the tenant of that first time".Why?

I misunderstood the problem. A tenant $$$x$$$ can evict a page of a tenant $$$y$$$ if $$$x$$$ has less than $$$Qmax_x$$$ occupied slots and $$$y$$$ has more than $$$Qmin_y$$$ occupied slots

Sorry in advance for grumbling and being too pessimistic, I really like that Huawei runs marathon-style challenges every year with great prizes, and don't want to discourage organizers from doing it in the future!

(Hope it is ok to post this message during the competition as it is absolutely unrelated to a specific problem).

But why do you never tell how the tests are generated? The task descriptions are usually very general, and it is impossible to solve them for all cases. So the only way to write a good solution is to guess how the input data is generated and what patterns it has. Guessing how authors generated data is not fun!

Probably you want it to be similar to real life, but in real life, you can do some experiments, log interesting events and analyze the data.

I think it would be much more interesting to solve the challenge if you could share test generators (similar to AtCoder Heuristic Contest or TopCoder Marathons).

Second this. Otherwise significant part of contestants' effort will be spent on black box experiments which is neither fun or educational...

Hi, thank you for paying attention to the Challenge. The tests, as well as details about their generation, are secret. The intention is to incentivize contestants to solve the general problem, and not a particular test set.

The solution is judged on your particular test set, not on the "general problem". So, we are solving a particular test set anyway.

It means that knowing some details about the input generation is a huge advantage (and it's necessary if we want to get a good rank). Unfortunately, if the tests are secret, the only ways to get some information about the tests are

Hence, the winners of the challenge will have spent (wasted?) a lot of time reverse engineering the inputs, which is not what you are trying to achieve.

The final score will be calculated by testing not on these inputs but on a different set

The final set is "similar" to the preliminary set.

In one of the previous challenges, I've found out by binary search that a variable in the range $$$[0, 1]$$$ was actually almost always in the range $$$[0.1, 0.2]$$$. Of course, binary searching single inputs is not so useful, but you may want to find out how frequently something happens over all the tests.

You get more information from a submit, namely execution time and memory consumption. Thus there are faster ways than binary search.

Ok, good to know. Anyway, I still think that secret test cases are annoying (and some people seem to agree). I would like to hear more feedback from other people.

In this case, the problem is it's too general.

Hidden testcase generation

Prevents analyzing the characteristics of the entire set of possible testcases

Prevents local benchmark with more testcases

Prevents pointing out the problem of current solutions and improve it by analyzing behavior for each testcase

Causes more unexpected results at the system testing

Look at AHC(AtCoder Heuristic Contests). Each of them has clear testcase generation methods, but I never feel "Oh no, it's a too specific problem!" Sharing the information about arguments of the utility function is a prerequisite of the problem.

I feel more explanation about testcases is needed to make it clear the situation we have to solve.

One possible solution would be to allow contestants who manage to submit a solution scoring above some threshold $$$S$$$ to contribute test cases themselves.

I second this. I kiiinda get organizers motivation and I don't want to say it's straight up bad decision, but not being able to reliably conduct any meaningful local tests was what swayed me away from engaging in this one

Now that the contest is over: testcases felt a bit like a guessing competition to me. Case 26 and 27 in particular felt very arbitrary. It turned out that you have to evict the most frequently used page here to score well. I don't see how hiding those cases could lead to a more desirable outcome for the organizer in terms of solution quality. You just submit random stuff and hope for a lucky hit that increases your score.

I'm a bit confused with score calculation. A quote from description:

Doesn't this mean that if I will use LRU with buffer of size $$$Q_t^{base}$$$ I should get $$$Cost_j=0$$$ and as a result $$$Score_j=500$$$ for all tests? Or $$$\sum Q_t^{base} > Q$$$ and it is impossible to have buffer of size $$$Q_t^{base}$$$ for all tenants? But then, if there is no upper bound on $$$\sum Q_t^{base}$$$, they could be arbitrary large to fit whole database for every tenant and then LRU or any other cache eviction strategy just doesn't make sense.

I am confused a bit in the number of faults in the 1st TestCase. Should it be 0 or 10. if we assume that initially the buffer is empty then the number of page faults would be 10, if not we could initially fill the buffer with the pages (1-5) of both users and number of page faults would be 0. Can someone please clarify this.

It depends not only on your number of page faults, but also on number of faults in the base solution (LRU). So if your SLA matches base answer, which is most likely also 10, you’ll get Cost=0 and Score=500

Yeah understood it, thanks , also your query seems valid then.

whiteapple is so strong!

Can you please do the same distribution for the last challenge?

Hello, could someone please help me to understand

what is the point of "3" factor in the $$$f_1(x)$$$ function formula?

How could it possibly affect the score calculation?

In the formula for $$$Score$$$ calculation we use cost divided by cost

(our cost divided by best baseline cost),

which means that the score should not depend on any constant factor in $$$f_1(x)$$$

Thus, it seems to me that $$$f_1(x)=3 \times x^2$$$ should result in same scores as $$$f_1(x)=x^2$$$ would.

Where am I wrong? (if I'm wrong)

Hello, in order to answer your questions in time, please log in to the competition question interface

https://codeforces.me/contest/1813and click 'ask a question'How can the problem "... is twice in team ..." be fixed while the registration process?

Am I able to get prizes if i'm not studying in university? During team registration it asks for a university name.

About prize money, that's not a problem. As long as you have a collection account and the account can be paid through the banking system.

To be eligible for Certificates of Participation and to win prizes you are required to register at https://icpc.global/regionals/finder/Spring-Challenge-2022 by April 26th. So, it's unnecessary to register there to get money prizes and I just have to write the contest on codeforces, right?

You can choose ICPC U as your institution in the ICPC System

is it possible to make a limit on the number of submissions at a certain time, because I see a huge queue of people who disrespect others

(EDIT: problem solved!)

I'm now getting "Runtime error on test 1". I suspected it's not really my code crashing (works fine locally), so I started my Rust program with:

And I still get:

Note that the sleep itself should take 200 ms. So I suspect there's a server issue. Is there someone who can check it?

15ms is a user (processor) time, but not a real time. Sleep increases a real execution time and doesn't affect the user time.

Try to replace 200 with a huge value: you should recieve something like "Idleness limit exceeded" (don't know the exact name of this outcome on CF).

Can I see other people solutions after contest ?

20 people in 200 points' difference... There must be a huge rank reorder after system test...

Some of these are super depressing.

Another half of them is quite uplifting though )

ICPC site says that my institution is not eligible for ICPC, can I set ICPC University instead? Also what is intended way to link Codeforces account with ICPC account? What exact time the registration ends?

Yes, please use "ICPC U" as your institution. There is no way to link the CF account to ICPC account, so all matching will be by email — please use same email in both systems

What if I have different mails for CF and ICPC?

How our codeforces account and our ICPC registration are supposed to be linked? Do we have to use the same email adress?

yes, same email address — as explained in the comment directly above yours ;)

If I register after April 26th, will I be able to get a certificate?(

Yes, but you need to do that before 5th May — that's deadline for registration.

Will there be a cheating check after the contest?

Funny, the two top contestants from North Korea had a jump of scores almost at the same time and have now very similar scores.

By the way, Codeforces/ICPC should have some strategy to prevent a handle from participating from multiple accounts (assigned to each of his family), just to get more prizes... And plagiarism detection is not enough...

BTW, one of them is known for getting top places in the previous Huawei challenges. But the second CF user was created just before this contest and this indeed looks a bit suspicious. Especially submissions' timing.

https://codeforces.me/submissions/whiteapple

| whiteapple | is invited to the reception: now explain how to get a score of 14246, which would require structure knowledge of testcases (e.g. pattern switching per tenant...), in just 1 try...

I think he can explain his solution correctly. Scoring 14k+ is huge, but it's quite clear he is a top scorer in the contest, whose main account is also present in the leaderboard.

The organizer should verify every top scorer's identity. There may be more like this account; maybe the top 2 (before the system test) are the same person.

Finding similarity through plagiarism will fail because they are top-rated, have huge knowledge about problem solving, and can solve the same problem in multiple ways.

I lost 30–40 positions in the last 2 days of the contest. That's a joke after all these efforts.

I can't figure out if I did everything right. Please tell me if my registration was correct.

ICPCNews, HuaweiChallenge, please answer. I'm really worried about it. I wrote messages to you to two emails, but did not receive a reply.

Yes, everything correct, your team was accepted — you can check that in list of teams

Can anyone share an algorithm which got >=14000pts in contest?

I can only do 13700 by dynamic swapping between LRU and LFU.

I hope after system testing they will enable the possibility to read others' solutions

They wont. I viewed previous contests and the solutions are unavailiable.

I couldn't get past ~12k by dynamically swapping between LRU and LFU :( I guess I should've tried this more.

Btw, MRU did really well on tests 26 and 27 for me. So maybe you could try dynamically swapping between all three of them (my final submission was exactly this, but ig my swapping strategy was really poor).

PS: Did you mean "second chance" LRU? Plain LRU didn't seem to be doing well enough for 13.7k.

I did some changes in LRU like adding Cnt/4 to the priority, which gives about 500 points.

I tried second chance LRU, but it worked worse.

I also used something similar to what nweek said to optimize tenant/algorithm choosing.

In the end I'm actually swapping between LRU, 70%LRU+30%LFU, 30%LRU+70%LFU, LFU algorithms.

Interesting. Btw, could you please explain the motivation behind your tenant-switching formula? I tried a bunch of different strategies (for example, ran all three algorithms in parallel; and then whichever algorithm performed well for the last 1000 queries, switched to that one) but none of them worked well. Now, looking at nweeks's formula, I'm lost as to how one would come up with that.

I was just looking for a formula to choose the right algorithm.

Soon found that the formula in the statement for score calculating can be calculated dynamically. So I choose the algorithm with highest score to use.

Then I found that each tenant is an individual part in the final score. By going farther in the formula I found that their effect on scores increase in a speed of w_i^2/w_std, which w_std is the std fail-count. I assume an evict gives same effect on different tenants, so the tenant with smallest w_i^2/w_std will be chosen.

It's actually quite natural : My first idea was to just take the person which minimizes the score given in the statement. Then I realized that taking the max was silly because we don't treat the case where our solution is doing better than expected. So I dropped the max and made sure the result was of the correct sign. Finally, I noticed that you may evict many times in a row someone that has a low score, but his number of faults doesn't change while you do that so you might increase the score a lot because of that. To counter that, I look at the ratio cache used / Qbase. If it's high then you can easily match LRU so you want to evict him more. If it's low, it's going to be hard to match LRU so you should evict him less. So I just divided by that ratio squared. I hope everything is clear now.

There are 3 algorithms that are actually useful : LRU LFU MFU (works for tests 26,27)

Taking the best one for each test case is enough, but you need a good strategy to decide between tenant. To decide between two different tenants my formula is : ((faults — faultsInSolution) / faultsInSolution)^2 * sign(thing on the left) * priority / (cache Used / Qbase)^2 This alone gives 14k (even 14400 I think) To gain the last points, you had to notice that in some tests, the structure was in blocks where two blocks of the same person are identical. I wrote a program that could see this case, and I ran belady's algorithm by looking at what happened in the past. This gives 500 on test 4 for example.

xdd

Yes I didn't really like that part of the contest. I had the same feeling when MFU passed, no one actually uses that as an eviction policy in real life. Just happened that some tests were very weird. I think I tried a dozen different eviction policies, at the end, only 3-4 were interesting. Other than that, there were some interesting ideas to have in that contest. But those ideas were barely enough for top 30.

I believe that it depends on where caching is performed. Because in the environments with repeated looping accesses something based on MFU actually may be useful.

Again, it's a pity that we hadn't any hints for at least which environment was simulated in the input data. Then there were more conscious work towards certain directions instead of trying random stuff or guessing/extracting input data characteristics.

P.S. Congrats with the impressive score, great job!

Learned a lot and now I am so curious about how you get 500pts in 4th testcase. Could you explain more about it? Congratulations to your great performance in this contest. XD

TC 4 is : 25% tenant 1, 25% tenant 2, 25% tenant 1, 25% tenant 2. Block 3 = block 1 and block 2 = block 4

To solve it, just use LFU during the first two blocks. Then use Belady's algorithm from what you saw in the first two blocks : basically evict the page that is gonna appear the latest. This gives almost 500. And it works on a few other test cases as well (3 TCs I think)

Thanks for your kindness. I hope this also works for the system test.

Again congrats!

Thanks !! very unexpected.

Just to clarify, did you select the best one for each test case per tenant or used same strategy for all tenants in that TC? And did you decide which one is best once at some point or did you continuously/periodically adjust which one is the best along the way?

Best one per test case per tenant. It dynamically changes during the test case. Basically I simulate all my strategies for each user on various buffer sizes between Qmin and Qbase. When I want to evict a tenant, I look at the best strategy on the cache size closest to what the tenant is actually using. So it can change many times

Let's define a few caching strategies: LFU, LRU, segmented LRU+LRU (those were mentioned in the statement), MFU (evict the most frequent one, testcase 26+27), some hybrid (page hits + 3e-3 * time of last hit), least frequent among the last few requests (I used 3 * tenant's current Q, but the factor doesn't matter that much).

You run each of these strategies on each tenant and keep track of what gets the most hits. This strategy then becomes your main strategy for this one tenant.

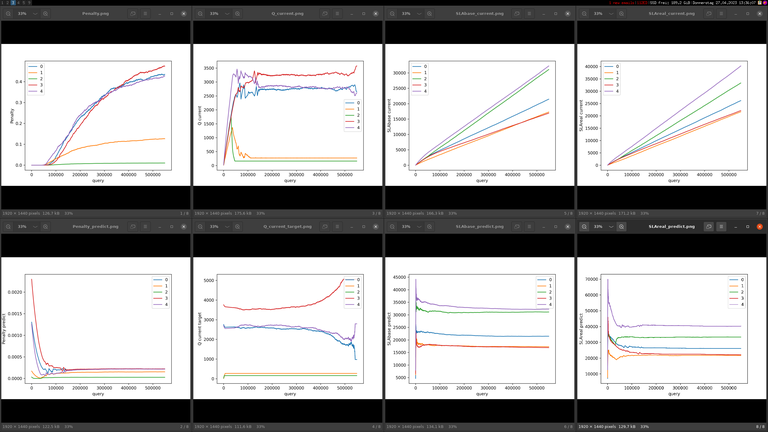

The decision which tenant to evict was rather painful, as whatever test I generated offline showed different behavior than submits on deciding whether or not a change was good. Here are some charts I made to see how close my prediction gets to the final SLA and such:

After some trial and error I settled on evicting whichever tenant minimized the Score() function below

Dynamic swapping between LRU LFU MRU and MFU )

can't wait for system testing

Harmony_'s solution is still running...(On test 29 now)

It has been ~1h for it to be runnning.

Hope that won't take another 1h in system test.

Has anyone tried using RRIP? If so what base did you find to be most useful? My sol switches between LRU, LFU, RRIP base 2, 3, 4 XD

By switching do you mean that you periodically look at the recent history and determine which algorithm would have minimized the miss rate (or another function) and you end up using just that one for the near future?

yes

are these the final results? i wanted a t-shirt

Dont know

Dear judges, when will the system test begin?

I think it will be better to have the first few tests open to learn generation patterns and don't spend a lot of time trying to get their structure through test system.

I forgot subscribe on ICPC's site. I will lost my T-shirt....

Hi! We updated blog post: you still can register on ICPC system. Please do that till May 5th

Dear organizers, Will Huawei recruit high achievers for this Challenge? And will this be somehow taken into account when applying for a job at Huawei? Perhaps you will offer some positions for the participants?

If so, what positions in the table will be considered?

Testing seems to have started.

Rejudge finished

Dear Huawei team, I would like to apologize for forgetting to register on the ICPC global website. Is it still possible to register now, or has the registration closed? If registration is no longer possible, would you consider opening it up again? Thank you for organizing this wonderful contest, and I appreciate your understanding in this matter.

We updated registration deadline: you still can register on ICPC system till May 5th

Dear Huawei team, I would like to apologize for forgetting to register on the ICPC global website. Is it still possible to register now, or has the registration closed? If registration is no longer possible, would you consider opening it up again? Thank you for organizing this wonderful contest, and I appreciate your understanding in this matter.

We updated registration deadline: you still can register on ICPC system till May 5th

From 60th place before systests, to 72th after. Systests did me dirty :|

"The final tests will be very similar to the current tests."

0 points on testcase 12 — but looking at others going up and down, I'm even somewhat lucky with that.

(the following takes best scores from before system tests into account, not the latest submission)

Can you please do the same for the last challenge?

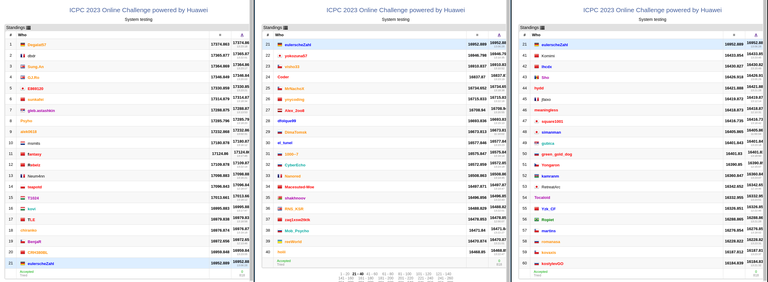

Sorry, I don't have the provisional standings in a machine-readable format this time. I can give you a screenshot however: The first place changed, but none of provisional top 10 dropped below the line. Most notable changes for top 30 are Alex_2oo8 dropping to 31st and T1024 taking a nose-dive from 15th all the way to 34th.

The first place changed, but none of provisional top 10 dropped below the line. Most notable changes for top 30 are Alex_2oo8 dropping to 31st and T1024 taking a nose-dive from 15th all the way to 34th.

Will upsolving be possible for this problem?

I forgot to register on the ICPC site. Can I still get the T-Shirt and the Certificate?

Please register on ICPC system till May 5th

Congrats to the winners. It seems the biggest ranking jumps (within TOP-60) after system testing, are on:

Dear Huawei team, I would like to apologize for forgetting to register on the ICPC global website. Is it still possible to register now, or has the registration closed? If registration is no longer possible, would you consider opening it up again? Thank you for organizing this wonderful contest, and I appreciate your understanding in this matter.

We updated registration deadline: you still can register on ICPC system till May 5th

Dear Huawei Team, I was curious about the criteria used for selecting the final submission for judging. I understand that the most recent submission was chosen, but I was wondering if it would be better to consider the submissions with the highest scores instead. After the ddl, I realized that I may not have submitted my best version in the last moment for judging, and I was wondering if there is any possibility for a rejudging based on the highest scored codes. I understand that this may be a personal oversight, and I apologize for any inconvenience or disruption caused!

I had a similar issue in one of the past similar competitions. I got score equal to about 7 instead of about 180000. They just ignored me...

Question to those with top scores. Here are some of my lowest scores: 0 pts on test 12, 220 pts test 58, 176 pts test 68. What are your scores there? I would guess those are MFU tests, although I did not come up with such a crazy idea like MFU xD. Just curious.

testcase 12 is definitely not MFU, then I wouldn't have gotten 0 points there. Case 58 (498 pts) and 68 (500 pts) might be.

Here are my points if you you are intersted :

1: Accepted [8018 ms, 204 MB, 390.421 points]

2: Accepted [7784 ms, 204 MB, 499.604 points]

3: Accepted [10077 ms, 200 MB, 499.786 points]

4: Accepted [9796 ms, 200 MB, 500 points]

5: Accepted [4102 ms, 196 MB, 498.741 points]

6: Accepted [3961 ms, 196 MB, 499.975 points]

7: Accepted [5038 ms, 198 MB, 479.644 points]

8: Accepted [5272 ms, 198 MB, 499.211 points]

9: Accepted [9874 ms, 418 MB, 500 points]

10: Accepted [9686 ms, 418 MB, 500 points]

11: Accepted [6520 ms, 325 MB, 407.035 points]

12: Accepted [6676 ms, 325 MB, 191.4 points]

13: Accepted [7066 ms, 478 MB, 491.979 points]

14: Accepted [7004 ms, 478 MB, 498.897 points]

15: Accepted [7238 ms, 204 MB, 398.07 points]

16: Accepted [7658 ms, 204 MB, 500 points]

17: Accepted [9360 ms, 200 MB, 498.216 points]

18: Accepted [10249 ms, 200 MB, 500 points]

19: Accepted [3993 ms, 196 MB, 498.542 points]

20: Accepted [3525 ms, 196 MB, 499.82 points]

21: Accepted [4944 ms, 198 MB, 469.806 points]

22: Accepted [5116 ms, 198 MB, 499.223 points]

23: Accepted [8704 ms, 418 MB, 500 points]

24: Accepted [8860 ms, 418 MB, 500 points]

25: Accepted [6349 ms, 325 MB, 433.091 points]

26: Accepted [7222 ms, 325 MB, 443.925 points]

27: Accepted [6676 ms, 478 MB, 464.605 points]

28: Accepted [6692 ms, 478 MB, 495.43 points]

29: Accepted [7128 ms, 204 MB, 399.969 points]

30: Accepted [7971 ms, 204 MB, 499.998 points]

31: Accepted [9687 ms, 200 MB, 495.194 points]

32: Accepted [9546 ms, 200 MB, 500 points]

33: Accepted [4180 ms, 196 MB, 498.746 points]

34: Accepted [4055 ms, 196 MB, 499.981 points]

35: Accepted [5584 ms, 198 MB, 484.41 points]

36: Accepted [5600 ms, 198 MB, 499.67 points]

37: Accepted [9219 ms, 418 MB, 500 points]

38: Accepted [9203 ms, 418 MB, 500 points]

39: Accepted [6801 ms, 325 MB, 406.276 points]

40: Accepted [6847 ms, 325 MB, 456.052 points]

41: Accepted [7082 ms, 478 MB, 496.187 points]

42: Accepted [6863 ms, 478 MB, 499.511 points]

43: Accepted [6988 ms, 479 MB, 491.886 points]

44: Accepted [6847 ms, 479 MB, 498.72 points]

45: Accepted [6691 ms, 552 MB, 500 points]

46: Accepted [6925 ms, 552 MB, 500 points]

47: Accepted [7253 ms, 568 MB, 481.945 points]

48: Accepted [7519 ms, 568 MB, 499.547 points]

49: Accepted [8689 ms, 594 MB, 491.156 points]

50: Accepted [9640 ms, 594 MB, 500 points]

51: Accepted [8018 ms, 392 MB, 490.947 points]

52: Accepted [8454 ms, 392 MB, 498.267 points]

53: Accepted [10358 ms, 392 MB, 499.746 points]

54: Accepted [9484 ms, 392 MB, 500 points]

55: Accepted [7175 ms, 479 MB, 477.443 points]

56: Accepted [8142 ms, 479 MB, 500 points]

57: Accepted [7346 ms, 479 MB, 495.26 points]

58: Accepted [8377 ms, 479 MB, 498.831 points]

59: Accepted [7628 ms, 551 MB, 500 points]

60: Accepted [7783 ms, 552 MB, 500 points]

61: Accepted [7097 ms, 568 MB, 487.06 points]

62: Accepted [6520 ms, 568 MB, 498.779 points]

63: Accepted [8407 ms, 593 MB, 494.716 points]

64: Accepted [9640 ms, 593 MB, 500 points]

65: Accepted [8985 ms, 392 MB, 494.104 points]

66: Accepted [8969 ms, 392 MB, 499.688 points]

67: Accepted [10701 ms, 392 MB, 499.548 points]

68: Accepted [9889 ms, 392 MB, 500 points]

69: Accepted [7877 ms, 479 MB, 469.424 points]

70: Accepted [7347 ms, 479 MB, 499.416 points]

71: Accepted [7362 ms, 479 MB, 490.507 points]

72: Accepted [7971 ms, 479 MB, 491.499 points]

73: Accepted [7160 ms, 551 MB, 500 points]

74: Accepted [7081 ms, 551 MB, 500 points]

75: Accepted [7300 ms, 567 MB, 493.652 points]

76: Accepted [8361 ms, 568 MB, 498.227 points]

77: Accepted [8767 ms, 594 MB, 499.923 points]

78: Accepted [9874 ms, 594 MB, 500 points]

79: Accepted [8641 ms, 392 MB, 478.288 points]

80: Accepted [8142 ms, 392 MB, 492.566 points]

81: Accepted [10202 ms, 392 MB, 499.665 points]

82: Accepted [9608 ms, 392 MB, 500 points]

83: Accepted [7284 ms, 479 MB, 477.289 points]

84: Accepted [7176 ms, 479 MB, 488.084 points]

I'm not quite sure what was test 58, other people that used MFU also got a low score so I'm not sure is that. Maybe I have a hidden heuristic in my code that works well in that case which probably made me win.

even my quick draft submission got more pts on Test#12, at least something to be proud of :D

12: Accepted [7191 ms, 36 MB, 292.464 points]

Your points are so BIG

I'm not top scorer but I got 456 pts on test 12. My solution is to find the tenant and page with the min cost.

For the cost of each page, I defined it as either 0 if the page doesn't appear for a period of time, or how much removing it will approximately affect the cost from function described in the problem.

Anyone know how the T-Shirt will look like? :)

You can see t-shirt from previous ICPC challenge in our social media accounts and in challenge video!

I wonder which data structures did you use for keeping LRU/LFU/… items ordered? I used regular STL

set, though probably my solution was not very optimized, I felt like having more than 2-3 strategies running concurrently could risk in exceeding allowed time limit. As I understand for each strategy there should be 2 copies of it: 1st — simulated/virtual/shadow, which runs as if it was the only one strategy used, 2nd — real/actual copy, that has same shared items among all real strategies. So introducing an additional strategy would require 2x additional time. (Please, correct me if I’m wrong and only one copy of a strategy is enough)Another small question is about LFU, which is often mentioned. Did you calculate frequencies only while item was in cache or even after it was evicted (total frequencies)? And if you ordered items first by frequency and second by time accessed, as there could be multiple items having similar frequencies, especially low ones. In that case seems like a better name would be like LFRU not just LFU.

For the data structure I used a segment tree. That allows you to get and update the minimum of an array in logarithmic time. I modified it a bit to also keep track of the index where the minimum value is located. An alternative would be a priority queue where you allow the same page to be entered multiple times and make sure that you have the most recent one on removal.

Regarding LFU, why not both: frequency over the entire run and over the last few requests only? Those can be two different caching policies that you compare against each other. I did sort by last access time as a tie breaker. LFRU would be a bad name because it's already taken.

Hmm, well as you can see from my color, I have very vague understanding of segment trees for now )) Is it really faster than a regular

set? You can get minimal element from it in O(1) and delete/reinsert it back in O(logN). Consideringpriority_queue— I'm not sure, why you might need same page multiple times there, but the main disadvantage of it for me (at least standard implementation) was that it seems impossible to delete an item from the middle from it (correct me if I'm wrong).Updated: Ahhh, probably understood, you meant instead of deleting item from pq, just inserting same page multiple times there.... okay. So will it be faster than a set?

Well, in my case because, I was limited by only 2-3 different strategies because of probably not very optimal implementation. Can you share how many strategies you had running concurrently in total (assuming there should 2 copies of each, right?)?

Basically the LFU question arose because people are using freely the same term LFU, that in fact could mean quite different things for different people. And often implementation details could separate top 10 from top 100.

It's probably faster than using a set, but the complexity is about the same (log for insert and update). I implemented everything with set using the same strategies as other described (dynamically swapping heuristics, in my case I used only LRU, LFU and MFU because adding additional did not impact the score significantly) and had no problems with efficiency (my code runs in about 7-8 s worst case).

My solution used a real caching strategy, 7 different virtual ones and an LRU with QBase for the score calculation per tenant. You don't need every strategy twice, you just build a new cache every time you chance strategies (using the current cache content and scoring of the strategy you want to use). The maximum runtime was around 11-12 seconds and I coded it in C# (which is a bit slower than C++), so I didn't really look for alternatives. You are right about time complexity in O-notation. It might still differ in a constant factor which is hidden in the O-notation (I really don't know how efficient or inefficient a C++ set is).

For the priority queue the idea would be to add a new page every time you change a score of an existing page and also have some lookup array to track the latest update time of each page. Then, when you dequeue, you make sure that the time matches your expectation (or you dequeue again because you got an outdated value). Therefore you don't have to delete from the middle, you just delay the deletion long enough that it becomes a regular dequeue action.

According to the rules I only have to grant Huawei a "non-exclusive license to [...] publicly display" my submission, so I think it's ok to share the code: https://gist.github.com/eulerscheZahl/9fd7ffe449f20fb49a1f972ef570e010

Thanks for explanation )

I haven't thought about having only one real strategy and rebuilding it when switching. Though in worst case, if switching strategies on every request, it could perform worse than if keeping real copies for each strategy, but in reality you probably don't need to switch them so often. So it could be faster... depending how often you switch.

And thanks a lot for sharing the code, I'll look into it more deeply a bit later. (from the first look it looks less messy than mine ))

For LRU, where you can insert items only to the end, I know that you can use just double linked list + hashtable. So all operations will be in O(1). But for other ordering criteria you need something more complicated/slow.

I used kinda-persistent wrapper of set and vector, to copy state to a strategy-class effectively. Allowed my to change strategies as much as I like. However, coping strategies so often is bad, because you do not consider longterm impact of the strategy.

Sounds a bit magical ) You still need to reorder all current items in set when switching, don't you?

For LRU, all operations can be done in O(1) with STL list and an array of list iterators. But for LFU, it won't be done in O(1) since we can't use pure LFU in this multi-tenant caching.

It seems some participants submitted few solutions but got high score. They probably generated local testcases and tested in local, I also tried but actually failed because all algorithms make similar results in uniform random testcases. Is there any good idea to generate a really good testcases that make us examine in local?

I don’t know how to generate, but you can use some real workload traces (for example see list of them here https://github.com/sylab/cacheus)

Stop ingnoring me, please. I registered for the Challenge by April 26.

You did not validate my team on the ICPC website and now I do not have a certificate in my personal account. Dear Jury, please correct this.

So far, nobody has talked about this issue. So I am making a comment.

Please remove the cheaters, and please try to find out group solvers (I don't know, but some of the contestant approaches in the contest time look fishy to me). There are some participants who just submitted 2-3 solutions and got 39k+. I am not 100% sure he is a cheater, but my mind says that I am about 99.99% sure. There may be some top contestant participate in this contest with multiple ids. And submit multiple versions of his probable solution. (I am not sure about my claim)

Most likely | whiteapple | will get away with it,

Behind the scenes :D -->

ICPC to whiteapple : what is the name of this other contestant

whiteapple : my mum

Unless ICPC does some identification interviews with the contestants, it wouldn't be easy..

Any idea why these contests always lack transparency? The ability to explore other solutions is such a great selling point of Codeforces.

Is the scoreboard final?

last time they made an additional blog post to announce the winners. the statement update says

Probably understood, last time they either did not find cheaters, or they did not choose cheaters from the list. If this time it will also be, then they are probably already used

Now it's final, see the updated announcement. No changes made to the rankings.

So why whiteapple haven't been removed from the score table? This account was simply created a few hours before the end of the competition. And If registration to the ICPC site wasn't extended, It wouldn't be able to win prizes. And it scored very high on the first try

I agree that this is a rather suspicious case. But I don't see any strong reasons to remove this participant from the standings. I can't just remove participants based on an atypical participation pattern. There are no signs of plagiarism or other strong arguments in the direction of violating the rules.

So why whiteapple haven't been removed from the score table? This account was simply created a few hours before the end of the competition. And If registration to the ICPC site wasn't extended, It wouldn't be able to win prizes. And it scored very high on the first try

devilcry I got to know that you are #61, man, you have all the rights to protest your missed prize. For me, at least, you are a winner :)

Yeah, I also tried to send an email to the organizer on April 28th and they replied that they would seriously investigate the matter, but they haven't gotten back to me whether they verified it or not, yet they published the final list on May 1st. At this point, without strong evidence, I had almost given up on defending my rights, because 'whiteapple' might be really strong enough to get a high score in one try or successfully avoid cheating by just slightly modifying the logic and the style of the code. Finally, I would like to thank codeforces and Huawei officials for their work on this case.

can anyone share the top-60 code? it's my code: https://github.com/MQN-80/Buffer-Sharing-in-Multi-tenant-Database-Environment (top-118), I use the derivative of SLA to choose the user, then every user's blocks are listed by LRU(improved by hot-cold zone), I want to konw how to choose the appropriate algorithm (LRU,MRU or LFU) based on the given data.

A robust way to choose the best algorithm for the given data is to simulate all of them, keep track of their hit rate, and switch to the best performing one.

Just curious, what's the biggest score anybody achieved without simulating multiple policies but instead following a single one?

The best I got with a single policy was 10878.186, using as queue priority this:

last_block_access_time + 10*TOTAL_MEMORY/TOTAL_QUERIES*block_frequency — 6*TENANT_CURRENT_ALLOCATION + 600*TENANT_PRIORITY

Good to know, thanks for sharing. I had a multiple single-policy solutions scoring in that range. And the last one which gave me ~12k on the preliminary data and was my final solution. So disappointed that I discarded idea of trying multiple policies simultaneously :(

Yeah. I switched to multiple policies in the last 2 days and got close to 14k, in time to get top 60 happily, but I wish I did it sooner. Spent too much time insisting and researching on state-of-the-arts algorithms...

Same here. I'm reassuring myself that at least I learned something new that way...

11800 without using parallel LRU, 12500 with.

LRU parallel LRU?

Any info on how to recieve prizes?

Have you received any info since your question? I'm also waiting for Huawei to contact.

Still no info. ICPCNews HuaweiChallenge can you help?

Dear participant, We are waiting participant list from Codeforce platform. As soon as we receive the list, we will contact you immediately. Thank you for your understanding!

Dear Huawei I'm not quite sure which list you have in mind.? Would this one work for you: https://codeforces.me/contest/1813/standings ?)

They probably mean an "internal" list with more information that is not necessarily public, such as email address so they can reach out to us.

MikeMirzayanov, can you please tell how long the wait is?

I didn't participate in the contest, but I think the participants are very modest to ask for it themselves:) Dear MikeMirzayanov, I think you forgot to send the e-mails plate to Huawei. I think all participants are very grateful to you for organising such a contest, which was obviously not easy to organise. Please send them the details, my friend is very worried :) Thank you for everything Mike, have a nice day!

I received an email regarding the reception of the prize yesterday. Apparently they finally received the list from Codeforces.

I didn't :(

I hope I haven't messed up anything (ō_ō)

I didn't get one either(

Did the letter say only about the T-shirt?

When will the t-shirts be shipped?

got it!

I have received a phone call asking for money for custom clarence. Is it required to provide any fee for the t-shirt?

I'm from Bangladesh.

I guess it's enough time passed to ask what's up with my T-Shirt and when I will receive it?

CC: ICPCNews HuaweiChallenge