Привет, Codeforces!

В 16.12.2022 17:35 (Московское время) состоится Educational Codeforces Round 140 (Rated for Div. 2).

Продолжается серия образовательных раундов в рамках инициативы Harbour.Space University! Подробности о сотрудничестве Harbour.Space University и Codeforces можно прочитать в посте.

Этот раунд будет рейтинговым для участников с рейтингом менее 2100. Соревнование будет проводиться по немного расширенным правилам ICPC. Штраф за каждую неверную посылку до посылки, являющейся полным решением, равен 10 минутам. После окончания раунда будет период времени длительностью в 12 часов, в течение которого вы можете попробовать взломать абсолютно любое решение (в том числе свое). Причем исходный код будет предоставлен не только для чтения, но и для копирования.

Вам будет предложено 6 или 7 задач на 2 часа. Мы надеемся, что вам они покажутся интересными.

Задачи вместе со мной придумывали и готовили Адилбек adedalic Далабаев, Владимир vovuh Петров, Иван BledDest Андросов, Максим Neon Мещеряков и Алексей shnirelman Шнирельман. Также большое спасибо Михаилу MikeMirzayanov Мирзаянову за системы Polygon и Codeforces.

Удачи в раунде! Успешных решений!

Также от наших друзей и партнёров из Harbour.Space есть сообщение для вас:

Harbour.Space University в сотрудничестве с Noventiq, ведущим мировым поставщиком решений и услуг в области цифровой трансформации и кибербезопасности, предлагает возможность работать и учиться в Барселоне специалистам по данным. Мы стремимся распределить стипендии для интенсивных учебных программ на самом высоком уровне для подходящих кандидатов, которые смогут присоединиться к нашему путешествию.

Кандидаты будут работать над выполнением следующих задач:

- Изобретение и внедрение подходов к решению задач компьютерного зрения и машинного обучения, формирование требований совместно с командой;

- Планирование экспериментов, обучение моделей, оценка их качества и встраивание в пайплайны;

- Работа с данными, формирование ТЗ для разметки;

- Регистрирование результатов обучающих прогонов моделей и отслеживание динамики их выполнения;

- Написание алгоритмов пре- и постобработки изображений и видео, логики сценариев обработки медиаданных;

- Проведение исследований в области компьютерного зрения: классификация, обнаружение, сегментация;

- Оптимизация нейронных сетей: дистилляция, квантования, обрезка;

- Подготовка моделей для производства;

- Проведение разработки пользовательских алгоритмов и модулей нашей платформы видеоаналитики.

Все успешные кандидаты будут иметь право на получение стипендии со стопроцентной оплатой обучения (22 900 евро в год), предоставляемой Noventiq для Data Science.

ОБЯЗАТЕЛЬСТВА КАНДИДАТА

Обучение: 3 часа в день

За год обучения вы завершите 15 модулей (длительность каждого 3 недели). Ежедневная учебная нагрузка составляет 3 часа, плюс домашнее задание, которое нужно выполнить в свободное время.

Работа: 6 часов в день

Погрузитесь в профессиональный мир во время обучения. Вы будете учиться у лучших и с первого дня сможете применять свои новые знания на практике.

Требования университета

- Степень бакалавра в области математики, статистики, науки о данных, информатики или аналогичных областях

- Знание английского языка

Требования к работе

- Экспертные знания и опыт в Python, а также в TensorFlow/PyTorch;

- Опыт внедрения моделей углубленного обучения для коммерческих проектов;

- Опыт решения реальных задач в области компьютерного зрения;

- Опыт работы с Linux OS, Git, Docker;

- Понимание принципов работы современных популярных архитектур нейронных сетей;

- Владение культурой проведения экспериментов, вы знаете о воспроизводимости и протоколировании, можете объективно оценить качество модели;

- Владение испанским языком;

Удачи в раунде,

Harbour.Space University Team

UPD: Разбор опубликован

Auto comment: topic has been updated by awoo (previous revision, new revision, compare).

ScreenCast

highly excited as this is my first participation as a expert

12/15 to 12/19 contests 5 combo lol

Love awoo.

Hoping for a positive delta. Either the codeforces problem pattern has changed or I have become too weak.

So .. how was it ??

will improve today.

Hope to return expert after this one! Educational rounds can't let down, so i will do my best!

I wish to return zero Contribution .. ~~~~~ Upvote pls ~~~~~

ok downvoted

ok upvoted

nowadays people get upvotes just by asking, and downvotes without even asking

Hope to get to CM!

Looking at your rating curve, doesn't seem like you aren't already CM!

I don't have any hopes from Educational rounds now as I mostly get -ve delta , I don't know why I mess here only ??

Upd :- After the contest I know why, it is because due to sudden high difficulty jump between problem and it become more like how quickly you solve the easy ones !!

Noticing and thinking about these trends will only make it worse. Good luck

Well if you look at my profile you will find I don't care about it :) .

I look forward to solve the problem as many as I can ,rating can be regained!!

Each test case consists of four lines. The first of them is empty.

This ruined my contest :)

[DELETED]

Weak samples :(

And C>>D!

Lets hope pretests are not weak. _/\_

What do you even mean by weak samples? Samples arent meant to cover every heurestic solution you could think of.

I don't mean it should cover all corner cases. I just want it to show huge bugs in my code(completely wrong ones)

Samples should probably just be good enough to understand the problem statement,

Problem C or Problem Me?

It was tricky to find the cases when answer is 0, but otherwise the problem is a straightforward DP. I dunno why so many people didn't think of DP.

DP[n, any] = 1DP[i, j] =number of ways to complete string fromionwards, andjis the index of start of the current continuous digits.So many people solved D (including me) without proof by just writing down some cases and using intuition or guessing.

I found the cases where the answer is 0 easily (merge overlapping 1 subarrays, and then check if any 2 array is contained within a 1 subarray), but couldn't deal with overlapping 2 subarrays...

I wrote similar thing in

O(n^4)lol 185523269It could be done in $$$O(n^2)$$$, and that's mainly because you have to sift through $$$O(n^2)$$$ values in the input. For each row, only the rightmost 1 and the leftmost 2 matter. All other values can be ignored. Once you store the corresponding indices, you can easily partition the range into runs of 1-subarrays, and then check if any 2-subarray is contained within such a range (this part can be done in $$$O(n\log n)$$$ time even, though the $$$O(n^2)$$$ approach is much easier).

I am literally you ;_;

The proof is somethong like this. If you can find x '1', then the player with number t must have his power >= 2**x(Its quiet easy)(And it easy to costruct an example). Similarly for 0. It is also obvious that if x and y fit, then any between x , y also fits. This is where the answer comes from.

Still, I can't think of proof why any between x and y also fits, I wrote some brute force to verify if that's true.

Ok, if there are L people less than x, and R people greater than x. Then you pair x with either people from L and R (depending on s[i]) You can pair other people however you want to, like if L = R and s[i] = '0' you can be left with only L's or L= L/2 and R= R/2 or anything in between

what's up with those div1+div2 contests ...

Good question, but this one isn't a 1+2.

What's up with those div2 contests ...

I dunno, but somehow everyone solved $$$D$$$ including me :D

what was the idea behind it

someone used my account and posted this comment!! sry

Problem C: Wrong answer on test 6. Everyone is waiting to know test 6 after the contest ends.

I got the same result and then refactored the code and tweaked dp state then it just magically worked. Have no idea what the error was, lol.

Damn in problem C. D is quiet easy

Спасибо за отличный раунд, расстановка по задачам мне очень понравилась. Теперь я еще более убежден что едуки это не спидфорсес.

Простой решай Е, лол. И не будет спидфорсес

Btw, there is none information about problems being in difficulty-increasing order and ICPC-rules do not provide this as well, so i think it is just a lesson. Skip a task, if you considered it as difficult, to read next problems, maybe they are more suitable for you

Why was it decided for C to be placed before D?

I spent over an hour on C without reading D yet, before I noticed the Dashboard having way more D solves than C, which got me to read D and solve it very quickly. I know it's my fault for not reading all problems near the start (but in my defense, figuring out how to deal with 1s was relatively straightforward, so I implemented that first before agonizing over overlapping 2 subarrays), but I can't understand why anyone would consider C easier than D...

was D based on divide and conquer qpproach I was going in that direction please provide a hint

observation + formula

The implementation involves very simple techniques (simpler than divide-and-conquer even) once you figure out the key observation (which is the hard part).

Hint: if there are exactly $$$x$$$ 1s in the string, what is the lowest possible skill level of a potential champion?

How do you prove that those people who are potential champions can win in a curtain situation?

Honestly, I still think that C is easy. I wasn't sure about it being much easier than D (although I still thought C < D), but I wasn't expecting the solve count of D to be higher. It was a misevaluation of difficulty on my end, C seemed pretty standard to me, and D actually required some thinking.

Maybe we have seen lots of problem in tournament like D, so the formula are easy to get. But when I try to solve C, the $$$1 \leq n \leq 100$$$ leads to many possible algorithm. I tried DP, making a graph, brute count, but still failed.

My guess is that the "easy" solution of C requires thinking about the problem from a very different angle, which doesn't have to deal with the hell of multiple overlapping 2-subarrays. I still have no clue what the supposedly easy solution is, though, but I am skeptical of whether it would be a natural approach to think of without considerable experience in its area.

Suppose we fill the string from left to right; when we place the $$$i$$$-th character, let's check if any segment ending in the $$$i$$$-th position has its constraint violated.

What information do we need to know to check if any segment ending in the $$$i$$$-th position is violated? In fact, all we need is the position of the last 0 and the last 1 before the $$$i$$$-th position (including this position itself).

This leads to a dynamic programming solution $$$dp[i][j][k]$$$, where $$$i$$$ is the number of characters we placed, $$$j$$$ is the position of the last 0, and $$$k$$$ is the position of the last 1. The straightforward implementation works in $$$O(n^4)$$$, but it is possible to optimize it to $$$O(n^3)$$$ or $$$O(n^2)$$$ — for example, by noticing that at least one of the states $$$(j, k)$$$ is equal to $$$i$$$, and maybe changing the dp to something like $$$dp[i][x]$$$, where $$$x$$$ is the position of the last character different from the $$$i$$$-th one.

Are you sure this is easier than printing all numbers from 2^a to 2^n-2^b?

Depends on the actual participant. For me it was easier. For some other folks in the comments too. For more than a half of participants it seems harder, but not for all of them.

Putting C before D was a honest mistake on my part. I wasn't trying to deliberately mess up the order, I just underestimated one problem and overestimated another one. I am sorry for that, but I think many people could make the same error with these two problems.

On the other hand, I don't think it is an issue if the problems are not arranged in sorted order of difficulty in the Educational rounds, as all problems have the same points. Participants can always see the solve counts to get a rough idea about the difficulty of problems.

Complaining about the order of problems makes sense in the case of non-ICPC style contests in which scores are usually assigned based on the order of problems.

my way of thinking when I was solving:

If problem asks number of ways to do something, it most probably DP.

If there are multiple segments ending at same position r with value 1, then we can remember only longest one. because to satisfy all of them we should satisfy longest one.

If there are multiple segments ending at same position r with value 2, then we can remember only shortest one. Because if there are two different bits in shorter segment, then they are also two different bits in longer segment.

For each r position there are two values (segments). how far earlier is same bit, and how near below should be different bit.

Key insight: information about last length of same bit is enough to figure out that: 1) we have enough same bits. 2) where is previous different bit.

There is no next idea, just solution

I know what do you mean by multiple overlapping 2-subarrays ...that was really painful

To me C was easier, I started to write it almost straight ahead. But for D I had to struggle. My solution comes from

idea that every permutation can be transformed in a way that in each pair first one wins. Thus, we can make indices for all people, and they outcome of tournament will be:

Then, just make oriented graph, run thing similar to topsort from vertex 0 by direct edges. and run same thing (similar to topsort) by reverse edges from vertex 0. This will be number of vertices required to be below and above. Then, just guess, that you can get everything in between, without proof.

i did not even solve D completely, i made a guess and it worked.

So you people Judge difficulties with just 1-2 people ?

It explains why recent Edu's are unbalanced

My way is observation and finally finding patterns.

It toke me near an hour to figure out what D mean :(

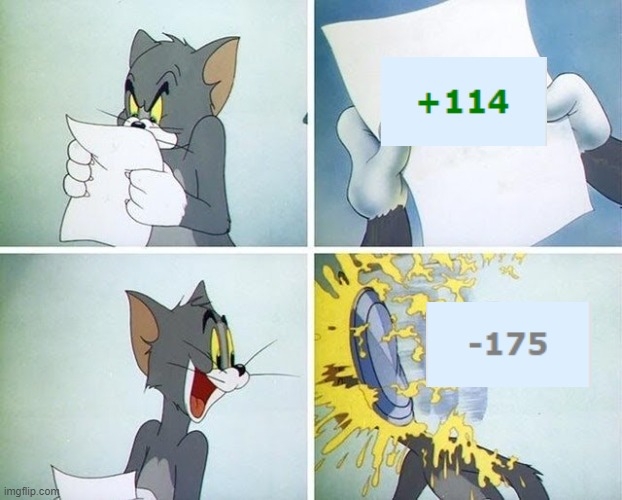

Avg edu round be like:

Swap(c,d) 🗿

Corporate needs you to find the difference between these two pictures

left picture right picture

They are the same picture

Arrays.sort(problemSet);

Oh no, it just TLed on test 192

Maybe including some blue/cyan in the testers team would help to notice the difficulty of problem C to average div2 contestants

Please do not put a (seemingly easy) hard problem at C

Wish I've solved D first

The problem C is harder than I think. And why the D is easier than C?

Best fkn contest of my life!!.Kudos to the authors for this round!!

I have doubts regarding the solve count of D.

I have solved C but no clue about D.

Same, C is very simple and clear in how to approach it

But I have no idea for D

I think most people solved D by sheer intuition and case examining. I did.

The order of contests does not matter

https://codeforces.me/blog/entry/110154?#comment-981086 among us

For D:

Let $$$f(t)$$$ be the minimum skill level of the champion when there are exactly $$$t$$$ 1s in the string. The value of $$$f(t)$$$ also indicates the minimum number of people with skill level lower or equal to the champion.

Consider the last 1 in the string. Let's say the champion X beat player Y in this round. Before this round, there were $$$(t - 1)$$$ 1s in the string. X and Y can both be considered champions in their sub-tournaments before round $$$n$$$ and these sub-tournaments do not overlap. So there are $$$f(t - 1)$$$ people in X's tournament with skill level $$$\leq X$$$ (including X) and $$$f(t - 1)$$$ people in Y's tournament with skill level $$$\leq Y$$$ (including Y). Since $$$Y < X$$$, both groups are included in $$$f(t)$$$, which means $$$f(t) = f(t - 1) + f(t - 1) = 2f(t - 1)$$$.

This is a very simple recurrence relation, made even simpler by the trivial observation of the base case $$$f(0) = 1$$$, leading to the obvious solution of $$$f(t) = 2^t$$$.

The upper bound is symmetric, but do be careful of mapping to the correct ID offset ($$$t$$$ 0s means the last skill level is $$$2^n - 2^t + 1$$$).

Problem E is just realizing that the graph formed by colors as nodes and edges when colors are adjacent for some platforms, and finding the minimum cost vertex cover for the graph.

The minimum cost vertex cover is equivalent to maximum cost independent set, which is equivalent to maximum clique on the inverted graph, which can be learnt here in Problem E editorial

Sadly couldn't debug my program in time.

it didn't help me. because even though I realized we need maximum clique, I have no idea how to find it in reasonable time. (plz don't spoil).

It's in the link to the editorial I provided in my comment.

Side note on how to handle self-loop in this problem: just to make sure each node in the maximum clique has a self-loop in the inverted graph.

So the ACs to problem D spiked after the hour mark in incredible fashion, what happened ? Did everyone just give up on C at the same time

actually a very good question

As for me, i just gave in on C, read D and solved it within minutes. There was about 1000 solves on D by that time, ig

Problem D was leaked on telegram on 1:16, most of the submissions after 1:16 are that one.

If this is true, I think moderators really have to take some action about that. I solved $$$C$$$ which was solved only by ~$$$560$$$ people and will still lose ~$$$50$$$ rating because ~$$$3160$$$ people solved $$$D$$$.

Ahh...same for me, I solved C, refreshed the dashboard and boom 3000 people already solved it.

If you have seen that code, you should put it here(since the contest is over) so that it will help others to know who are cheaters and also it may help Mike to catch the cheaters.

The code was behind the paywall so didn't see it but I suspect this is the Id of the person that leaked :- vedujod

bhai dhanda bnd kwaega kya.

I believe there were around 1500 ACs in 10 mins.

Cool!

How to solve D?

I found the largest and smallest winners possible for the tournament and assumed that everything in between them can win.

same, and it's pretty easy to figure out the smallest and largest ones

please discribe it. how to figure out that?

I maintained a list of the highest and lowest set of vertices that can be alive after $$$i$$$ rounds, while iterating through $$$i$$$. Take the highest one W.L.O.G. I define a set of vertices $$$V1$$$ to be higher than $$$V2$$$, sorted in decreasing order from $$$V1_1$$$ to $$$V1_k$$$ and $$$V2_1$$$ to $$$V2_k$$$, if $$$\exists i$$$ s.t. $$$V1_i > V2_i$$$ and they are equal for all indices less than $$$i$$$. In fact, for this case, I can even show that for the highest index $$$V$$$, there is no other sequence X s.t. $$$X_i > V_i$$$ for any index. Intuitively, if you pick 10 first and a guy picks 8 followed by say 6 — you could always add 6 to your list. Hence, such an approach is bound to give you the highest possible after $$$n$$$ rounds, i.e. I have basically shown the optimal subproblem property.

Now, finding $$$V$$$ is trivial. If in the given round, the higher skilled player wins, you simply keep the top half of the players in $$$V$$$. Else, you keep the 2nd, 4th, 6th highest and so on..

I have attached my code for reference

say we are trying to find lowest possible one to winner. if there are $$$X$$$ rounds that the higher one wins(or you can say there are $$$X$$$ "1"s). Then the lowest number must be $$$2^X$$$. It's not hard to understand. You can stimulate backwards and you will soon find that you need the $$$2^X-1$$$ ones to stay alive. I didn't quite find the mathematic prof, but I believe it's simple to understand.

Weirdly enough for me C is easier than D

How to solve C?

I did dp, calculating dp[i] in the following way: (let s[n+1][n+1] be the input array)

for each 1<=j<i we check if the place between j-th and j+1-th elements can be the last space between 2 different elements (a[j]!=a[j+1], but a[j+1]=a[j+2]=...=a[i]): if for each pair x,y that 1 <= x <= j < y <= i, a[x][y]!=2, then the difference of j-th and j+1-th is legal. Also we should check if a[x][y]=1 for each j < x <=y <= i. It's clear that if and only if these 2 conditions are true then the place between j-th and j+1-th elements can be the last space between 2 different elements. And so we add dp[j] to current dp[i]. Notice that we counted each correct string exactly 1 time (except if we can make all elements equal, check that case separately). And so we have dp[i].

If you have any questions feel free to ask! also can i please know the normal solution for D?) since i did something strange...

If there are $$$t$$$ 1s in the string, then the minimum champion skill is $$$2^t$$$.

Reason: if X won the round with the last 1, beating player Y, then the minimum number of people with skill level $$$\leq X$$$ is equal to the minimum number of people with skill level $$$\leq X$$$ in X's sub-tournament before this round PLUS the minimum number of people with skill level $$$\leq Y$$$ in Y's sub-tournament. So the lowest possible champion skill level when there are $$$t$$$ 1s is double the lowest possible champion skill level when there are $$$(t - 1)$$$ 1s.

Symmetrically, if there are $$$t$$$ 0s in the string, then there are a minimum of $$$2^t$$$ players with skill level $$$\geq$$$ the champion.

More generally, if there are $$$a$$$ 1s and $$$b$$$ 0s, then the lowest possible winner is $$$2^a$$$ and highest possible winner is $$$2^n - 2^b + 1$$$.

Thank you very much!

Detailed Explanation of Problem C

Same

Any idea how to solve E? I can reduce the problem to maximal weighted vertex cover with V = 40 and I think the question itself is NP-hard since you can reduce maximal weighted vertex cover to the question itself. Brute force seems to take 2^40.

Maybe, we had to use meet-in-the-middle to reduce it down to O(m*2^(m/2))

Notice that if 2 colors are adjacent to one another, then there is no solution which excludes both of those colors. Make a graph where two nodes a and b are connected by an edge iff it is possible to exclude both of those colors, which is actually when a and b are not adjacent colors(by adjacent we mean that there is an instance of color a that is adjacent to instance of color b) Now we are gonna solve the "flipped" problem. We are gonna find the maximum cost set of colors that we can exclude. That is equivalent to finding a maximum cost clique in the mentioned graph. You can find it with meet in the middle, split the nodes into two sets of size m/2, do some precalculations on both of the sets, and merge them. If it is needed i could explain the details of the meet in the middle part.

We have an edge between 2 colors if they are adjacent somewhere in the array. Now we are looking for the mvc of this graph. This is a standart problem and should be doable in many ways, the easiest is to apply meet in the middle and combine halves using a sos-dp like approach.

1767A - Cut the Triangle copy sample input button work incorrectly (additional empty lines) for me (ubuntu 22 firefox 107.0.1). All other problems samples copied fine.

Could anyone please give some hints for dp solution of problem C?

swapCDforces

C was a better question than D. Spent a lot of time on C. :(

I farted

you can solve D withoit even reading, just look at test samples (all of my friends solved like that)

i couldn't understand what the question even wants.... what does this line mean .... Let's say that an integer x is winning if it is possible to find a permutation p such that the team with skill x wins the tournament. Find all winning integers

Find all x

where there is a initial permutation of 1 to 2^n

which x can be the final champion

Well F*ck this contest

MathForces

YES

For people that like hacking: I'm sure my F solution can be hacked. I forgot to use my

coordarray and that makes things not work properly when switching between some different subtrees. Using it, my code passed in 1.8s and not using it, it's currently 4.8s. It shouldn't be too hard to create a bad case for the wrong solution.This is my 200th contest overall and I think I might be an expert finally :)

UnsortedForces

Just been reminded once again that educational rounds are never great contests:

They lack testers to ensure the usual checks

Only if you are lucky will editorials be released within 2 days.

Last question is way,way above div 2 participants.

Of course I'm not saying all other contests are great but these has been consistent with every "educational" round.

While I agree with #3 and partially with #1, care to elaborate on #2?

We have a strict policy of posting the editorial in the next 24 hours after the contest. I don't remember if we have ever violated this policy more than by half an hour. When have we ever delayed it by 2 days (as you say, we do it often)? Or is it just an accusation for the sake of accusation?

#837

contest on 12.11 and tutorial on 12.14

I have to apologize, I completely forgot that this was one of our ERs. Sorry for my bad memory.

why sooooo muuuuuch downvotes

Hm, I unfortunately can't check the exact dates of contests more than a month ago as they don't include the timestamp. I do remember commenting before about lateness of edu round editorials, and there must be some reason for that. I believe it should at least be true that editorials are released, on average, later than other contests though (most release practically right after the contest ends).

24 hours feels a bit long at a time where nearly all contests have editorials posted within 15 mins (or at least I ensure that any round I'm involved with does). Why can't they be written before the contest, or, worst-case, during the contest?

I prefer having editorials later because that encourages discussion. People have the incentive to exchange their own approaches to the problems. Maybe you could say that it provides an additional educational value to the contest :) The intended approach might turn out to be either more tricky overall or just less intuitive for you in particular. So having alternative ones to check out is great.

Feels like a bit of an excuse for not having editorials out on time. People always share their own solutions if they deviate from the intended solution in the slightest on the editorial page anyways, also it's more organised than having to scroll through complaints/praises about problems.

I share the same sentiment as Scarlet. While the idea of encouraging discussion is good, I would question the effectiveness of delaying editorials for that purpose. In another forum where discussion can be focused on each problem rather than a general contest (e.g. AoPS) that would make sense as you can find discussion for each problem being more concentrated and easier to find. Here, most discussions would be scattered; and I believe that people who would want to post solutions would post them anyway. (Without posting editorials, there will be more people with "how to solve qn x" posts, rather than encouraging more people to post solutions, or at least that's what I think.)

In addition sometimes I may have an idea of how to solve something but not sure about the implementation; I might want to refer to the editorial instead of the general comments thread, or perhaps more efficient ways/nuances I may have missed in easy questions. At least for me I would prefer them soon rather than later while questions are still fresh.

Adding editorials leave people with the choice, and I think that choice would benefit different groups of people.

Considering the frequency of educational rounds and the fact that just a few people are responsible for 2-3 rounds every month, I don't think we should expect more.

Sure, I did not mention anything about expecting more. All I mentioned that, if you were to join an educational round, do not be too surprised if there may be issues such as difficulty balancing, and as the authors have mentioned, deliberately delayed editorials by 24 hours.

In A why copying sample input gave two lines between each input instead of one?? Got two runtime errors beacause of that.

Am I the only one who found C a bit easier than D. (I couldn't even solve D :( )

Can you please write the idea of your solution to C? I solved D and I will write the idea of my solution in a comment.

My solution to D goes as follows:

We imagine the way of a candidate to the championship. Let's define two variables $$$s$$$ (number of players smaller than our candidate), $$$b$$$ (number of players bigger than our candidate) and set them $$$= 0$$$ at the beginning. So we run from $$$1$$$ to $$$n$$$ and do the following:

1) If $$$t[i]$$$ $$$=$$$ $$$1$$$, then this candidate has to be bigger than another player in this stage, so it has to be bigger than that particular player and all the players smaller than that player. Thus, we set $$$s$$$ $$$+=$$$ $$$1 + s$$$.

2) If $$$t[i]$$$ $$$=$$$ $$$0$$$, then this candidate has to be smaller than another player in this stage, so it has to be bigger than that particular player and all the players smaller than that player. Thus, we set $$$b$$$ $$$+=$$$ $$$1 + b$$$.

Finally, we print all the number from $$$s + 1$$$ to $$$2^{n} - b$$$.

I feel like the given samples in D were bad, as they led to a lot of guessing solutions, and perhaps it would have been better to put less obvious samples.

I got AC with proving the minimum and maximum elements however it seems that most didnt.

I guess this is the reasoning why C was put before D, however, problemsetters forgot very few people know how to use DP in div2.

Also can the last problem be brought down to 2800R maximum? it makes no sense for it be more for division 2 participants

Last problem uses things that I'd expect CMs to know. I think it's fine for an educational contest, teaching that people have the tools to solve these problems.

the part you are forgetting is its also a rated round.

if it was only for educational purposes, that would make sense.

But having a problem which is impossible to be solvable by an actual div2 participant is not fair imo.

It's not impossible to be solvable by an actual div2 participant. Perhaps during contest time it'd very unlikely to be solved by div2 participants, but that could be said for the last problem in almost any problemset, regardless of div, platform, contest format, etc.

Why does it being rated change anything? Especially for this being an educational round, I think it fits the purpose of teaching something to the participants.

An easier problem teaching the same thing could be chosen.

More than half of the LGMs couldn't AK the contest, which almost never happens in a div2 contest.

Check any div 3 or div 4, and i can bet you there will even be experts/CMs having Aks, so it only makes sense to atleast allow some GMs to Ak div2s.

People dont like to solve problems much higher rated than them, and this is nothing different. For purely educational purposes of the masses, participants would benefit from an easier task.

There was abnormal activity in the AC submissions of question D

in Problem D test 1 ( 101 ) how player 5 can win and all permutation are 101,110,011

There is no need to deal with any permutations.

...I think you misunderstood the problem. The problem is asking what are the possible skill levels of the champions, and the string represents whether each round was won entirely by higher-skill players (1) or lower-skill players (0). There is no point in considering any string other than the given one.

An example where 5 wins:

Skill Levels: $$$[5, 1, 8, 7, 4, 3, 6, 2]$$$

Thanks i understand the problem now.

Probably one of the best EDU rounds! Could solve only two, but enjoyed brainstorming on problem D. Kudos to the authors.

Edu round 139 vs Edu round 140

Unlike many others, I struggled with D, but found C and E quite standard. Great problems for an educational contest which is supposed to have standard problems.

Is there a solution to problem F that is not 4-dimensional Mo + sqrt decomposition?

If you do ETT carefully, you can order subtrees in a way that passing through every subtree gives you at most O(NlogN) operations by going to small subtrees first. From there, mapping these subtrees to a point that is the number of operations necessary going from the first subtree to it, you reduce it to a 2-dimensional Mo problem. I actually find it surprising that 4D Mo by itself worked for you in under 5s.

Can you explain how your F works? Your code seems to be the same 4D Mo's as mine, except I used an array of sets to store values at each count and that TLE'd. You're doing some sqrt thing inside

S?Not talking about his solution but a way to get rid of the sets:

do SQRT decomposition on the colors by keeping information of "how many colors have frequency f within this bucket". I'm assuming that you know how to find the max frequency without sets. Then, you can find out if the bucket has some element of max frequency in O(1) so just pass through buckets in order to find the first bucket that has such element and pass through the bucket to find the element. Read the last for in my solution before printing the answer, it should be self-explanatory.

Hi, I saw that the meet-in-the-middle tag and 1767E - Algebra Flash, and I though I want to share a (in my opinion) simpler solution. A little disclaimer: My asymptotic running time is worse than what you can get with MITM, but is in my opinion simpler. I did not invent this solution, it is well known in the field of parameterized complexity. Step one is to reduce the problem to vertex cover in a small graph (a graph with at most m-1<=39 vertices). This is the same as other solutions I presume.

I'll use O* notation in this explanation, which is the same as big O, except polynomial factors are ignored. I'll also use V to denote the number of vertices in the graph, and E to denote the number of edges.

A simple O*(2^V) solution would be to try all subsets and see if they are vertex covers. Optimizing this using meet in the middle is what i presume is the most common way to solve this problem. The solution described in this comment is based on a different O*(2^V) solution:

Look at a single edge. At least one of the endpoints of this edge has to be removed. Just trying both leads to a branching tree with O(2^V) vertices, so the running time of this is also O*(2^V).

A simple improvement to this solution is to instead of looking at a single edge, look at a vertex and its neighborhood. If you don't remove a vertex, then all its neighbors must be removed. So if you branch on the choice between removing a vertex and its neighborhood, it should be faster if the neighborhood is big, since you remove more vertices. But what if we can't find a vertex with big neighborhood? If all vertices have degree 0 or 1, then we just have isolated vertices and isolated edges, which is an easy special case. Otherwise we have get the following recurrence relations for the number of vertices in the branching tree:

and solving this result in

Which is fast enough to solve the problem (yes this is the golden ratio (rounded up) :)).

Here is a submission: 185546658.

Some extra notes: I also got accepted without any special case for small max degree: 185542957. Possibly due to bad tests, but I think also picking highest degree vertex alone could be a good enough rule for this problem, because of the way the graph is constructed. The worst case for such a solution would be a set of disjoint edges, but the graph is connected. Creating a graph which reduces to a set of disjoint edges after a few removals wastes too many vertices by having too high degree.

Better asymptotic running time is possible using a more general base case. A graph where all degrees are smaller than or equal to 2 is a set of disjoint vertices, paths, and cycles, which is also easy to solve in polynomial time, so in the branching we can assume degree 3 or more and get

which solves to

which is still worse than the O(1.4143^V) you can get using MITM and convolutions.

I made a little mistake in my analysis here. Removing the entire neighborhood of a vertex will make the vertex isolated, which means you might as well delete the closed neighborhood. So actually the solutions with special case for max degree <= 1 and <= 2 should have these recurrences instead respectively:

The latter of which is actually asymptotically better than the MITM solution.

According to tags C can be solved with DSU, can anyone explain C with DSU? I can understand overlapping subarrays with '1' can be united, but how to check for '2'?

Problem D is really easier than C! Anyway, thanks for the very nice round!

How to solve E?

can anybody please explaing Problem B(why we should take elements in sorted order from 2<=i<=n and apply operations only on 1st element but not on any element from 2<=i<n)

Because we need to find the maximum height the first one can achieve so we will check for all the remaining towers which are higher than the first tower and whose height we can add on to the first one so we iterate from 2nd to the last tower and check if the height is more than the first tower at every point and keep adding the required height on the first one as we move ahead. This should explain why you should also consider the nth tower.

I need proof for my above statement brother,don't want the procedure to get answer,can anybody please explain?it will be really helpful.

Take for example this given array

2 7 5

If you do not sort and apply the operation as usual. in the first step 2 increases to 5 and 7 decreases to 4. now for the second iteration the height of tower 1 is already 5 which is not greater than the height of third tower[5] so the ans in this case will be 5.

But if you sort the array from 2 to n the array becomes

2 5 7

Now when you apply the operation for 2nd tower. the first towers height increases to 4 and second tower becomes 3.

for the third tower the current height of first tower is 4 and of third tower is 7. so when we apply the operation 4 becomes 6 and 7 becomes 5.

In this case answer is 6 which is correct answer.

Hope this explains.

Can anyone reply me 'A' solution in a efficient way with explanation please? Thanks!

Watch this, if are comfortable in Hinglish(Hindi+English)

Hope to become Master __

Both accounts are mine. I submitted the solution from both of my account. sorry for that, will not happen. Link to Screenshot

Ajitkumar01 and ajitkumar.infinity24 are both my account.

It seems that Problem C can be solved with $$$O(n^2)$$$ time and $$$O(n \cdot \log n)$$$ memory.

Good problem!

So why not make the value of $$$n$$$ larger?

can anybody please explain Problem C?

Full Explanation of Problem C

A round of assumption and assumption... Without getting think of any 'Lemma'.. FO-> :(

Auto comment: topic has been updated by awoo (previous revision, new revision, compare).