As you guys may have noticed, in Div 4 contests on Codeforces, the queue and the system testing phase usually take forever (a submission can get stuck in the queue for up to 30 minutes, and system testing sometimes lasts literally half a day). So why don't problem setters just... not do that?

What I mean is, why do authors deliberately make both the time limit and the constraints so large? I'm actually really confused: Div 4 is well known for its slow queue, so at some point someone should have thought about enforcing guidelines on setting appropriate constraints for optimal judging speed in "crowded" contests, such as Div 3 and Div 4.

To really understand what I mean, let's take a look at this problem: 1980C - Sofia and the Lost Operations. It has a time limit of 2 seconds, and the sums of $$$n$$$ and $$$m$$$ over all test cases are bounded by $$$2 \cdot 10^5$$$. You see, there's actually no reason not to halve both the time limit and the constraints, since it's not like the limits are meant to let $$$O(n \log n)$$$ algorithms pass while failing $$$O(n^{1.5})$$$ or $$$O(n^{1.75})$$$ ones. Halving them changes nothing inherent about the problem, and there are numerous merits in return: a faster queue, faster judging, happier participants, etc. So... what is stopping authors from setting the constraints to {$$$1$$$ second, $$$n \leq 10^5$$$}, or even as low as {$$$0.5$$$ seconds, $$$n \leq 5 \cdot 10^4$$$}? I respect a problem setter's decision to set the constraints of their problem to particular numbers, but I simply think there are things that can be done to make every party happy.
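
To put rough numbers on this, here is a small sketch that estimates how much of the time limit each complexity class would use under the current setting and the two proposed ones. It assumes about $$$10^8$$$ simple operations per second and ignores constant factors; both are my own simplifications, not official judge figures, and `OPS_PER_SECOND`, `COSTS` and `SETTINGS` are names made up for this illustration.

```python
import math

# Assumption (not an official figure): the judge runs about 1e8 simple operations per second.
OPS_PER_SECOND = 1e8

# Operation counts for a few complexity classes, with constant factors ignored.
COSTS = {
    "n log n": lambda n: n * math.log2(n),
    "n^1.5": lambda n: n ** 1.5,
    "n^1.75": lambda n: n ** 1.75,
    "n^2": lambda n: n ** 2,
}

# (time limit in seconds, bound on n): the current setting and the two proposed ones.
SETTINGS = [(2.0, 200_000), (1.0, 100_000), (0.5, 50_000)]


def fraction_of_limit(limit, n, cost):
    """Estimated running time divided by the time limit (above 1 means too slow)."""
    return cost(n) / OPS_PER_SECOND / limit


for limit, n in SETTINGS:
    row = ", ".join(
        f"{name}: {fraction_of_limit(limit, n, cost):.3f}"
        for name, cost in COSTS.items()
    )
    print(f"TL = {limit} s, n = {n}: {row}")
```

Under this crude model, the same classes fit and the same classes time out in all three settings, and the quadratic solution stays far over the limit each time. Real solutions have constant factors, so treat this as a sanity check rather than a proof.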

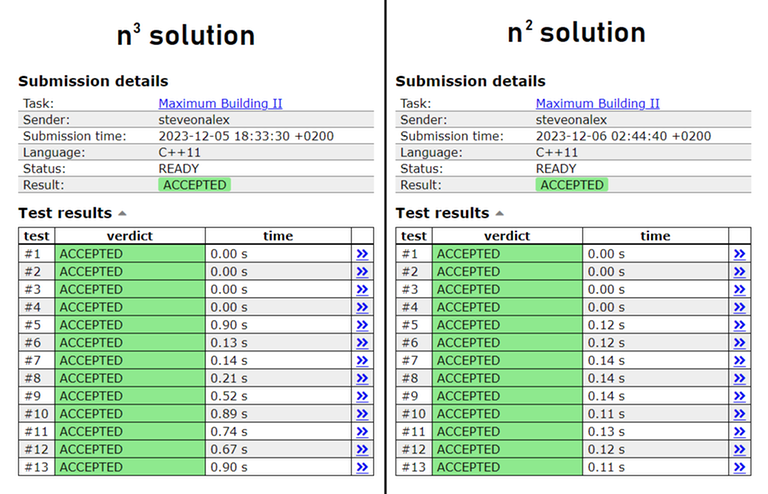

Again, this doesn't mean that every problem's constraints should be lowered. For example, 1985H2 - Maximize the Largest Component (Hard) works best with its current constraints, as going any lower would mean that a well-implemented, simple brute force with complexity $$$O(n \cdot m \cdot \min(n, m))$$$ would be nearly indistinguishable from an intended but badly implemented solution.
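
A quick sketch of why: if the constraint is a bound on the grid area $$$n \cdot m$$$ (I'm assuming that form here, with $$$10^6$$$ as an illustrative value), the brute force is worst on a square grid, where it costs about $$$(n \cdot m)^{1.5}$$$ steps. The helper names below are made up for this illustration.

```python
import math


def brute_force_ops(n, m):
    """Step count of the O(n * m * min(n, m)) brute force, constant factors ignored."""
    return n * m * min(n, m)


def worst_case_ops(area):
    """Largest brute-force step count over all grids with n * m <= area."""
    best = 0
    # By symmetry we only try n <= m, so min(n, m) = n and n <= sqrt(area).
    for n in range(1, math.isqrt(area) + 1):
        m = area // n  # the widest grid that still fits the area bound
        best = max(best, brute_force_ops(n, m))
    return best


# Assumed area bounds: an illustrative "current" value and a 10x smaller one.
for area in (10**6, 10**5):
    print(f"n * m <= {area}: up to {worst_case_ops(area):.2e} steps")
```

With an area bound of $$$10^6$$$ the brute force needs up to $$$10^9$$$ steps, while at $$$10^5$$$ it drops to roughly $$$3 \cdot 10^7$$$, which a tight implementation could plausibly squeeze through alongside a slow intended solution.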